AI inferencing

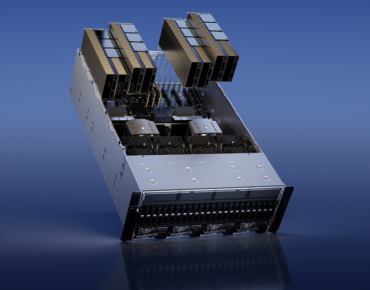

Nvidia Tees Up New Platforms for Generative Inference Workloads like ChatGPT

Today at its GPU Technology Conference, Nvidia discussed four new platforms designed to accelerate AI applications. Three are targeted at inference workloads for generative AI applications, including generating text, ...

d-Matrix Gets Funding to Build SRAM ‘Chiplets’ for AI Inference

Hardware startup d-Matrix says the $44 million it raised in a Series A round this week (April 20) will help it continue development of a novel “chiplet” architecture that ...

Nvidia Dominates MLPerf Inference, Qualcomm also Shines, Where’s Everybody Else?

MLCommons today released its latest MLPerf inferencing results, with another strong showing by Nvidia accelerators inside a diverse array of systems. Roughly four years old, MLPerf still struggles to ...

AWS Boosting Performance with New Graviton3-Based Instances Now Available in Preview

Three years after unveiling the first generation of its AWS Graviton chip-powered instances in 2018, Amazon Web Services just announced that the third generation of the processors – the ...

NeuReality Partners with IBM Research AI Hardware Center on Next-Gen AI Inferencing Platforms

In February of 2019, IBM launched its IBM Research AI Hardware Center in Albany, N.Y., to create a global research hub to develop next-generation AI hardware with various technology partners ...

Nvidia’s Base Command On-Demand Platform for AI Training Now Generally Available in North America

Nvidia’s hosted AI development hub, Nvidia Base Command Platform, was unveiled in May as a way for enterprises to take on complex AI model training projects on a subscription ...

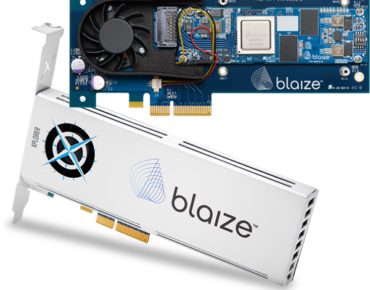

AI Edge Chip Vendor Blaize Gets $71M in Series D Funding to Expand Its Edge, Automotive Products

Seven months ago, AI edge chip vendor Blaize launched a new no-code AI software application to make it easier for customers to build applications for its AI chip-equipped PCIe ...

Mythic AI Targets the Edge AI Market with Its New Smaller, More Power-Efficient M1076 Chips

It has been a busy seven months for AI chip startup Mythic AI. Seven months ago, the company unveiled its first M1108 Analog Matrix Processor (AMP) for AI inferencing. ...

With $70M in New Series C Funding, Mythic AI Plans Mass Production of Its Inferencing Chips

Six months after unveiling its first M1108 Analog Matrix Processor (AMP) for AI inferencing, Mythic AI has just received a new $70 million Series C investment round to bring ...

AI Startup NeuReality Aims Its Inference Platform to ‘Replace Outdated AI System Architecture’

Only three months after emerging from stealth with $8 million in seed funding, Israeli AI startup NeuReality has revealed plans for its new NR1-P AI-centric inference platform, which ...