Nvidia’s Base Command On-Demand Platform for AI Training Now Generally Available in North America

Nvidia’s hosted AI development hub, Nvidia Base Command Platform, was unveiled in May as a way for enterprises to take on complex AI model training projects on a subscription basis, while avoiding huge monetary investments in on-premises hardware and software.

Now, after two months of early access experimentation for some customers, Nvidia has announced that the Base Command Platform is generally available to customers in the United States and Canada that want to take advantage of its on-demand AI training capabilities.

The Base Command Platform lets enterprises perform batch training runs on their data using single-node, multi-node, single-GPU or multi-GPU hardware configurations, all as a hosted SaaS offering, Stephan Fabel, the senior director of product management for the service, told EnterpriseAI.

“All of this is wrapped in a multi-tenant environment,” said Fabel. That environment is hosted by Equinix, Nvidia’s data center partner on the project. “There are admin controls that are typical for a cloud environment. You can create teams, you have access rights and sharing of data sets, training runs and all of that.”

Using Base Command, enterprises can create and train their models and then use Nvidia’s Fleet Command to deploy and manage those models in production, said Fabel.

Nvidia Base Command’s hosted subscription services are powered by the company’s high-performance DGX SuperPod systems, which deliver large amounts of computing power for AI and other compute-hungry workloads.

The nascent Nvidia Base Command on-demand services also include a partnership with cloud services and storage vendor NetApp, which is providing the data storage for the hosted AI workloads. The Nvidia-NetApp partnership for the Base Command offering was unveiled in early June at Computex 2021.

The hosted Nvidia Base Command services are the first offering in Nvidia’s recently announced Nvidia AI LaunchPad partner program.

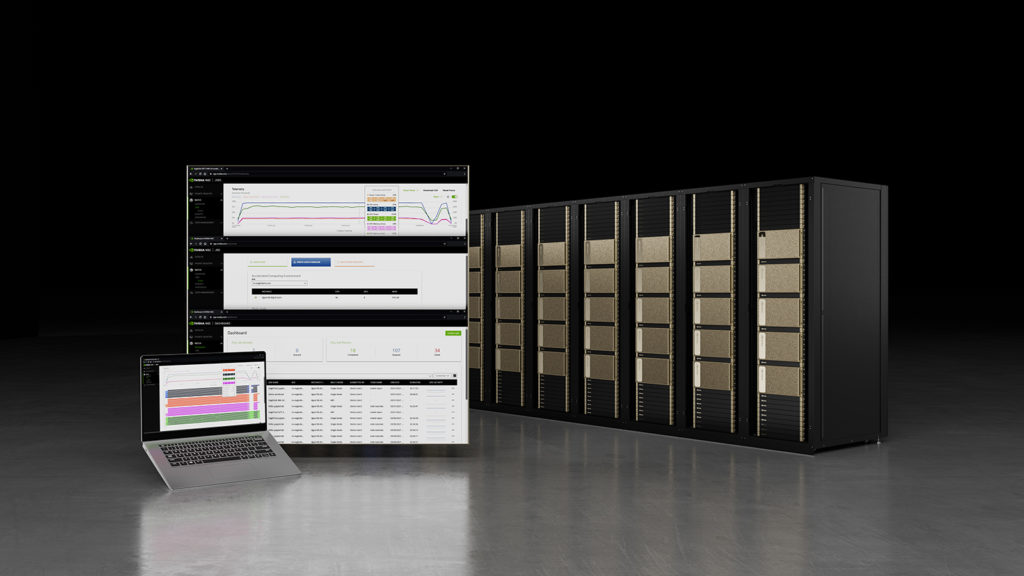

Nvidia Base Command portal views (left), accompanied by a bank of Nvidia DGX machines. Image courtesy: Nvidia

Monthly subscription pricing for Nvidia Base Command Platform starts at $90,000, with a three-month minimum. For that amount, customers get the use of a minimum of three and up to 20 DGX systems. The minimum $90,000 monthly pricing does not include fees for the NetApp storage.
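To put those figures in perspective, the smallest possible commitment can be worked out directly. The short Python sketch below is illustrative only: it uses the $90,000 starting price and three-month minimum quoted above, assumes the starting price applies to every month of the term, and leaves out NetApp storage fees because no figure was given for them. The function and constant names are hypothetical.

```python
# Figures quoted in the article; storage fees are excluded (no price was given).
MONTHLY_BASE_USD = 90_000   # starting monthly subscription price
MIN_TERM_MONTHS = 3         # minimum subscription length


def minimum_commitment(months: int = MIN_TERM_MONTHS) -> int:
    """Return the base subscription cost in USD for a term of `months`.

    Assumes the starting monthly price applies to every month of the term.
    """
    if months < MIN_TERM_MONTHS:
        raise ValueError(f"term must be at least {MIN_TERM_MONTHS} months")
    return MONTHLY_BASE_USD * months


if __name__ == "__main__":
    # Smallest possible commitment at the starting price.
    print(f"${minimum_commitment():,}")
```

Under those assumptions, the entry-level commitment comes to $270,000 before storage costs.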

The early access program let some customers kick the tires on the services. Limiting general availability to U.S. and Canadian customers at first is meant to scale out the services gradually so that customer expectations can be met from the start, said Fabel.

Presently, Base Command is only being offered as a hosted service.

“The problem that we were trying to address is … it is relatively straightforward to buy the hardware, you get those SKU numbers, you work with your preferred vendor,” said Fabel. “And typically … you know how to get your hands on data scientists who are now supposed to use this hardware. The problem is, how do you marry those two? How do you get the data scientists to use this hardware or this infrastructure optimally? That is the gap that we are seeking to bridge with Base Command Platform, because this is exactly where you waste time as an enterprise.”

By using Base Command services, customers can pay only for the slices of DGX servers they need, when they need them, without having to purchase the hardware, said Fabel.

“When you look at what customers are actually getting, they are getting a slice of a SuperPod, which is more than a certain number of DGXs,” he said. “It is important to understand that the performance guarantees that you get from a SuperPod reference architecture are more than the sum of its parts, when it all comes together with the right networking, the right switches, the right hardware.”

For enterprises that want to do this kind of work without making the sizable investments to acquire these hardware and software platforms on their own, the Nvidia Base Command Platform services can make a lot of sense, said Fabel.

“The idea is democratization of AI, making something that [has not been] attainable now attainable, and in a predictive, OPEX fashion,” he said.

Karl Freund, founder and principal analyst of Cambrian AI Research, told EnterpriseAI that the general availability of Nvidia Base Command is a notable development for enterprises that are working with AI.

“While seemingly expensive, this service offering lets enterprises start off without major capital outlays for GPU-equipped servers,” said Freund. “They can easily get started with competently configured hardware and software in an Equinix data center, try out their AI ideas and then decide to continue on cloud or on premises. I think this is a smart idea to ease the on-ramp for enterprise AI adoption.”

Another analyst, Rob Enderle, principal of Enderle Group, agreed.

“IT is shifting from a CAPEX to OPEX model, which better ties costs to revenues and provides a better hedge during times of revenue volatility,” said Enderle. “This pay-as-you-go plan will allow an IT organization to become familiar with the technology without massively investing in it upfront, reducing their risk while shortening the time to value significantly.”

In a related announcement, machine learning developer tools startup Weights & Biases today unveiled new MLOps software that can be used with Nvidia Base Command Platform. The application features experiment tracking, data versioning, model visualization and more to help large-scale enterprise AI teams visualize and understand their model performance, track data lineage and collaboratively iterate on models.