d-Matrix Gets Funding to Build SRAM ‘Chiplets’ for AI Inference

Hardware startup d-Matrix says the $44 million it raised in a Series A round this week (April 20) will help it continue development of a novel “chiplet” architecture that uses 6 nanometer chip embedded in SRAM memory modules for accelerating AI workloads. The goal is to deliver an order of magnitude efficiency boost in inference for today’s large language models, which are pushing the envelope of what natural language processing (NLP) can do.

The popularity of large language models such as OpenAI’s GPT-3, Microsoft’s MT-NLG, and Google’s BERT is one the most important developments in AI over the past 10 years. Thanks to their size and complexity, these transformer models can demonstrate an understanding of human language at a level that was previously not possible. They can also generate text so well that the New York Times recently wrote they will soon be writing books.

The surge in adoption of these transformer models has caught the attention of large companies, which are relying on them for a variety of uses, including creating better chatbots, better document processing systems, and better content moderation, to name a few. But delivering the last-mile of connectivity for this AI is a difficult problem that is getting increasing atention.

The surge in adoption of these transformer models has caught the attention of large companies, which are relying on them for a variety of uses, including creating better chatbots, better document processing systems, and better content moderation, to name a few. But delivering the last-mile of connectivity for this AI is a difficult problem that is getting increasing atention.

In particlar, the AI inference problem has caught the attention of a pair of engineers with backgrounds in the semiconductor industry, including CEO Sid Sheth, who cut his teeth at Intel before working at startups, and CTO Sudeep Bhoja, who started at Texas Instruments. Both worked at Inphi (now Marvell) and Broadcom before co-founding d-Matrix in 2019.

“We think this is going to be around for a long time,” Sheth says about the large language models that are now backing Alexa and Siri. “We think people will essentially kind of gravitate around transformers for the next five to 10 years, and that is going to be the workhorse workload for AI compute for the next five to 10 years.”

But there’s a problem. While today’s large langauge models are well-served by GPUs for the training portion, the best computing architecture for the inference portion has yet to be defined. Many customers are running inference workloads on CPUs today, but that’s becoming less feasible as the models get bigger. And that puts customers in a quandary, Sheth says.

“I think what the customers are trying to figure out is, [CPUs] aren’t very efficient, so should I make the jump to GPUs? Should I make the jump to accelerators?” Sheth tells Datanami. “And if I were to make a jump directly to accelerators and skip the GPU, then what is the right accelerator architecture I should jump to? And that’s where we come in.”

Whatever architecture emerges, Sheth doubts it will be the GPU. While GPUs are making inroads for inference, they’re not ideal for the workload, he says.

“The GPU was never really built for inference,” he says. “It was really built for training. It is all about high-performance computing. Inference is all about high-efficiency computing.”

Memory Whole

The first thing d-Matrix did back in 2019 was determine what type of memory it would use for the new in-memory processing architecture that Sheth and Bhoja had in mind. While DRAM is mature and popular for all sorts of computing, the cost of moving data in and out of DRAM was just too great, especially when all the weights in a modern language model with billions of parameters need to be kept in memory in order to support the matrix math required for optimal computing.

“For example, the DRAM access is of the order of 60 picojoules per byte and the compute is 50 to 60 femtojoules. It’s three orders of magnitude [higher],” Bhoja tells Datanami. “So you don’t want to move something from DRAM and compute it once. You would like to have the compute within the memory. And the DRAM processes are not very good at that.”

There had been some interesting developments in flash memory, Bhoja continues. “That is a little more interesting than DRAM. So what you do is you store the weights on the flash cell and you compute on the flash cell,” he says. “But as these large language model become very big, it was not possible to build flash cells that could hold all of them, all the weights in one place.”

Ultimately, the pair couldn’t find a flash technology that was reprogrammable and efficient, so they kept looking.

“The new memory types are interesting, RRAM [resistive RAM] and MRAM [magnetoresistive RAM],” Bhoja says. “But they’re not quite there yet. So when we looked at the gamut of everything, SRAM was the only technology which was mature enough. It was available in mainstream CMOS process. We didn’t have to re-invent a process. We could mix compute within memory and it sort of worked out.”

SRAM, or static random access memory, is faster and more expensive than DRAM. It’s typically used for the cache and internal registers of a CPU, while DRAM is typically used for main memory. While SRAM loses its data when power is turned off, like DRAM, SRAM also has the benefit of not needing to be periodically refreshed, as DRAM does.

SRAM and the POC

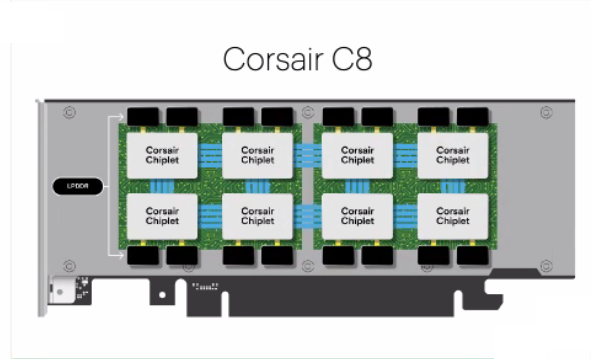

d-Matrix developed a proof of concept called Nighthawk to show that its SRAM in-memory computing could actually work. Nighthawk showed the feasibility of d-Matrix’s “chiplet” approach, where it embeds a chip inside SRAM modules, which sit on cards that plug into the PCI bus.

d-Matrix plans to package its IP in several ways, including as software, as PCIe cards (shown), and eventually servers (Image courtesy d-Matrix)

“We have SRAM and we basically store the weights of the model inside our SRAM array, and then we compute in SRAM,” Bhoja says. “We have mixed compute and memory together. DRAM is not the ideal process for that. SRAM is the ideal process.”

There are tradeoffs between DRAM and SRAM, Bhoja says. As far as a pure memory architecture goes, DRAM is better. But when you want to mix memory and compute, SRAM is better, he says.

“DRAM transistors are much more dense,” he says. “SRAM processors are bigger, so the chips can be bigger. This is the only way we see to accelerate transformers.”

Bhoja says the transformer models are large that it takes an enormous amount of compute to process a single sentence. He says an Nvidia DGX box with eight A100 GPUs can parse half a sentence per second on GPT-3. On a per-watt basis, the inference demands of GPT-3 are eight sentences per second per watt.

“So we are trying to build custom silicon to go solve that problem, the energy efficiency problems of translating transformers,” he says. “There’s a big gap between what you can train and what you can deploy.”

Nighthawk and Jayhawk

The company has completed its first proof of concept for its Nighthawk chiplet architecture. The company has contracted with TSMC to develop these chiplets with a 6 nanometer process. Tests show that d-Matrix’s SRAM technology 10 times more efficient than an Nvidia A100 GPU when it comes to inference workloads, Bhoja says.

d-Matrix has also developed its die-to-die interconnect, called Jayhawk, which will allow multiple servers equipped with Nighthawk chiplets to be strung together for scale-out or scale-up processing.

“Now that both these critical pieces of IP have been proven out, the team is busy executing on…Corsair, which is the product that will be going into deployment at customers,” Sheth says.

The $44 million in Series A that d-Matrix announced today will be instrumental in developing Corsair, which should be ready by the second half of 2023, Sheth says.

The Series A was led by US-based venture capital firm Playground Global, with participation by M12 (Microsoft Venture Fund) and SK Hynix. These new investors join existing investors Nautilus Venture Partners, Marvell Technology. and Entrada Ventures.

Sasha Ostojic, a venture partner at Playground Global, says it’s clear that a breakthrough is needed in AI compute efficiency to serve the burgeoning market for inference among hyperscalers and edge data center markets.

“d-Matrix is a novel, defensible technology that can outperform traditional CPUs and GPUs, unlocking and maximizing power efficiency and utilization through their software stack,” Ostojic says in a press release. “We couldn’t be more excited to partner with this team of experienced operators to build this much-needed, no-tradeoffs technology.”

While it’s targeting hyperscalers with its hardware, the plans call for d-Matrix to eventually offer a small server that even small-and-midsize businesses can deploy to run their AI workloads.

This story originally appeared on EnterpriseAI sister site Datanami.

Related

Alex Woodie has written about IT as a technology journalist for more than a decade. He brings extensive experience from the IBM midrange marketplace, including topics such as servers, ERP applications, programming, databases, security, high availability, storage, business intelligence, cloud, and mobile enablement. He resides in the San Diego area.