Nvidia Launches AI Factories in DGX Cloud Amid the GPU Squeeze

(JLStock/Shutterstock)

Nvidia is now renting out its homegrown AI supercomputers with its newest GPUs in the cloud for those keen to access its hardware and software packages.

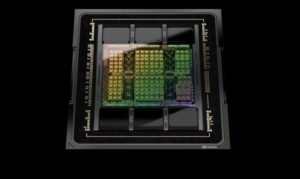

The DGX Cloud service will include its high-performance AI hardware, including the H100 and A100 GPUs, which are currently in short supply. Users will be able to rent the systems through Nvidia’s own cloud infrastructure or Oracle’s cloud service.

“DGX Cloud is available worldwide except where subject to U.S. export controls,” said Tony Paikeday, senior director, DGX Platforms at Nvidia.

The cloud will be available in Nvidia’s cloud infrastructure, which includes its DGX systems located in the U.S. and U.K. DGX Cloud will also be available through the Oracle Cloud infrastructure.

Nvidia announced the wide availability of DGX Cloud on Tuesday, after first unveiling the service at its GTC conference in March. The announcement followed a string of AI-in-cloud announcements last week.

Rival Cerebras Systems is installing AI systems that will deliver 36 exaflops of performance in cloud services run by Middle Eastern cloud provider G42. Tesla announced it was starting production of its Dojo supercomputer, which will run on its homegrown D1 chips and deliver 100 exaflops of performance by the end of next year. The benchmarks vary depending on the data type.

Tesla CEO Elon Musk last week talked about shortages of Nvidia GPUs for the company’s existing AI hardware and said that Tesla was waiting for supplies. Users can lock down access to Nvidia’s hardware and software on DGX Cloud, but at a hefty premium.

The DGX Cloud rentals include access to Nvidia’s cloud computers, each with H100 or A100 GPUs and 640GB of GPU memory, on which companies can run AI applications. Nvidia’s goal is to run its AI infrastructure like a factory — feed in data as raw material, and the output is usable information that companies can put to work. Customers do not have to worry about the software and hardware in the middle.

Paikeday also mentioned, “DGX Cloud serves a critical need: dedicated compute for multi-node training of large complex generative AI models like large language models.” Paikeday continued, “Enterprises will also get a deep bench of technical expertise to deploy and operate the environment supporting such workloads.”

Pricing for DGX Cloud starts at $36,999 per instance per month.

That is about double the price of Microsoft Azure’s ND96asr instance with eight Nvidia A100 GPUs, 96 CPU cores, and 900GB of RAM, which costs $19,854 per month. Nvidia’s base price includes AI Enterprise software, which provides access to large language models and tools to develop AI applications.
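The "about double" comparison can be checked with a few lines of arithmetic. The sketch below uses only the two monthly prices quoted above; the variable and function names are illustrative, and it does not account for differences in GPU generation, memory, or bundled software between the two offerings.

```python
# Monthly list prices as quoted in the article (USD).
DGX_CLOUD_MONTHLY = 36_999      # DGX Cloud starting price per instance
AZURE_ND96ASR_MONTHLY = 19_854  # Azure ND96asr instance with eight A100 GPUs


def price_ratio(price_a: float, price_b: float) -> float:
    """Return how many times larger price_a is than price_b."""
    return price_a / price_b


ratio = price_ratio(DGX_CLOUD_MONTHLY, AZURE_ND96ASR_MONTHLY)
premium = DGX_CLOUD_MONTHLY - AZURE_ND96ASR_MONTHLY

print(f"DGX Cloud costs {ratio:.2f}x the Azure instance")
print(f"Monthly premium: ${premium:,}")
```

On these figures the ratio comes out a little under two, which is consistent with the "about double" description.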

The rentals include a software interface called the Base Command Platform so companies can manage and monitor DGX Cloud training workloads. The Oracle Cloud has clusters of up to 512 Nvidia GPUs with a 200 gigabits-per-second RDMA network, and includes support for multiple file systems such as Lustre.

All major cloud providers have their own deployments of Nvidia’s H100 and A100 GPUs, which are different from DGX Cloud.

Google earlier this year announced the A3 supercomputer with 26,000 Nvidia H100 Hopper GPUs. Its setup resembles Nvidia’s DGX SuperPOD, which spans 127 DGX nodes, each equipped with eight H100 GPUs. Amazon’s AWS EC2 UltraClusters with P5 instances will be based on the H100.

“We expect DGX Cloud to attract new generative AI customers and workloads to our partners’ clouds,” Paikeday said.

With lockdown also comes lock-in: Nvidia is trying to get customers to use its proprietary AI hardware and software technologies based on its CUDA programming model. That could prove costly for companies in the long run, as they would pay for both software licenses and GPU time. Nvidia said investments in AI will benefit companies in the form of long-term operational savings.

The AI community is pushing open-source models and railing against proprietary models and tools, but Nvidia has a stranglehold on the AI hardware market. Nvidia is one of the few companies that can provide hardware and software stacks and services that make practical implementations of machine learning possible.

The interest in Nvidia’s AI hardware comes amid a rush to tap into the promise of generative AI. OpenAI’s ChatGPT demonstrated the capabilities of AI in the form of a chatbot, but new models are now emerging for vertical markets that include health care, insurance, and finance.

This article first appeared on HPCwire.