Explaining AI Decisions to Your Customers: IBM Toolkit for Algorithm Accountability

IBM has introduced a toolkit of algorithms to enable interpretability and explainability of machine learning models – i.e., algorithms for understanding algorithms (have we mentioned lately that AI is a bit complicated?). In simpler terms, IBM’s AI Explainability 360 is designed to help AI users address customers’ (such as bank or insurance company customers) concerns about whether AI has treated them fairly and without bias.

“Understanding how things work is essential to how we navigate the world around us and is essential to fostering trust and confidence in AI systems,” said Aleksandra Mojsilovic, IBM Fellow, IBM Research, in a blog. “Further, AI explainability is increasingly important among business leaders and policy makers,” she said, citing an IBM Institute for Business Value survey finding that 68 percent of business leaders believe customers will demand more explainability from AI in the next three years.

One means of addressing AI trust from an internal perspective (i.e., overcoming managers’ reluctance to rely on AI recommendations) is by integrating AI specialists and data scientists into the larger IT and business culture of the organization (see “How to Overcome the AI Trust Gap: A Strategy for Business Leaders”).

IBM’s AI Explainability 360, on the other hand, focuses on explaining AI-based decisions to customers, and the key, according to Mojsilovic, is variety. Just as a good teacher, knowing that we all learn differently, expresses a concept in several ways, fostering an understanding of AI requires explaining its decisions in many ways as well.

“To provide explanations in our daily lives, we rely on a rich and expressive vocabulary: we use examples and counterexamples, create rules and prototypes, and highlight important characteristics that are present and absent,” she said. “When interacting with algorithmic decisions, users will expect and demand the same level of expressiveness from AI. A doctor diagnosing a patient may benefit from seeing cases that are very similar or very different. An applicant whose loan was denied will want to understand the main reasons for the rejection and what she can do to reverse the decision. A regulator, on the other hand, will not probe into only one data point and decision, she will want to understand the behavior of the system as a whole to ensure that it complies with regulations. A developer may want to understand where the model is more or less confident as a means of improving its performance. As a result, when it comes to explaining decisions made by algorithms, there is no single approach that works best…. The appropriate choice depends on the persona of the consumer and the requirements of the machine learning pipeline.”
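The idea of explaining a decision with "cases that are very similar or very different" can be illustrated with a toy case-based lookup. The sketch below is plain Python, not the toolkit's API: the loan history, feature choice, and the functions `distance` and `nearest_by_outcome` are all hypothetical. It scales each feature to a common range and returns, for each past outcome, the closest historical case, so an applicant sees both a similar denial and the nearest approval.

```python
import math

# Hypothetical loan history: (income in $k, debt-to-income ratio, years employed), outcome
HISTORY = [
    ((85.0, 0.20, 6.0), "approved"),
    ((42.0, 0.45, 1.0), "denied"),
    ((60.0, 0.30, 3.0), "approved"),
    ((38.0, 0.50, 0.5), "denied"),
    ((55.0, 0.40, 2.0), "denied"),
    ((70.0, 0.25, 8.0), "approved"),
]


def _ranges(rows):
    # Min and max of each feature column, used for min-max scaling
    return [(min(col), max(col)) for col in zip(*rows)]


def distance(a, b, ranges):
    # Euclidean distance after scaling each feature by its range,
    # so income (in thousands) does not swamp a ratio between 0 and 1
    total = 0.0
    for x, y, (lo, hi) in zip(a, b, ranges):
        span = (hi - lo) or 1.0
        total += ((x - y) / span) ** 2
    return math.sqrt(total)


def nearest_by_outcome(query, history):
    """Return {outcome: (distance, features)} for the closest past case of each outcome."""
    ranges = _ranges([features for features, _ in history])
    best = {}
    for features, outcome in history:
        d = distance(query, features, ranges)
        if outcome not in best or d < best[outcome][0]:
            best[outcome] = (d, features)
    return best


if __name__ == "__main__":
    for outcome, (d, case) in nearest_by_outcome((50.0, 0.42, 1.5), HISTORY).items():
        print(f"closest {outcome} case: {case} (distance {d:.3f})")
```

For the hypothetical applicant `(50.0, 0.42, 1.5)`, the closest denial is `(55.0, 0.40, 2.0)` and the closest approval is `(60.0, 0.30, 3.0)`, which points at debt-to-income ratio as a visible difference. Range scaling is a deliberately simple choice; a real system would need a distance that reflects domain knowledge.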

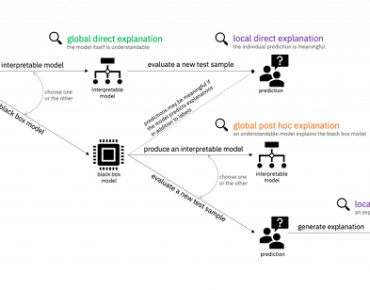

Let’s say a machine learning system recommends that a bank customer be turned down for a car loan. A good explanation is critical to retaining the customer’s goodwill and to allaying potential anger over assumed bias or other forms of unfairness. AI Explainability 360 works through a Socratic process of answering questions so that the loan officer, when meeting with the customer, is armed with “case-based reasoning, directly interpretable rules, and post hoc local and global explanations” and can explain the bank’s (i.e., the algorithm’s) justification that begins with:

The toolkit also offers tutorials on how to explain “high-stakes” applications, such as clinical medicine, healthcare management and human resources, and documentation that guides the practitioner on choosing an appropriate explanation method.

IBM is open sourcing the toolkit “to help create a community of practice for data scientists, policy makers and the general public that need to understand how algorithmic decision making affects them,” Mojsilovic said. The initial release contains eight algorithms created by IBM Research, and includes metrics from the community that “serve as quantitative proxies for the quality of explanations.” Beyond the initial release, IBM will encourage contributions of other algorithms from the research community.

The toolkit’s decision tree includes an algorithm called Boolean Classification Rules via Column Generation, a scalable method of directly interpretable machine learning that won the inaugural FICO Explainable Machine Learning Challenge, according to Mojsilovic. A second algorithm, the Contrastive Explanations Method, is a local post hoc method that addresses what Mojsilovic called the most important consideration of explainable AI “that has been overlooked by researchers and practitioners: explaining why an event happened not in isolation, but why it happened instead of some other event.”
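To make the "why this outcome instead of another" question concrete, here is a deliberately simplified, hypothetical sketch for the car-loan scenario. It is not the Contrastive Explanations Method, which is considerably more involved; it swaps in a brute-force search that, for each feature of a denied applicant, finds the smallest single-feature change (in fixed steps) that would have turned the denial into an approval. The linear score, weights, threshold, step sizes, and function names are all assumptions made for illustration.

```python
# Hypothetical linear credit score: approve when score >= THRESHOLD
WEIGHTS = {"income_k": 0.04, "debt_to_income": -4.0, "years_employed": 0.2}
BIAS = 0.0
THRESHOLD = 1.0

# Hypothetical (step size, maximum number of steps) for changes an applicant could make
STEPS = {"income_k": (1.0, 50), "debt_to_income": (-0.01, 40), "years_employed": (0.5, 20)}


def score(applicant):
    return BIAS + sum(WEIGHTS[name] * value for name, value in applicant.items())


def decision(applicant):
    return "approved" if score(applicant) >= THRESHOLD else "denied"


def contrastive_changes(applicant):
    """For a denied applicant, return {feature: smallest single-feature change that flips
    the decision to 'approved'}. Features that cannot flip it within the limits are omitted."""
    if decision(applicant) == "approved":
        return {}
    changes = {}
    for name, (step, max_steps) in STEPS.items():
        for k in range(1, max_steps + 1):
            candidate = dict(applicant)
            candidate[name] = applicant[name] + k * step  # change only this one feature
            if decision(candidate) == "approved":
                changes[name] = round(k * step, 2)
                break
    return changes


if __name__ == "__main__":
    applicant = {"income_k": 45.0, "debt_to_income": 0.45, "years_employed": 1.25}
    print(decision(applicant), contrastive_changes(applicant))
```

For the hypothetical applicant shown, the search reports that the loan would have been approved with $19k more income, a debt-to-income ratio 0.19 lower, or 4 more years of employment. That is the kind of answer the rejected applicant in Mojsilovic's example wants: what would have had to be different. Changing one feature at a time keeps the explanation easy to read, at the cost of missing cheaper combinations of changes.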