Cisco Targets Hyperscale With Modular UCS Iron

Five years ago, Cisco Systems got out in front of the pack with converged server and switching and a unified Ethernet fabric for server and storage traffic in its Unified Computing System blade servers. The company eventually added rack servers so it could handle workloads that needed more local memory and disk, and with the launch of the UCS M-Series modular servers today, the company is taking on hyperscale workloads and the custom server makers who tend to build the machines that run them.

Cisco has fought hard to get into the server side of the datacenter business, and has done better than perhaps many had thought it could do against IBM, Hewlett-Packard, Dell, and others in the core, general-purpose systems market. While that general-purpose market is shrinking as customers deploy virtualization to drive up utilization on their machines or shift workloads to clouds, which are just virtualized machines someone else owns and operates, the market for systems aimed at hyperscale customers, as well as at specific kinds of modeling and simulation workloads that can be run on similar iron, is exploding. The demand is choppy on a quarterly basis because the deals are mostly large – just like the supercomputing business – and often tied to specific processor announcements from Intel.

The UCS business based on blade and rack machines has grown to have a $3 billion annual run rate and has 36,500 unique customers. More than three-quarters of the Fortune 500 have UCS iron of some sort running in their datacenters and Cisco has more than 3,600 channel partners helping push its iron. But growth is slowing and Cisco has taken just about all of the share it can away from the tier one server makers that have been its primary rivals. To grow, Cisco has to expand into new markets and that means taking on original design manufacturers (ODMs) located in China and Taiwan, tough competitors like Supermicro that have a server for just about every need, and the tier one server makers like HP and Dell that do custom server engagements for hyperscale customers as well as making general-purpose machines.

It is with this in mind that Cisco has created the M-Series modular servers. Todd Brannon, director of product marketing for Cisco's Unified Computing System line, says that this is the biggest rollout since the original UCS launch back in March 2009. He says that while the company is well aware of the need to supply scale-up systems for in-memory processing, it sees the need for scale-out systems – what he called enterprise grid or enterprise HPC – in the financial services, oil and gas, electronic design automation, and weather modeling sectors growing much faster. "We just see the increasing use of distributed computing, which is very different from heavy enterprise workloads, where you put many applications in virtual machines on a server node," explains Brannon. "This is about one application spanning dozens, hundreds, or thousands of nodes."

The distinction between enterprise and hyperscale is a bit more complicated, but there is no question that to be a player in the hyperscale arena, Cisco needs to build a new kind of system that reflects the very different needs of the applications that run at hyperscale.

In the enterprise, customers have hundreds to thousands of applications, and while many of them are multi-tiered, running replicas of Web or application tiers and mirrored databases, it is generally one application per server. To virtualize these applications, a hefty system with lots of cores is outfitted with a server virtualization hypervisor, which provides a kind of isolation between the different tiers of the application as well as a rudimentary kind of resource control, and multiple applications are run side-by-side on a single box. With hundreds of boxes you can support thousands of applications. With hyperscale workloads, the customers tend to have tens of applications, not thousands, and they are generally not virtualized – at least not with a heavy hypervisor but perhaps with lighter weight software containers. These applications are parallel in nature and are designed to run over multiple nodes because customers need the apps to scale and because they want resiliency to be guaranteed up in the software stack and in the redundancy of the data, not in the redundancy of hardware inside of a physical server trying to protect a single copy of the data and application against all failure. If a node fails in a Hadoop, Memcached, or NoSQL data store cluster, the application should heal around the node failure because data is replicated across multiple nodes.

With the M-Series machines, Cisco is not just creating yet another microserver platform aimed at scale-out workloads, but is actually doing a bit of engineering.

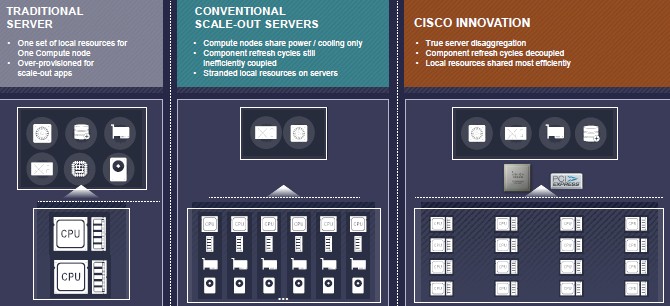

"If you take the covers off and look at a server today, whether it is a rack server or a blade server or even one of the new density optimized machines, what you have is fully conjoined, fixed subsystems," Brannon explains to EnterpriseTech. "You have got a processor combined with a RAID or non-RAID storage controller and PCI-Express and Ethernet I/O, and these are all conjoined. We have broken apart the pieces."

The resulting machine is a disaggregated server, something that the folks at the Open Compute Project and Intel have been talking about for a while. Cisco has actually done it, and it is using the third generation of its homemade "Cruz" ASIC, which provides the virtual interface cards in the UCS blade servers, as a means of linking processor/memory components to other elements of the server. In the UCS blade chassis, that Cruz chip creates two 40 Gb/sec ports between server nodes and the switch embedded in the chassis (which are linked by a fabric extender) that can be carved up into as many as 256 virtual NICs for either bare metal or virtual machine workloads running on the server nodes. The local fabric implemented by the Cruz chip supports network and storage protocols, specifically Ethernet for networking and PCI-Express for storage. The compute sleds have nothing but Xeon processors and their memory, and with the remaining components of a server costing about 30 percent of the price of a server, when customers now go to upgrade compute, they will be able to leave their storage and networking in place.

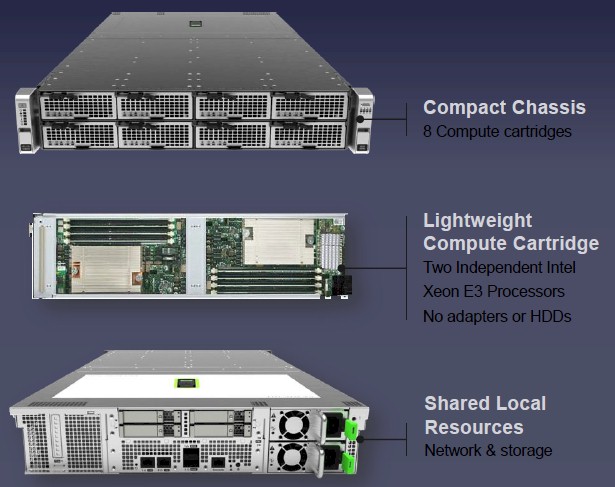

The M-Series chassis has eight compute sleds, what Cisco is calling cartridges. Each cartridge has two "Haswell" family Xeon E3-1200 v3 processors, each comprising a single compute node. These are not to be confused with the Xeon E5-1600 v3 or E5-2600 v3 processors that are widely expected to debut from Intel at its Intel Developer Forum next week, but rather the entry Haswell chips that Intel has had in the field since June 2013.

The first compute node available for the M-Series modular machines is the M142 node, and it supports Xeon E3-1200 v3 processors running at 1.1 GHz (two-core), 2.0 GHz (four-core), and 2.7 GHz (four-core). There are many more Xeon E3 v3 processors available from Intel than this. You can't mix and match processor types within a cartridge, but you can mix and match within an enclosure. Each node has four DDR3 memory slots and can support up to 32 GB of main memory using 8 GB sticks and 64 GB using 16 GB sticks.

The back of the M-Series enclosure, technically known as the M4308, has room for four 2.5-inch solid state disks, which are addressable and shared by all of the nodes. Cisco is supporting a mix of flash types and SSD capacities, ranging from 240 GB up to 1.6 TB, for these storage bays. The chassis also has room for a single, shared PCI-Express 3.0 x8 device. It has to be a half-height, half-width form factor and can consume no more than 25 watts. In the future, the chassis will support the addition of a PCI-Express 3.0 device in the eighth cartridge bay and storage cartridges in bays three and four if those four SSDs are not enough local storage. SanDisk Fusion ioMemory PCI-Express flash cards will be supported in this PCI cartridge when it becomes available. The chassis has two 1,400 watt power supplies, which are hot-swappable, and you don't have to add the second one if you don't want to.

All sixteen nodes in the system are linked to each other over the Cruz fabric, which also creates two 40 Gb/sec Ethernet ports (capable of supporting Fibre Channel over Ethernet out to storage as well as raw Ethernet for other kinds of traffic and iSCSI storage) that are shared by the sixteen nodes. As before, these dual 40 Gb/sec ports coming out of the M-Series enclosure can be virtualized and carved up and allocated to each of the nodes independently. The M-Series rack includes two UCS 6248 fabric interconnects (similar to a top of rack switch) that can link up to 80 nodes; the M-Series tops out at 320 nodes in a single UCS Manager domain. The UCS Central software, which federates UCS Managers into one uber-manager, can span up to 10,000 nodes. You would use top-of-rack Nexus 5000 or end-of-row Nexus 7000 or Nexus 9000 switches to create clusters larger than 320 nodes. (You cannot daisy chain the boxes together and create a mesh network of Cruz chips, although that would be a neat thing.)
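The scaling limits quoted above reduce to a little arithmetic, sketched here in Python. The constants come from the figures in this article; the function names are illustrative only, and the even split of uplink bandwidth is an assumption made for the sake of the example (the ports can be carved up and allocated per node however the administrator chooses).

```python
import math

# Figures quoted in the article.
CARTRIDGES_PER_CHASSIS = 8
NODES_PER_CARTRIDGE = 2
UPLINKS_PER_CHASSIS = 2
UPLINK_GBPS = 40
MAX_NODES_PER_DOMAIN = 320      # one UCS Manager domain
MAX_NODES_UCS_CENTRAL = 10_000  # federated by UCS Central


def nodes_per_chassis() -> int:
    """Compute nodes in one M-Series enclosure."""
    return CARTRIDGES_PER_CHASSIS * NODES_PER_CARTRIDGE


def chassis_per_domain() -> int:
    """Enclosures needed to fill one UCS Manager domain."""
    return MAX_NODES_PER_DOMAIN // nodes_per_chassis()


def even_share_gbps() -> float:
    """Uplink bandwidth per node, ASSUMING an even split across all nodes."""
    return UPLINKS_PER_CHASSIS * UPLINK_GBPS / nodes_per_chassis()


def domains_needed(total_nodes: int) -> int:
    """Full or partial UCS Manager domains required to hold total_nodes."""
    return math.ceil(total_nodes / MAX_NODES_PER_DOMAIN)


if __name__ == "__main__":
    print("nodes per chassis:", nodes_per_chassis())
    print("chassis per domain:", chassis_per_domain())
    print("even uplink share (Gb/sec per node):", even_share_gbps())
    print("domains for UCS Central maximum:", domains_needed(MAX_NODES_UCS_CENTRAL))
```

Under these assumptions, a fully populated domain is 20 enclosures, and each node averages 5 Gb/sec of uplink bandwidth if the two 40 Gb/sec ports are shared evenly.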

The M-Series cartridges will no doubt support the latest Haswell Xeon E5-2600 v3 processors when they become available, although Brannon would not confirm this, and it seems likely that various forms of accelerators will also be options for the cartridges, linking to X86 processors over the Cruz fabric.

The M-Series chassis fits into a standard 19-inch rack. With two UCS 6248 fabric interconnects and five enclosures with a total of 80 Xeon E3-1275L v3 nodes – with a total of 320 cores and 2.5 TB of memory spread across the nodes – Cisco is charging $263,832, or about $3,298 per node. This includes licenses to the UCS Manager software. So call it $1 million for a full rack of 320 nodes, with 1,280 cores and 10 TB of memory. The M-Series modular machines will be available in December, and support the latest releases of Microsoft Windows Server, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, and VMware ESXi.
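The pricing arithmetic above can be checked with a short Python sketch. The price and node count are the figures quoted here; four cores and 32 GB per node are assumptions inferred from the quoted totals (320 cores and 2.5 TB across 80 nodes). Scaling the 80-node price linearly to a 320-node rack is only a rough estimate, not a Cisco quote, since shared components may not scale that way.

```python
# Figures quoted in the article for the 80-node configuration.
BUNDLE_PRICE_USD = 263_832
BUNDLE_NODES = 80

# Assumptions inferred from the quoted totals (320 cores, 2.5 TB over 80 nodes).
CORES_PER_NODE = 4
MEMORY_GB_PER_NODE = 32


def summarize(nodes: int) -> dict:
    """Scale the 80-node figures linearly to `nodes` (a rough estimate only)."""
    return {
        "price_usd": BUNDLE_PRICE_USD * nodes / BUNDLE_NODES,
        "price_per_node_usd": BUNDLE_PRICE_USD / BUNDLE_NODES,
        "cores": nodes * CORES_PER_NODE,
        "memory_tb": nodes * MEMORY_GB_PER_NODE / 1024,
    }


if __name__ == "__main__":
    for n in (80, 320):
        print(n, summarize(n))
```

On these assumptions the 320-node estimate comes to $1,055,328, consistent with the round $1 million figure.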

In addition to the M-Series modular server, Cisco is also rolling out the UCS C3160 rack server, which is a two-socket machine based on the current "Ivy Bridge" Xeon E5-2600 v2 chips from Intel. The machine has eight memory slots per socket and the 4U rack chassis has room for 56 top-loading 3.5-inch SAS drives. The back of the enclosure has room for four more drives, for a total of 60 drives. With 4 TB disks that gives you 240 TB of capacity in 4U, and with 6 TB drives, which are available from a number of different vendors, you can push that up to 360 TB. The UCS C3160 is being positioned as the building block for large scale content storage and data repositories, distributed databases, distributed file systems, and media streaming and transcoding applications. Think OpenStack plus Ceph or Hadoop and you have the right idea. The C3160 has four 1,050 watt power supplies and supports just about every kind of RAID level you can think of on its embedded controller.
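Raw capacity for a chassis like this is just the bay count times the drive size, as the minimal Python sketch below shows. The function name is illustrative; the bay counts are the ones quoted above, and the result is raw capacity before any RAID or replication overhead.

```python
def raw_capacity_tb(drive_tb: float, top_bays: int = 56, rear_bays: int = 4) -> float:
    """Raw capacity in TB for a chassis with every drive bay populated."""
    return (top_bays + rear_bays) * drive_tb


if __name__ == "__main__":
    # All 60 bays filled with 6 TB drives.
    print(raw_capacity_tb(6))
    # Only the 56 top-loading bays filled with 6 TB drives.
    print(raw_capacity_tb(6, rear_bays=0))
```

Usable capacity will be lower once a RAID level or a replicated file system such as Ceph or HDFS is layered on top.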

The UCS C3160 server will be available in October. With two Xeon E5-2620 v2 processors, 128 GB of memory, two 120 GB SSDs for storage platform boot, and four power supplies, the system costs $35,396. That price does not include any disk drives.

Cisco will obviously be updating the rest of its C-Series rack servers and B-Series blade servers with the Haswell Xeon E5 v3 revamp. Stay tuned.