Domino Expands Generative AI Capabilities with AI Gateway and Vector Data Access

March 8, 2024 -- Generative AI (GenAI) promises to transform many business processes, deliver productivity gains, and unlock significant enterprise value. Companies are bullish on the prospects for GenAI, especially text applications that leverage Large Language Models (LLMs).

LLMs have revolutionized many business functions and will continue to do so. However, challenges to broader adoption remain. Various surveys indicate only 10% - 15% of companies reported having GenAI applications in production. Many are still investigating or piloting projects. Concerns remain around managing the risk and costs associated with production-scale GenAI applications.

Today, Domino is introducing two new features to help enterprises promote more GenAI projects from pilots to production responsibly and cost-effectively. Here's how.

Guardrails for LLM Access with Domino AI Gateway

Your team wants access to the latest SaaS and open-source LLMs. They know LLMs will help them be more productive and innovative. However, opening access can introduce risk unless you implement appropriate user access guardrails. It would help to have better cost controls for generative model usage. Finally, when a newer, better, or cheaper LLM emerges, switching to it without rewriting your code would be nice.

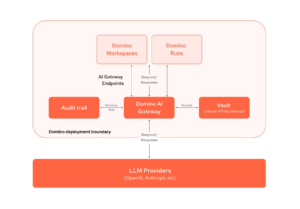

The new Domino AI Gateway enables enterprises to do just that. With AI Gateway, you can closely manage your team's access to commercial LLMs from OpenAI, Anthropic, and others and control which LLMs each user can see and use. Domino AI Gateway enhances security by holding the LLM API keys itself, so keys never appear in source code and are never shared between users. Domino AI Gateway also helps control costs by tracking LLM API token usage. That allows you to monitor GenAI expenses against your budget and avoid unwelcome surprises.
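To make the budget idea concrete, here is a minimal sketch of how token usage could be turned into an estimated spend per user and checked against a budget. It does not use any Domino API: the record fields, the `PRICE_PER_1K` rates, and the function names are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens (an assumption for this sketch);
# real prices vary by provider and model.
PRICE_PER_1K = {"prompt": 0.5, "completion": 1.5}


def cost_by_user(usage_records):
    """Sum the estimated spend per user from token-usage records."""
    totals = defaultdict(float)
    for rec in usage_records:
        cost = (
            rec["prompt_tokens"] / 1000 * PRICE_PER_1K["prompt"]
            + rec["completion_tokens"] / 1000 * PRICE_PER_1K["completion"]
        )
        totals[rec["user"]] += cost
    return dict(totals)


def over_budget(totals, budget_usd):
    """Return the users whose estimated spend exceeds the budget, sorted by name."""
    return sorted(user for user, cost in totals.items() if cost > budget_usd)


# Illustrative records only; the field names are assumptions, not a real log format.
records = [
    {"user": "alice", "prompt_tokens": 2000, "completion_tokens": 1000},
    {"user": "alice", "prompt_tokens": 1000, "completion_tokens": 0},
    {"user": "bob", "prompt_tokens": 1000, "completion_tokens": 0},
]
totals = cost_by_user(records)
flagged = over_budget(totals, budget_usd=1.0)
```

Because prompt and completion tokens are usually priced differently, the sketch keeps the two rates separate rather than multiplying a single total by one price.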

The Domino AI Gateway can help your teams access large language models securely and responsibly. It provides enterprise security to ensure that only approved users can see and use model endpoints from commercial providers.

AI Gateway runs as an internal service in Domino. Domino integrated the MLflow Deployments Server into its platform as AI Gateway's foundation. Beyond its governance and security capabilities, Domino now incorporates LLM usage metadata into its audit trail capability. AI Gateway automatically records all model access events, further expanding enterprise compliance with security and audit policies.

Domino users also benefit from MLflow Deployments Server's API. The API lets you write your prompt code once and choose which LLM to direct it to. When you find another LLM that meets your needs, all that is necessary is to configure a new MLflow LLM endpoint. You then update a single line of prompt code, and the new model is online.
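The "write once, switch with one line" pattern might look like the sketch below. It assumes the MLflow Deployments client (`mlflow.deployments.get_deploy_client` and its `predict` method) and an OpenAI-style chat response shape. The endpoint name `chat-gpt4` and the `DEPLOYMENTS_TARGET_URI` environment variable are hypothetical placeholders, not Domino's documented configuration.

```python
import os


def chat_inputs(question):
    """Build an OpenAI-style chat payload for a chat endpoint."""
    return {"messages": [{"role": "user", "content": question}]}


def ask(client, endpoint, question):
    """Send the same prompt code to whichever endpoint name is passed in."""
    response = client.predict(endpoint=endpoint, inputs=chat_inputs(question))
    # Assumes an OpenAI-compatible chat response; adjust if your server differs.
    return response["choices"][0]["message"]["content"]


# Switching models means changing only this line (hypothetical endpoint name).
ENDPOINT = "chat-gpt4"

# Only runs against a real server when the (hypothetical) URI variable is set.
if __name__ == "__main__" and os.environ.get("DEPLOYMENTS_TARGET_URI"):
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client(os.environ["DEPLOYMENTS_TARGET_URI"])
    print(ask(client, ENDPOINT, "Summarize the key risks of GenAI adoption."))
```

Keeping the endpoint name in a single constant is what makes the swap a one-line change: the payload construction and response handling stay untouched.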

In summary, with Domino AI Gateway, enterprises can:

- Govern LLM access throughout the GenAI application lifecycle.

- Manage user access and monitor LLM queries and associated costs.

- Enable teams to responsibly experiment with building GenAI applications using leading-edge SaaS LLM services.

- Regain control and choice over LLM providers and easily mix and match models based on your needs.

Enhance Generative AI Reliability with Vector Databases and RAG

Retrieval Augmented Generation (RAG) is a popular approach to building GenAI applications for use cases like enterprise Q&A and chatbots. RAG requires access to vector databases, which store vector representations of your corporate documents. At query time, the application retrieves the most relevant content and passes it to the LLM, which uses it to tailor its results to your needs.
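The retrieval step can be sketched in a few lines of plain Python. This is a toy illustration, not Domino's or Pinecone's implementation: the in-memory `STORE`, the three-dimensional vectors, and the function names are all assumptions. A real application would get its vectors from an embedding model and delegate the similarity search to a vector database.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, store, top_k=2):
    """Return the texts of the top_k documents most similar to the query vector."""
    ranked = sorted(store, key=lambda d: cosine(query_vec, d["vector"]), reverse=True)
    return [d["text"] for d in ranked[:top_k]]


def build_prompt(question, passages):
    """Assemble a prompt that grounds the LLM in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy vectors for illustration; real embeddings have hundreds of dimensions.
STORE = [
    {"text": "Refunds are issued within 14 days.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Support is open 9am to 5pm.", "vector": [0.1, 0.9, 0.0]},
    {"text": "Offices are closed on public holidays.", "vector": [0.0, 0.2, 0.9]},
]

passages = retrieve([1.0, 0.0, 0.0], STORE, top_k=1)
prompt = build_prompt("How long do refunds take?", passages)
```

The resulting prompt is what would be sent to the LLM, so the model answers from the retrieved passage rather than from its general training data alone.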

To accelerate RAG application development, Domino is announcing a new Domino Pinecone Vector Database connection. The data connector enables easy, secure access to your vectorized content and helps you keep your knowledge repository up to date without significant effort. Connect directly to vectorized data from within your Domino workspace notebook. Copy and paste the boilerplate code and use your vector data in your RAG application quickly and easily.

Like other Domino Data Sources, the Pinecone data source offers central credential management, secure storage, audit trails, and data lineage tracking. These features expand Domino's AI governance capabilities to RAG-based generative AI applications.

Pinecone is the first vector database supported through Domino's Data Sources, with Qdrant and others following soon.

Domino for Enterprise Generative AI

These two exciting new features join a portfolio of Domino generative AI features and platform capabilities introduced in 2023.

AI Hub was introduced in 2023 and is now available with various generative AI templates. AI Hub is a repository of best practices and pre-built solutions that help enterprises start quickly with generative AI projects and other machine learning projects. You can easily share these templates and customize them to your requirements. Projects come with scripts, notebooks, and software packages preinstalled. That gives your team everything they need to quickly build generative AI applications.

GenAI templates in Domino AI Hub address common use cases, including:

- Enterprise Q&A: Question-and-answer applications with RAG (Retrieval Augmented Generation) using the Pinecone database.

- Text summarization: Summarize product feedback and email responses with LangChain and AWS Bedrock.

- Fine Tuning: Fine-tune open-source models like Llama 2 and Falcon.

- Chatbots: Build LLM chatbots with Streamlit and OpenAI.

In addition to the GenAI capabilities above, Domino offers:

- AI Coding Assistants. Code generation tools give you an on-demand AI coding assistant to help you analyze and develop code more efficiently.

- Fine-tuning Wizard. Jupyter plugin that generates code to accelerate common GenAI development tasks for fine-tuning. It can generate transparent, modifiable code to access and fine-tune foundation models in three simple steps.

- Data Access. Domino’s data access layer lets data scientists access the vast quantities of disparate data required for generative AI from a central interface.

- Responsible GenAI. Govern GenAI with model reviews and validation with auditable, reproducible projects. Register models and customize the model review and validation process to ensure responsible practices.

- Scalable Deployment. Deploy and monitor models, including GenAI LLMs and apps, on-premise or in the cloud.

- Scale-Out Compute. Train and deploy generative AI and apps with high-performance, low-latency computing, such as autoscaling Ray clusters.

- Hybrid and multi-cloud. Run AI workloads in any cloud or on-premise environment to reduce costs, simplify scaling, and protect data.

- GenAI Cost Controls. FinOps lets you monitor and reduce AI costs through budgeting, alerts, and efficient cost allocation.

With Domino, enterprises can manage GenAI projects end-to-end. Domino helps them overcome the barriers to bringing GenAI initiatives from pilot to production. Domino delivers enterprise GenAI built by everyone, scalable by design, and responsible by default. Learn more about how to make AI and GenAI accessible, manageable, and beneficial for all.

Source: Tim Law, Domino Data Lab