Rules Approved for EU AI Act

The European Union’s AI Act took a big step toward becoming law today when policymakers successfully hammered out rules for the landmark regulation. The AI Act still requires votes from Parliament and the European Council before becoming law, after which it would go into effect in 12 to 24 months.

The AI Act has been in the works since 2018, the same year that the EU’s General Data Protection Regulation (GDPR) went into effect, as European lawmakers seek to protect the continent’s residents from the negative impacts of artificial intelligence.

As we’ve previously reported, the new law would create a common regulatory and legal framework for the use of AI technology, including how it’s developed, what companies can use it for, and the consequences of failing to adhere to requirements.

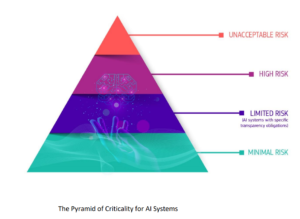

Earlier drafts of the law categorize AI by use case in a four-level pyramid. At the bottom are systems deemed minimal risk, such as search engines, which would be free from regulation. Above that are systems with limited risk, such as chatbots, which would be subject to certain transparency requirements.

Organizations would need to gain approval before implementing AI in the high-risk category, which would include self-driving cars, credit scoring, law enforcement use cases, and safety components of products like robot-assisted surgery. The government would set minimum safety standards for these systems and maintain a database of all high-risk systems.

The EU Act would completely ban certain AI uses deemed to pose an unacceptable risk, such as real-time biometric identification systems, social scoring systems, and applications designed to manipulate the behavior of people or “specific vulnerable groups.”

The launch of ChatGPT one year ago and the rise of generative AI in 2023 have solidified regulators’ desire to shield people from the harmful aspects of AI. According to an article in the New York Times, EU policymakers adapted the AI Act to account for the emergence of GenAI by adding requirements for large AI model creators “to disclose information about how their systems work and to evaluate for ‘systemic risk.’”

According to the Times, policymakers finally agreed on AI Act rules after three days of negotiations, including a marathon 22-hour session on Wednesday. It’s not clear how soon a draft of the finalized AI Act will be available to the public. If it’s approved by the European Union’s legislative bodies, it would likely go into force one to two years later.

One area of the new law that had yet to be hammered out before this week’s rulemaking session was penalties. Violations of the GDPR can bring penalties of up to 4% of a company’s annual revenue or €20 million, whichever is greater.
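To make the GDPR ceiling concrete, here is a minimal Python sketch of the "whichever is greater" rule described above. It covers only the GDPR figures quoted in this article, not AI Act penalties, which had not been settled. The function name `gdpr_max_fine_eur` and the revenue figures are hypothetical, chosen purely for illustration.

```python
# Illustrative sketch only: models the GDPR ceiling quoted in the article
# (the greater of 4% of annual revenue or EUR 20 million).
# The function name and sample revenues are hypothetical.

GDPR_FLAT_CAP_EUR = 20_000_000  # flat ceiling of EUR 20 million
GDPR_REVENUE_PERCENT = 4        # 4% of annual revenue


def gdpr_max_fine_eur(annual_revenue_eur: float) -> float:
    """Return the maximum possible fine under the rule quoted above."""
    if annual_revenue_eur < 0:
        raise ValueError("annual revenue cannot be negative")
    revenue_based_cap = annual_revenue_eur * GDPR_REVENUE_PERCENT / 100
    # "Whichever is greater": the larger of the two ceilings applies.
    return max(revenue_based_cap, float(GDPR_FLAT_CAP_EUR))


if __name__ == "__main__":
    for revenue in (100_000_000, 2_000_000_000):
        cap = gdpr_max_fine_eur(revenue)
        print(f"Revenue EUR {revenue:,} -> maximum fine EUR {cap:,.0f}")
```

Under these assumptions, a company with €100 million in annual revenue faces the €20 million ceiling, because 4% of its revenue is only €4 million. For a company with €2 billion in revenue, the percentage-based ceiling of €80 million applies. The two ceilings are equal at €500 million in revenue.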

Editor's note: This article first appeared in Datanami.

Alex Woodie has written about IT as a technology journalist for more than a decade. He brings extensive experience from the IBM midrange marketplace, including topics such as servers, ERP applications, programming, databases, security, high availability, storage, business intelligence, cloud, and mobile enablement. He resides in the San Diego area.