Nvidia Expands Its AI Strategy With New Hugging Face Integration, AI Enterprise 4.0, and AI Workbench

Nvidia once staked its entire future on the promise of artificial intelligence, Nvidia CEO Jensen Huang told an audience at SIGGRAPH in Los Angeles this week.

“Twenty years after we introduced to the world the first programmable shading GPU, we introduced RTX at SIGGRAPH 2018 and reinvented computer graphics. You didn’t know it at the time, but we did, that it was a ‘bet the company’ moment,” Huang said during his keynote.

The RTX was Nvidia’s reinvention of the GPU, designed to unify computer graphics and artificial intelligence in order to make real-time ray tracing feasible: “It required that we reinvent the GPU, added ray tracing accelerators, reinvented the software of rendering, reinvented all the algorithms that we made for rasterization and programmable shading,” said Huang.

While the company was transforming computer graphics with AI, it was also reinventing the GPU for a brand new age of AI that had not fully come into view until recently. Continuing its progress in enabling AI development in this brave new world, Nvidia announced a flurry of new products and services at SIGGRAPH, including a Hugging Face partnership, an update to its AI Enterprise software, and a new developer toolkit called AI Workbench.

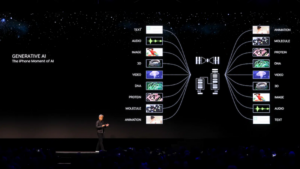

Generative AI Takes Center Stage

AI has become a household concept thanks to the explosive popularity of ChatGPT and similar generative AI models that are reshaping the tech landscape. Nvidia has been a critical player in this new market through its developer-focused technology.

“Nvidia is a platform company. We don’t build the end applications, but we build the enabling technology that allows companies like Getty, like Adobe, and like Shutterstock to do their work on behalf of their users,” said Nvidia’s VP of Enterprise Computing, Manuvir Das, in a press briefing.

Pre-trained foundation models like GPT-4 and Stable Diffusion can serve as a good starting point for building applications, but customization is key for businesses building their own AI models, Das said, noting that customization and fine-tuning go a long way in determining the efficacy and the output of a model.

Many foundation models are trained with large datasets of public data, leaving them prone to hallucinations and less accurate outputs. Using domain-specific data can vastly improve accuracy, which is a major priority for generative AI use cases in sectors like healthcare and financial services where every detail counts.

Das said Nvidia views leveraging generative AI as a three-step process. The first step is having the right foundation models, trained over months on large amounts of data. The second step is customization, which can be complex: “There are many, many techniques for how to customize, but essentially, you’re producing a model that is infused with domain data, relevant data, and examples, so it can do a much better job,” Das said. The third step is deployment: embedding the models into applications and services through an API to take them into production. Below are Nvidia's newest tools for this three-step process.
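
As a rough illustration of how the three steps hand off to one another, here is a deliberately toy Python sketch. It is an analogy only, not Nvidia's software: the "model" is a hypothetical lookup table (`ToyModel`), and `pretrain`, `customize`, and `deploy` are illustrative names invented for this example. It shows the structure Das describes: a general model, a derived model infused with domain data, and an API-style entry point that applications call.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyModel:
    """Hypothetical stand-in for a model: a lookup table of what it 'knows'."""
    name: str
    knowledge: dict[str, str] = field(default_factory=dict)


def pretrain(name: str, public_corpus: dict[str, str]) -> ToyModel:
    # Step 1: a foundation model built from broad, general-purpose data.
    return ToyModel(name=name, knowledge=dict(public_corpus))


def customize(base: ToyModel, domain_data: dict[str, str]) -> ToyModel:
    # Step 2: derive a new model "infused with domain data". Domain entries
    # override overlapping general ones; the base model is left untouched.
    return ToyModel(name=f"{base.name}-custom",
                    knowledge={**base.knowledge, **domain_data})


def deploy(model: ToyModel) -> Callable[[str], str]:
    # Step 3: expose the model to applications through a single API-style call.
    def answer(query: str) -> str:
        return model.knowledge.get(query, "I don't know")
    return answer


# Usage: the same question asked of the generic and the customized model.
base = pretrain("foundation", {"refund window": "varies by retailer"})
custom = customize(base, {"refund window": "30 days"})
generic_api = deploy(base)
custom_api = deploy(custom)
print(generic_api("refund window"))  # varies by retailer
print(custom_api("refund window"))   # 30 days
```

Real customization adjusts model weights rather than a table, but the separation is the point: the foundation model stays reusable, and each customized variant is deployed behind its own interface.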

Integration with Hugging Face

A new partnership will bring the AI resources of Nvidia’s DGX Cloud, its AI computing platform, to the popular open source machine learning platform Hugging Face.

Those developing large language models and other AI applications will have access to Nvidia's DGX Cloud AI supercomputing within the Hugging Face platform to train and tune advanced AI models. Hugging Face says over 15,000 organizations use its platform to build, train, and deploy AI models using open source resources, claiming its community has shared over 250,000 models and 50,000 datasets.

The DGX Cloud-powered service, available in the coming months, is Hugging Face’s new Training Cluster as a Service meant to simplify creating new and custom generative AI models using Nvidia’s software and infrastructure. Each instance on the DGX Cloud features eight Nvidia H100 or A100 80GB Tensor Core GPUs for a total of 640GB of GPU memory per node.
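
The per-node memory figure is simply the GPU count multiplied by the memory on each GPU:

$$
8 \times 80\ \text{GB} = 640\ \text{GB of GPU memory per node}
$$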

“People around the world are making new connections and discoveries with generative AI tools, and we’re still only in the early days of this technology shift,” said Clément Delangue, co-founder and CEO of Hugging Face, in a statement. “Our collaboration will bring Nvidia’s most advanced AI supercomputing to Hugging Face to enable companies to take their AI destiny into their own hands with open source and with the speed they need to contribute to what’s coming next.”

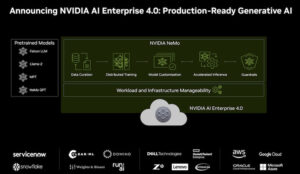

Nvidia AI Enterprise 4.0

The company also announced Nvidia AI Enterprise 4.0, the latest version of its enterprise platform for production AI that has been adopted by companies like ServiceNow and Snowflake.

"This is essentially the operating system of modern data science and modern AI. It starts with data processing, data curation, and data processing represents some 40, 50, 60 percent of the amount of computation that is really done before you do the training of the model," Huang said.

Enterprise 4.0 now includes several tools to help streamline generative AI deployment. One new addition is Nvidia NeMo, a framework the company launched last September that contains training and inferencing frameworks, guardrailing toolkits, data curation tools, and pretrained models.

Another new inclusion in AI Enterprise 4.0 is the Nvidia Triton Management Service, which automates the deployment of multiple Triton Inference Server instances in Kubernetes. Nvidia says the new service enables large-scale inference deployment through efficient hardware utilization. The service manages the deployment of Triton Inference Server instances with one or more AI models, allocates models to individual GPUs and CPUs, and efficiently collocates models by framework.
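
Nvidia has not detailed how Triton Management Service decides where each model runs, so the Python sketch below is a hypothetical placement heuristic, not TMS's algorithm. It illustrates the two ideas in the description: allocating models to individual GPUs within their memory limits, and collocating models that share a framework. The `Gpu` class, `place_models` function, model names, and sizes are all invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Gpu:
    gpu_id: int
    capacity_gb: float
    used_gb: float = 0.0
    frameworks: set[str] = field(default_factory=set)
    models: list[str] = field(default_factory=list)

    def fits(self, size_gb: float) -> bool:
        return self.used_gb + size_gb <= self.capacity_gb


def place_models(models: list[tuple[str, str, float]], gpus: list[Gpu]) -> dict[str, int]:
    """Assign (name, framework, size_gb) models to GPUs (hypothetical heuristic).

    Largest models go first. Among GPUs with enough free memory, prefer one
    already serving the same framework (collocation); otherwise pick the
    least-loaded GPU that fits.
    """
    placement: dict[str, int] = {}
    for name, framework, size in sorted(models, key=lambda m: m[2], reverse=True):
        candidates = [g for g in gpus if g.fits(size)]
        if not candidates:
            raise RuntimeError(f"no GPU has room for {name} ({size} GB)")
        same_framework = [g for g in candidates if framework in g.frameworks]
        pool = same_framework or candidates
        target = min(pool, key=lambda g: g.used_gb)  # ties go to the lower ID
        target.used_gb += size
        target.frameworks.add(framework)
        target.models.append(name)
        placement[name] = target.gpu_id
    return placement


# Usage: two 80 GB GPUs and four models built with two frameworks.
gpus = [Gpu(0, 80.0), Gpu(1, 80.0)]
models = [("llm-a", "pytorch", 40.0), ("llm-b", "tensorrt", 30.0),
          ("classifier", "pytorch", 10.0), ("detector", "tensorrt", 20.0)]
placement = place_models(models, gpus)
print(placement)
```

With these inputs, the PyTorch models end up together on GPU 0 and the TensorRT models together on GPU 1, each GPU using 50 GB. A production scheduler would also weigh factors such as request load and latency targets, which this sketch ignores.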

Nvidia AI Enterprise is supported on the company’s RTX workstations, which now offer three new Ada-generation GPUs: the RTX 5000, RTX 4500, and RTX 4000. The 48GB workstations can be configured with AI Enterprise or Omniverse Enterprise.

Nvidia AI Enterprise 4.0 will also be integrated into partner marketplaces, including AWS Marketplace, Google Cloud, and Microsoft Azure, as well as through Nvidia cloud partner Oracle Cloud Infrastructure, the company said.

AI Workbench: A New Developer Toolkit

Finally, Nvidia unveiled its AI Workbench, a toolkit for creating, testing, and customizing generative AI models on a PC or workstation and then scaling them to any datacenter, public cloud, or DGX Cloud.

There are hundreds of thousands of pretrained models now available, and finding the right frameworks and tools when building a custom model for a specific use case can be challenging. Nvidia says its new AI Workbench lets developers pull together all the necessary models, frameworks, software development kits, and libraries from open source repositories (and its own AI platform) into a unified developer toolkit.

Nvidia says its AI Workbench streamlines selecting foundation models, building a project environment, and fine-tuning these models with domain-specific data. Developers can customize models from repositories like Hugging Face and GitHub with this custom data and then share the models across multiple platforms.

“This Workbench is a collection of tools that make it possible for you to automatically assemble the dependent runtimes and libraries, the libraries to help you fine tune and guard rail, to optimize your large language model, as well as assembling all of the acceleration libraries, which are so complicated, so that you can run it very easily on your target device,” Huang said in his SIGGRAPH keynote.

For the many companies that are also placing large bets on generative AI, Das says the real power of Nvidia’s platform lies in its flexibility.

“What we really believe in at Nvidia is, once you produce the model, you can put it in a briefcase and take it with you wherever you want,” Das said. “And all you really need is the runtime from Nvidia that you can take with you so that you can run the model wherever you want to run it. And as you deploy the model, you want that software to be enterprise-grade so that you can bet your company on it.”