AMD Has a GPU to Rival Nvidia’s H100

If you have not been lucky enough to find Nvidia’s prized H100 GPU in the cloud or off the shelf, AMD has a challenger waiting in the wings that could be worth considering.

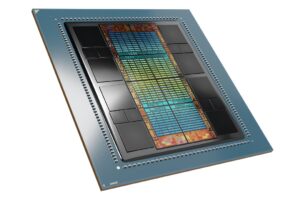

AMD announced the new MI300X GPU, which is targeted at AI and scientific computing. The GPU is being positioned as an alternative to Nvidia’s H100, which is in short supply because of the massive demand for AI computing.

“I love this chip, by the way,” said Lisa Su, AMD’s CEO, during a livestreamed presentation to announce new datacenter products, including new 4th Gen Epyc CPUs.

AMD is on track to sample MI300X in the next quarter. The GPU’s production will ramp up in the fourth quarter.

The MI300X is a GPU-only version of the previously announced MI300A supercomputing chip, which includes a CPU and GPU. The MI300A will be in El Capitan, a supercomputer coming next year to Lawrence Livermore National Laboratory. El Capitan is expected to surpass 2 exaflops of performance.

But to be sure, Nvidia’s H100 has a sound footing in datacenters that AMD may find hard to overcome. Google Cloud last month announced the A3 supercomputer, which has more than 26,000 Nvidia H100 GPUs, and Microsoft is using Nvidia GPUs to run BingGPT, the AI service it has built into its search engine. Oracle and Amazon also offer H100 GPUs through their cloud services. Nvidia’s market cap as of Tuesday stood at around $1 trillion, largely boosted by demand for its AI GPUs.

The MI300X has 153 billion transistors, and is a combination of 12 chiplets made using the 5-nanometer and 6-nanometer processes. AMD replaced three Zen4 CPU chiplets in the MI300A with two additional CDNA-3 GPU chiplets to create the GPU-only MI300X.

“For MI300X to address the larger memory requirements of large language models, we actually added an additional 64 gigabytes of HBM3 memory,” Su said.

The MI300X has 192GB of HBM3 memory, which Su said was 2.4 times more memory density than Nvidia’s H100. The SXM and PCIe versions of H100 have 80GB of HBM3 memory.

Su said the MI300X offered 5.2TBps of memory bandwidth, which she claimed was 1.6 times better than Nvidia’s H100 GPUs. That comparison was made to the SXM version of H100 GPU, which has a memory bandwidth of 3.35TBps.

However, Nvidia has the NVL version of H100 – which links up two GPUs via the NVLink bridge – that has an aggregate 188GB of HBM3 memory, which is close to the memory capacity of MI300X. It also has 7.8TBps bandwidth.

Su cherry-picked the performance results, but did not speak about the important benchmark – the overall floating point or integer performance results. AMD representatives did not provide performance benchmarks.

But Su said MI300A – which has the CPU and GPU – is eight times faster and five times more efficient compared to the MI250X accelerator, a GPU-only chip that is in the Frontier supercomputer.

AMD crammed more memory and bandwidth to run AI models in memory, which speeds up performance by reducing the stress on I/O. AI models typically pay a performance penalty when moving data back and forth between storage and memory as models change.

“We actually have an advantage for large language models because we can run large models directly in memory. What that does is for the largest models, it actually reduces the number of GPUs you need, significantly speeding up the performance, especially for inference, as well as reducing total cost of ownership,” Su said.

The GPUs can be deployed across standard racks with off-the-shelf hardware with minimal changes to the infrastructure. Nvidia’s H100 can be deployed in standard servers, but the company is pushing specialized AI boxes that combine its AI GPUs with other Nvidia technology such as its Grace Hopper GPUs, BlueField data processing units, or the NVLink interconnect.

AMD’s showcase for the new GPU is an AI training and inference server called the AMD Infinity Architecture Platform, which crams eight MI300X accelerators and 1.5TB of HBM3 memory. The server is based on Open Compute Project specifications, which means it is easy to deploy in standard server infrastructures.

The AMD Infinity Architecture Platform sounds similar to Nvidia’s DGX H100, which has eight H100 GPUs and 640GB of GPU memory, and overall 2TB of memory in a system. The Nvidia system provides 32 petaflops of FP8 performance.

The datacenter AI market is a vast opportunity for AMD, Su said. The market opportunity is about $30 billion this year, and will grow to $150 billion in 2027, she said.

“It’s going to be a lot because there’s just tremendous, tremendous demand,” Su said.

Nvidia has a major advantage over AMD in software, with its CUDA development stack and AI Enterprise suite helping in the development and deployment of AI tools. AMD executives spent a lot of time trying to address software development tools, and said its focus was on an open-source approach as opposed to Nvidia’s proprietary model with CUDA.

Like CUDA, AMD’s ROCm software framework was originally designed for high-performance computing, but is now being adapted for AI with libraries, runtimes, compilers, and other tools needed to develop run and tune AI models. ROCm supports open AI frameworks, models, and tools.

The CEO of AI services provider HuggingFace, Clem Delangue, said it was optimizing AI models being added to its repository to run on Instinct GPUs. About 5,000 new models were added to HuggingFace last week, and Huggingface is optimizing all those models for Instinct GPUs.

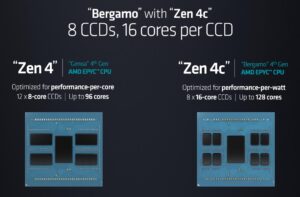

AMD also introduced new CPUs, including a new lineup of 4th Gen Epyc 97X4 CPUs codenamed Bergamo, which is the company’s first family of chips oriented for cloud computing.

Bergamo chips can be compared to Arm-based chips developed by Amazon and Ampere, which are deployed in datacenters to serve up cloud requests. The chips run applications as microservices – think cloud-native applications running on Google and Facebook servers – by breaking up code and distributing it among a vast number of cores.

The Bergamo chips have up to 128 cores based on the Zen4c architecture, which are broken up over eight compute chiplets. The top-line Epyc 9754 has 128 cores, runs 256 threads, and draws up to 360W of power. The chip has 256MB of L3 cache and runs at frequencies up to 3.10GHz. The mid-tier 9754S has similar specifications but runs only one thread per code. The final version Epyc 9734 has 112 cores, runs two threads per core, and draws 320 watts of power.

Meta is preparing to deploy Bergamo in datacenters as its next-generation high-volume computing platform.

“We’re seeing significant performance improvements with Bergamo over Milan on the order of two and a half times,” said Alexis Black Bjorlin, vice president of infrastructure at Meta, during an on-stage presentation.

AMD also announced chips codenamed 4th Gen Epyc chips Genoa-X with 3D V-Cache technology, employing up to 96 CPU cores. The chips will be available in the third quarter.

The fastest chip in the Genoa-X lineup is 9684X, which has 96 cores, supports 192 threads, and draws 400 watts of power. It has 1.1GB of L3 cache and runs at frequencies up to 3.7GHz. The other two SKUs, 9384X and 9184X have 32 and 16 cores respectively, 768MB of L3 cache, frequencies of 3.9Ghz and 4.2GHz respectively. The chips draw 320 watts of power.