Apple’s Homegrown Silicon Emerging in Company’s AI/ML Strategy

Apple's closed ecosystem and its in-house hardware and software strategy mean the company does not have to worry as much as Microsoft or Facebook about building AI factories stocked with a giant battalion of GPUs.

Apple did not talk about its machine learning strategy at last week's Worldwide Developers Conference, where the company revealed a new mixed-reality headset.

Instead, the company talked about how it was building real-world AI features into its devices. The references to AI homed in on one fact: the company is packing more on-device processing capability and transformer models into its devices to run ML applications.

Apple's control over its software and on-device silicon gives it an advantage in ML and AI delivery. The company is fine-tuning its AI software to its hardware capabilities, which reduces the load on its data centers, which would otherwise have to process raw input sent from devices.

"Considering Apple's investment in ML acceleration in Apple Silicon, the strategy appears to prioritize on-device processing, to minimize the amount of data Apple needs to process and store in cloud services," said James Sanders, principal analyst for cloud, infrastructure, and quantum at CCS Insights.

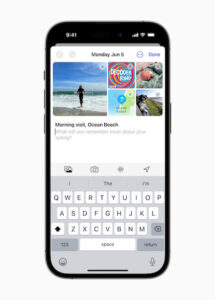

Journal uses on-device machine learning to create personalized suggestions to inspire a user’s journal entry, Apple says. (Source: Apple)

Compare that with Microsoft, which is arming its data centers with GPUs to deliver ML services to client devices that largely run on off-the-shelf hardware.

Apple is using a new transformer model and on-device ML in iOS 17 for features like autocorrect. Apple described the new autocorrect as "state of the art for word prediction, making autocorrect more accurate than ever."

There were also other examples of how Apple is building ML directly into applications.

When enabled, a new iPhone feature called Suggestions recommends memories to include in the Journal app. The on-device feature sounds similar to autocorrect on mobile devices, but with real-time text input fed into the on-device ML model, which then provides recommendations.

Apple's scene-stealing Vision Pro headset also uses on-device ML for videoconferencing features. Users can create an animated but realistic "digital persona" to represent them during videoconferences on the mixed-reality headset. It is one way to avoid showing other participants a person wearing a headset, which could look unflattering.

The technology uses advanced machine learning techniques to create the digital persona and simulate movement.

"The system uses an advanced encoder-decoder neural network to create your digital persona. This network was trained on a diverse group of thousands of individuals. It delivers a natural representation, which dynamically matches your facial and hand movement," said Mike Rockwell, vice president at Apple, during his WWDC presentation of the headset.

Apple also talked about how the homegrown M2 Ultra chip, which powers the outrageously expensive Mac Pro, can "train massive ML workloads, like large transformer models that the most powerful discrete GPU can't even process because it runs out of memory."
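The memory argument behind that claim comes down to simple arithmetic: a model's footprint is roughly its parameter count times the bytes stored per parameter, and training stores far more per parameter than inference does. The sketch below uses a common rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer, ignoring activations. The 7-billion-parameter model and the 24 GB discrete card are hypothetical figures chosen for illustration; 192 GB is the M2 Ultra's maximum unified memory configuration.

```python
# Rough memory arithmetic for the M2 Ultra claim (illustrative only).
GB = 1e9  # decimal gigabytes, as memory capacity is usually marketed

# Rule of thumb for mixed-precision training with Adam:
# 2 B fp16 weights + 2 B fp16 gradients + 4 B fp32 master weights
# + 4 B + 4 B for Adam's two moment estimates = 16 bytes per parameter.
# Activations and framework overhead are ignored, so real usage is higher.
TRAIN_BYTES_PER_PARAM = 16
INFER_BYTES_PER_PARAM = 2  # fp16 weights only

M2_ULTRA_MAX_GB = 192  # maximum unified memory configuration
DISCRETE_GPU_GB = 24   # hypothetical discrete-card capacity for comparison


def footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed to hold a model's per-parameter state."""
    return num_params * bytes_per_param / GB


def fits(num_params: float, bytes_per_param: int, capacity_gb: float) -> bool:
    """True if the estimated footprint fits within the given capacity."""
    return footprint_gb(num_params, bytes_per_param) <= capacity_gb


params = 7e9  # hypothetical 7-billion-parameter transformer
for label, bpp in [("inference", INFER_BYTES_PER_PARAM),
                   ("training", TRAIN_BYTES_PER_PARAM)]:
    need = footprint_gb(params, bpp)
    print(f"{label}: {need:.0f} GB | fits {DISCRETE_GPU_GB} GB card: "
          f"{fits(params, bpp, DISCRETE_GPU_GB)} | fits {M2_ULTRA_MAX_GB} GB M2 Ultra: "
          f"{fits(params, bpp, M2_ULTRA_MAX_GB)}")
```

Under these assumptions the model's weights (14 GB) fit on either device for inference, but training state (about 112 GB) overflows the 24 GB card while fitting within 192 GB. Unified memory is shared with the CPU and operating system, so in practice not all of it is available to GPU workloads.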

To be sure, AI capabilities are already widespread on mobile devices, many of which have neural chips for inferencing. But Apple is not treating AI/ML as a standalone feature or product; rather, it is a means of improving existing functionality or adding new functionality to existing apps, Sanders said.

Google is also trying to exploit on-device functionality to accelerate AI on client devices. The company has hardware capabilities in its Pixel devices that work with cloud-based AI and ML features. Google in April also announced that it was enabling WebGPU, the successor to WebGL, in its Chrome browser. The API lets web applications take advantage of GPU resources on client devices for faster AI.

Apple last week detailed a new multimodal transformer model called ByteFormer, which can take in and contextualize audio, video, and other inputs. Instead of converting each kind of input into its own specialized representation, it operates on the raw bytes of audio, visual, or text input, which makes it possible to build models that work across multiple input modalities. The model was trained on Nvidia A100 GPUs, though it wasn't clear whether the GPUs were in Apple's data centers.
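The byte-level idea can be illustrated with its first step: every byte of a file, whatever the modality, becomes one of 256 possible token IDs, each of which is looked up in an embedding table. The minimal sketch below shows only that input stage under stated assumptions; it is not Apple's implementation, and the embedding size and random initialization are hypothetical stand-ins for learned weights.

```python
import random

VOCAB_SIZE = 256  # one token ID per possible byte value (0-255)
EMBED_DIM = 8     # hypothetical; real models use far larger dimensions


def bytes_to_tokens(data: bytes) -> list[int]:
    """Treat every byte of a file as a token ID, regardless of modality."""
    return list(data)


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Randomly initialized lookup table standing in for learned embeddings."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]


def embed(tokens: list[int], table: list[list[float]]) -> list[list[float]]:
    """Look up one embedding vector per byte token."""
    return [table[t] for t in tokens]


table = make_embedding_table(VOCAB_SIZE, EMBED_DIM)

# The same code path handles UTF-8 text and the header bytes of a WAV audio file.
text_tokens = bytes_to_tokens("hi".encode("utf-8"))
wav_tokens = bytes_to_tokens(b"RIFF")
print(text_tokens)  # [104, 105]
print(wav_tokens)   # [82, 73, 70, 70]
print(len(embed(wav_tokens, table)), len(embed(wav_tokens, table)[0]))  # 4 8
```

In a full model, the embedded sequence would feed a transformer. Byte sequences are much longer than word-token sequences, so byte-level models generally need some way to shorten them or attend over them cheaply.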

Sanders said that Apple is exploring generative AI, though it has not been productized yet. Apple maintains a port of Stable Diffusion compatible with the GPU and ML cores of its M-series chips on Mac and iPad. While not feature-complete, it is rather far along, including support for ControlNet and image inpainting, he said.