Researchers Can Get Free Access to World’s Largest Chip Supporting GPT-3

Since ChatGPT took the world by storm, companies have opened their pocketbooks to explore the technology. It has also brought attention to OpenAI’s GPT-3, the large language model behind the popular chatbot's human-like responses to queries.

But the hardware used to train these models, mostly Nvidia GPUs, is in short supply or accessible only to companies with deep pockets. The Pittsburgh Supercomputing Center (PSC) wants to change that by giving researchers free access to the world’s largest chip to run transformer models like GPT-3, which Microsoft has adapted for the AI features in its Bing search engine.

The supercomputing center, a collaboration between Carnegie Mellon University and the University of Pittsburgh, is accepting a new round of proposals for access to the Cerebras CS-2 system, which is widely used to train large language models. The CS-2 is the AI accelerator in PSC’s Neocortex supercomputer.

A webinar is being held on Tuesday, Feb. 28 from 2:00pm to 3:00pm ET. Participants will need to register on PSC’s website to attend the webinar.

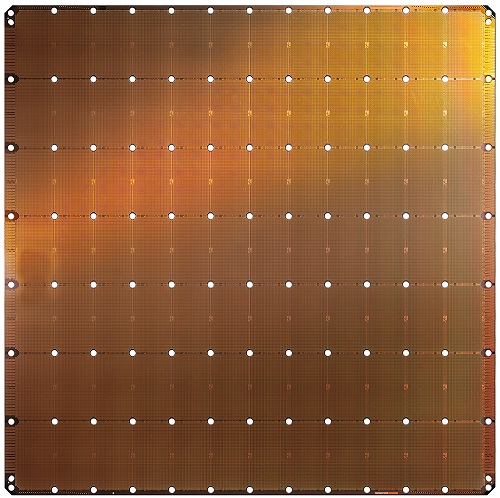

The CS-2 is built around Cerebras’ Wafer-Scale Engine, which is considered the world’s largest chip, with 850,000 cores. The chip is used both for AI, which approximates results through statistical reasoning, and for conventional supercomputing, such as the National Energy Technology Laboratory’s decarbonization project, which produces more precise results through numerical calculation.

PSC is seeking proposals for computing time on Neocortex primarily for artificial intelligence and machine-learning workloads. Proposals for conventional high-performance computing applications are a lower priority, but proposals that bring new algorithmic approaches to conventional HPC workloads are encouraged. Proposals must be for non-proprietary work and may come only from U.S. research or non-profit institutions.

The CS-2 system supports the popular BERT transformer model in addition to GPT-3.

“There’s a huge amount of talk about large language models and about very large language models, and yet the number of organizations that have actually trained one from scratch after a given size of say… 13 billion [parameters] falls off a cliff. You need a lot of hardware first, whether it is ours or somebody else’s,” said Andrew Feldman, CEO of Cerebras Systems.
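To give a rough sense of why parameter count drives hardware requirements, the sketch below estimates the memory needed just to hold model state while training a 13-billion-parameter model. It assumes mixed-precision training with the Adam optimizer at about 16 bytes per parameter, a common rule of thumb rather than a figure from Cerebras or PSC, and it ignores activations. The 80GB accelerator size is a hypothetical value chosen only for illustration.

```python
# Rough estimate of training-state memory for a transformer model.
# Assumption (not from the article): mixed-precision Adam, ~16 bytes per parameter:
#   2 bytes fp16 weights + 2 bytes fp16 gradients
#   + 4 bytes fp32 master weights + 4 bytes Adam momentum + 4 bytes Adam variance.
# Activations, buffers and framework overhead are ignored.

BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4  # = 16


def training_state_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_TRAINING) -> float:
    """Return approximate model-state memory in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def devices_needed(num_params: float, device_memory_gb: float) -> int:
    """Minimum number of devices whose combined memory can hold the training state."""
    total = training_state_gb(num_params)
    # Ceiling division: round up to a whole number of devices.
    return int(-(-total // device_memory_gb))


if __name__ == "__main__":
    params = 13e9  # the ~13-billion-parameter threshold Feldman mentions
    print(f"{training_state_gb(params):.0f} GB of model state")  # 208 GB
    # Hypothetical 80 GB accelerator, for illustration only.
    print(devices_needed(params, 80), "devices of 80 GB each")  # 3
```

Even under these simplified assumptions, the model state alone outgrows a single device, before counting the activations and data throughput that real training runs also require.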

Training transformer models typically takes a lot of data, tribal knowledge, and deep machine-learning expertise. Training on that data can take weeks, and the hardware options include GPUs, which Microsoft uses for its AI-powered Bing, and TPUs, which Google uses for its Bard chatbot.

PSC’s Neocortex is already being used by researchers to investigate AI in fields such as biotechnology, visual mapping of the universe, and health sciences. The system is also being used to study how to deploy AI on the new memory models implemented in the CS-2.

Cerebras’ WSE-2 chip in the CS-2 packs 2.6 trillion transistors, with all the relevant AI hardware concentrated on a single wafer-sized chip. The system has 40GB of on-chip SRAM, 20 petabytes per second of memory bandwidth, and twelve 100 Gigabit Ethernet ports. The CS-2 is connected to an HPE Superdome Flex system with 32 Intel Xeon Platinum 8280L processors, each with 28 cores running at up to 4GHz.
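A little arithmetic puts these figures in perspective. The sketch below uses only numbers quoted in this article to total the host CPU cores and aggregate Ethernet bandwidth, and divides the on-chip SRAM evenly across the WSE-2’s cores. The even split and the use of decimal gigabytes are simplifying assumptions for illustration, not a description of how Cerebras actually allocates memory.

```python
# Back-of-the-envelope numbers derived from the specs quoted in the article.
WSE2_CORES = 850_000          # cores on the Wafer-Scale Engine
WSE2_SRAM_BYTES = 40 * 10**9  # 40GB on-chip SRAM (decimal gigabytes assumed)
ETHERNET_PORTS = 12           # twelve 100 Gigabit Ethernet ports
PORT_GBPS = 100
HOST_SOCKETS = 32             # Xeon Platinum 8280L processors in the Superdome Flex
CORES_PER_SOCKET = 28


def host_cores() -> int:
    """Total CPU cores in the host system."""
    return HOST_SOCKETS * CORES_PER_SOCKET


def ethernet_gbps() -> int:
    """Aggregate Ethernet bandwidth in gigabits per second."""
    return ETHERNET_PORTS * PORT_GBPS


def sram_per_core_kb() -> float:
    """SRAM per core in kilobytes (10**3 bytes), assuming an even split across cores."""
    return WSE2_SRAM_BYTES / WSE2_CORES / 1e3


if __name__ == "__main__":
    print(host_cores(), "host CPU cores")                    # 896
    print(ethernet_gbps(), "Gb/s of Ethernet I/O")           # 1200
    print(f"~{sram_per_core_kb():.0f} KB of SRAM per core")  # ~47
```

The contrast is the point: the host contributes fewer than a thousand general-purpose cores, while the wafer spreads its memory across hundreds of thousands of small cores, each with only tens of kilobytes of local SRAM.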

By comparison, AI workloads on Nvidia GPUs are generally distributed across many systems. Nvidia’s GPUs rely on the CUDA parallel-programming toolkit, which is widely used in AI research but is closed-source and proprietary.

“I think there are large opportunities for us to displace GPUs and TPUs and do so in a more efficient manner – to use fewer flops to achieve better goals,” Feldman said.

Demand for ChatGPT has already overwhelmed OpenAI’s systems, but it is still very early, and overall demand for AI computing is only set to grow.

“Some companies are building little businesses on it, but it’s far from … being used in every support stack, in every customer service stack. We are just still in the first inning,” Feldman said.

The Pittsburgh Supercomputing Center last week named a new director in James Barr von Oehsen, who was previously the associate vice president for the Office of Advanced Research Computing at Rutgers.