Sony’s Computer Vision AI Stack Takes Pixel Analysis to the Edge

Computer vision was among the earliest technologies to wow audiences with the capabilities of artificial intelligence. But the collective public awe shifted with the emergence of the large language models that power ChatGPT and the search engines from Microsoft and Google.

Computer vision is complicated to implement because of the computing resources it requires, the size of the data sets, and the models needed to build a trained system that can produce tangible results.

The computer vision AI stack starts with collecting raw data and then filtering and manipulating pixels. Nvidia, for example, uses a GPU-based workflow that includes customizable neural-network denoising and upscaling, video data sets, and scripting.

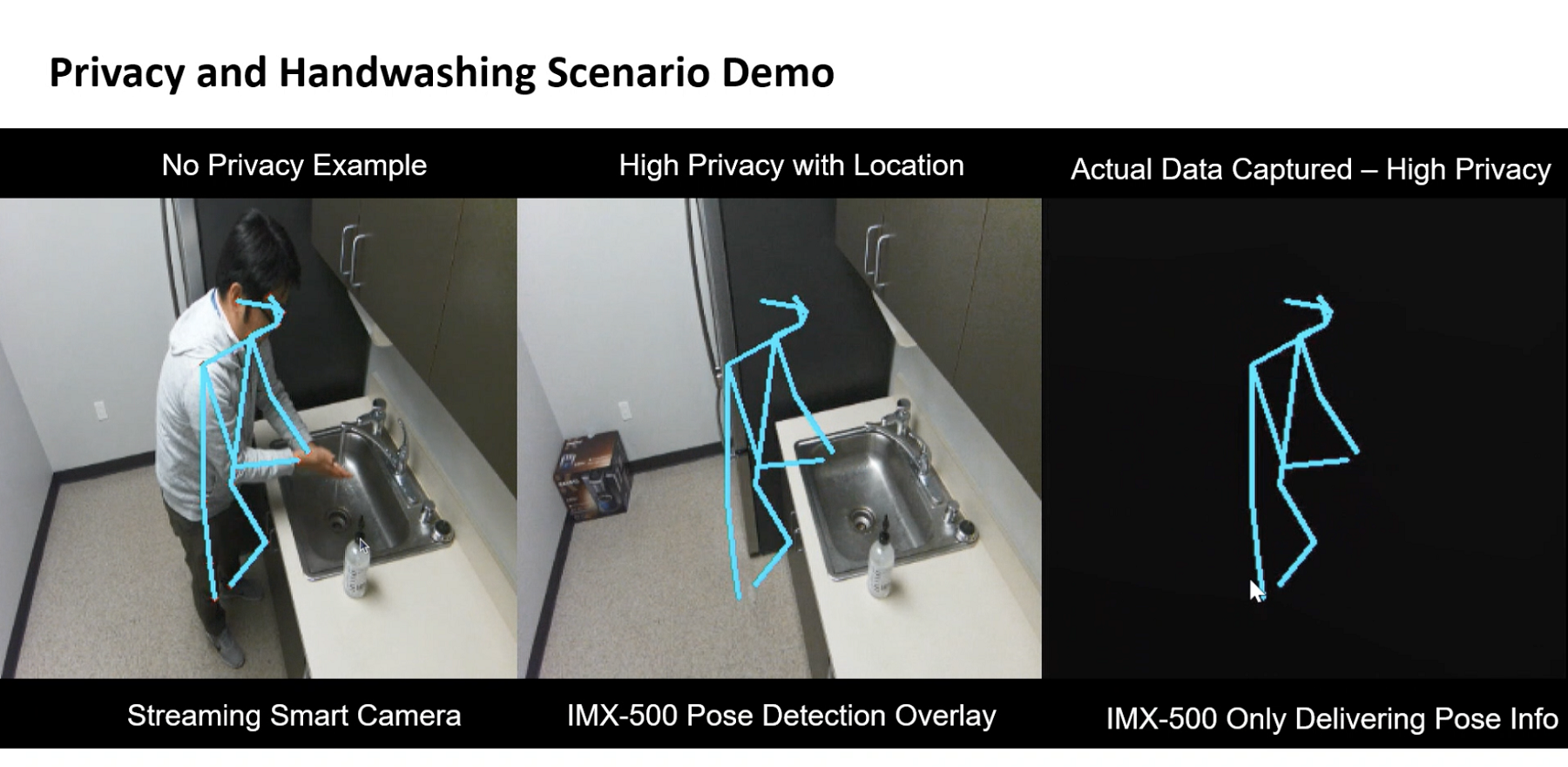

Sony Semiconductor sees another way to advance computer vision: analyzing raw data and pixels on cameras at the edge, and sending upstream only the information that is relevant to AI systems.

This technique, which follows the IoT model of inferencing at the edge, reduces the load on network bandwidth and on the GPUs that usually handle image processing.

Analyzing a full image to identify an object is one approach, but Sony believes it is more economical to go granular and analyze pixels on the camera itself. To that end, Sony has introduced a full-stack AI offering for enterprises called Aitrios, which includes an AI camera, a machine-learning model, and development tools.

"AI guys don't care. They want more accurate data. They do not necessarily need more beautiful data. The interpretation of the pixel is quite different," said Mark Hanson, vice president of technology and business innovation at Sony Semiconductor.

The Sony stack collects data through intelligent vision sensors called the IMX500 and IMX501, which interpret data differently for use in AI. Sony removed logic that reduced the pixel structure, for example to shrink the pixels or to match a specific image resolution. Removing it exposed each pixel to more light and gave it better sensitivity.

"We put this logic chip that does all the computation – it runs the AI model, essentially. They are really combined together and instead of having to go through a bus structure to some GPU or CPU, everything's inside," Hanson said.

Once the sensor captures an image, it is processed within a few milliseconds. After the model runs, the output, such as the detection of a person, an animal, or a human pose, is delivered as a text string or metadata.
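To illustrate why sending inference results instead of pixels eases the load on the network, here is a minimal Python sketch comparing the size of one uncompressed raw frame with a compact metadata string describing a single detection. The frame geometry (4,056 by 3,040 pixels at 10 bits per sample), the JSON fields (`label`, `score`, `box`), and the helper names are assumptions chosen for illustration. They are not Sony's IMX500 output format or part of the Aitrios tools.

```python
import json

# Assumed frame geometry for illustration only (roughly a 12-megapixel
# sensor with 10-bit raw samples); not an official Sony specification.
WIDTH, HEIGHT, BITS_PER_PIXEL = 4056, 3040, 10


def raw_frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to send one uncompressed raw frame upstream."""
    return width * height * bits_per_pixel // 8


def detection_metadata(detections: list) -> bytes:
    """Serialize on-sensor inference results as compact JSON (hypothetical schema)."""
    return json.dumps({"detections": detections}, separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    # Hypothetical result of a person-detection model running on the sensor.
    results = [{"label": "person", "score": 0.91, "box": [120, 80, 340, 610]}]

    raw = raw_frame_bytes(WIDTH, HEIGHT, BITS_PER_PIXEL)
    meta = detection_metadata(results)

    print(f"raw frame: {raw:,} bytes")
    print(f"metadata:  {len(meta):,} bytes")
    print(f"ratio:     about {raw // len(meta):,}x smaller per frame")
```

The ratio is only indicative: real savings depend on video compression, frame rate, and how much metadata a given model emits per frame.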

"We started thinking 'I can run these teeny models on the edge, there's a lot of trickery that we can do between the pixel and the logic,'" Hanson said.

"We can expose that stuff to the AI guys that would essentially enable them to do some unique things they cannot do with a standalone camera device vendor, or a GPU or a CPU, because we can take the data feed in a different way and interpret it directly on the chip," Hanson said.

That is more efficient than conventional camera setups in AI-enabled retail stores, which require equipment such as 4K cameras and CAT-5 cabling to feed remote back-ends that process all the data. At the chip level, Qualcomm and MediaTek already offer camera modules, but they process and move data differently.

Mobile chips with camera modules send image data over a MIPI bus to a GPU for acceleration and then to a CPU that manages the output and communications. There are many hoops to jump through before image processing is complete. For example, when an expensive camera in a car captures an image, a sophisticated AI system is needed to process it, which increases GPU usage and power consumption.

By comparison, Sony's camera is a PoE (Power over Ethernet) camera that draws its power from the network cable, and it has no switches, fans, or heat sinks. The fully integrated device includes an MCU that turns it on and off and handles communication over the network. The hardware is relatively low power, drawing only 100 milliwatts.

"It can run very sophisticated models to the point where it can recognize up to 40 or 50 classifiers just on one little camera. We can have a version ... that runs on Wi-Fi," Hanson said.

Sony's technology is a take on distributed computing, an approach that will evolve as AI workloads are shared between computing elements at the edge and in data centers. It is also a step toward sparse computing, a distributed computing architecture that brings computing closer to the data rather than moving the data to the computing resources, which is seen as inefficient.

Most people still use cameras offline and don't want to wait for the cloud to process their pictures before they can see them, said Naveen Rao, CEO and cofounder of MosaicML, a machine-learning services company.

"By putting the ability into the camera, it just writes the images to the media with all the corrections. The only reason to do things on a device is because of latency – I need the result very quickly for user experience. And working without connectivity – I could be taking pics in Antarctica and I need the photo processing to still work," Rao said.

Sony's core Aitrios technology includes a stack that supports AI models built with technologies such as TinyML, which is designed to run deep learning on microcontrollers at the edge.

The sensor that collects images can feed directly into a cloud model, much as 5G cells and other sensors collect data and feed it into cloud services. Sony is partnering with Microsoft to make the sensor an endpoint that processes imaging data at the edge.

The data can be fed directly into larger trained models in cloud services such as Azure, which can also provide access to custom or synthetic data sets for training AI models.

An Aitrios console sits on top of the stack and acts as the management interface for the cameras. It discovers cameras, downloads firmware, and manages and deploys updates to them. It also finds AI models in the marketplace and sends them to the cameras.

The technology could be used in retail stores to monitor on-shelf availability, or to optimize traffic flow by showing where customers spend the most time on the floor. It can also help identify where theft is occurring and dispatch security staff.

There was no word on whether the camera is qualified for use with Google Cloud or Amazon Web Services. Cloud providers are extending their services beyond their conventional data centers to include edge devices. That is happening as 5G incorporates specialized networks that let sensors transmit steady streams of low-bandwidth data.