Intel, Habana Labs and Hugging Face Advance Deep Learning Software

Dec. 7, 2022 -- Over the past year, Intel, Habana Labs and Hugging Face have continued to improve efficiency and lower barriers to adoption of artificial intelligence (AI) through open source projects, integrated developer experiences and scientific research. This work has produced key advancements and efficiency gains for building and training high-quality transformer models.

Transformer models deliver advanced performance on a wide range of machine and deep learning tasks like natural language processing (NLP), computer vision (CV), speech and others. Training these deep learning models at scale requires a large amount of computing power and can make the process time-consuming, complex and costly.

The focus of Intel’s ongoing work with Hugging Face through the Intel Disruptor Program is to scale adoption of training and inference solutions optimized for the latest Intel Xeon Scalable, Habana Gaudi and Gaudi2 processors. The collaboration brings the most advanced deep learning innovations from the Intel AI Toolkit to the Hugging Face open source ecosystem and informs the design of future Intel architectures. This work delivered advancements in distributed fine-tuning on Intel Xeon platforms, built-in optimizations, accelerated training with Habana Gaudi and few-shot learning.

Distributed Fine-Tuning on Intel Xeon Platform

When training on a single CPU node is too slow, data scientists turn to distributed training: each server in a cluster keeps a copy of the model, trains it on a subset of the training dataset and exchanges results with the other nodes through the Intel oneAPI Collective Communications Library (oneCCL), so the cluster converges to a final model faster. Hugging Face transformers now supports this natively, which makes distributed fine-tuning easier for data scientists.
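
To make the data-parallel scheme concrete, here is a minimal, dependency-free Python simulation; it is not code from transformers or oneCCL. Four hypothetical workers each hold a copy of a one-parameter linear model, compute a gradient on their own shard of the data, and then average gradients through `allreduce_mean`, a hypothetical stand-in for the allreduce collective that oneCCL performs across real nodes. All function names are illustrative.

```python
# Minimal simulation of synchronous data-parallel training (no real networking).
# Each hypothetical "worker" keeps its own copy of a 1-D linear model y = w * x.

def shard(data, num_workers):
    """Split the dataset round-robin into one shard per worker."""
    return [data[i::num_workers] for i in range(num_workers)]

def local_gradient(w, samples):
    """Mean-squared-error gradient d/dw of (w*x - y)**2 over one shard."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)

def allreduce_mean(values):
    """Stand-in for an allreduce collective: every worker receives the mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def train_data_parallel(data, num_workers=4, lr=0.01, steps=200):
    shards = shard(data, num_workers)
    replicas = [0.0] * num_workers  # identical starting weights on every worker
    for _ in range(steps):
        # 1. Each worker computes a gradient on its own shard only.
        grads = [local_gradient(w, s) for w, s in zip(replicas, shards)]
        # 2. Workers exchange gradients and all receive the same average.
        synced = allreduce_mean(grads)
        # 3. Each worker applies the shared update, so the copies stay in sync.
        replicas = [w - lr * g for w, g in zip(replicas, synced)]
    return replicas

# Toy dataset following y = 3x; training should recover w close to 3.
data = [(i / 10, 3 * i / 10) for i in range(1, 41)]
weights = train_data_parallel(data)
print(weights)
```

Because every replica applies the same averaged gradient, the copies never drift apart and behave as a single model. In transformers itself the collective backend is chosen through `TrainingArguments` rather than written by hand; the exact argument name has changed across releases, so check the documentation for the version in use.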

One example is accelerating PyTorch training of transformer models on a distributed cluster of Intel Xeon Scalable processor servers. To let PyTorch take advantage of Intel Advanced Matrix Extensions (Intel AMX), AVX-512 and Intel Vector Neural Network Instructions (VNNI), hardware features of the latest Intel Xeon Scalable processors, Intel created Intel Extension for PyTorch. This software library provides out-of-the-box speedups for training and inference.
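
To show what a VNNI instruction does, here is a scalar Python emulation of one 32-bit lane of `VPDPBUSD`, the AVX-512 VNNI instruction that multiplies four unsigned 8-bit values by four signed 8-bit values and adds the products to a 32-bit accumulator in a single step. It only models the instruction's arithmetic. It is not how Intel Extension for PyTorch is called, and the function names and sample values are hypothetical.

```python
# Scalar emulation of one 32-bit lane of the AVX-512 VNNI instruction VPDPBUSD.

def vpdpbusd_lane(acc, a_u8, b_s8):
    """Return acc + sum of four unsigned-8-bit x signed-8-bit products, as int32."""
    assert len(a_u8) == len(b_s8) == 4
    assert all(0 <= v <= 255 for v in a_u8), "first operand is unsigned 8-bit"
    assert all(-128 <= v <= 127 for v in b_s8), "second operand is signed 8-bit"
    total = acc + sum(a * b for a, b in zip(a_u8, b_s8))
    # The non-saturating form wraps on 32-bit overflow (two's complement).
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total >= (1 << 31) else total

def int8_dot(a_u8, b_s8):
    """Dot product of two 8-bit vectors (length a multiple of 4), four elements per step."""
    acc = 0
    for i in range(0, len(a_u8), 4):
        acc = vpdpbusd_lane(acc, a_u8[i:i + 4], b_s8[i:i + 4])
    return acc

activations = [12, 200, 7, 0, 255, 31, 64, 128]  # e.g. quantized activations (u8)
weights = [-3, 5, 127, -128, 1, -1, 2, 0]         # e.g. quantized weights (s8)
print(int8_dot(activations, weights))
```

On real hardware one such instruction processes 16 lanes at once in a 512-bit register, which is where the speedup for INT8 inference comes from.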

In addition, Hugging Face transformers provides a Trainer API that makes it easier to start training without writing a training loop by hand. The Trainer also offers an API for hyperparameter search and currently supports multiple search backends, including Intel’s SigOpt, a hosted hyperparameter optimization service. This lets data scientists train and find the best model more efficiently.
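
The Trainer's `hyperparameter_search` method takes a search space, a number of trials and a `direction` ("maximize" or "minimize"), and hands the search itself to the selected backend. The pure-Python sketch below mimics that trial loop with plain random search. SigOpt and the other backends use more sophisticated optimizers, so treat this only as an illustration; `random_search` and `toy_objective` are hypothetical.

```python
import random

def random_search(objective, space, n_trials=20, direction="maximize", seed=0):
    """Evaluate `n_trials` random configurations and return the best one.

    `space` maps each hyperparameter name to either a (low, high) tuple,
    sampled uniformly, or a list of discrete choices.
    """
    if direction not in ("maximize", "minimize"):
        raise ValueError("direction must be 'maximize' or 'minimize'")
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_params, best_score = None, None
    for _ in range(n_trials):
        params = {
            name: rng.uniform(*spec) if isinstance(spec, tuple) else rng.choice(spec)
            for name, spec in space.items()
        }
        score = objective(params)  # in practice: train, then evaluate
        improved = best_score is None or (
            score > best_score if direction == "maximize" else score < best_score
        )
        if improved:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical objective standing in for "evaluation accuracy after training".
def toy_objective(params):
    lr_penalty = abs(params["learning_rate"] - 0.01)
    batch_penalty = 0.0 if params["batch_size"] == 16 else 0.5
    return 1.0 - lr_penalty - batch_penalty

space = {"learning_rate": (1e-5, 1e-1), "batch_size": [8, 16, 32]}
best, score = random_search(toy_objective, space, n_trials=50)
print(best, score)
```

Each trial in a real search is a full training run, which is why smarter backends that need fewer trials to find a good configuration can save substantial compute.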

More information can be found in the Hugging Face blog post “Accelerating PyTorch Distributed Fine-tuning with Intel Technologies” and in the documentation pages “Efficient Training on Multiple CPUs” and “Hyperparameter Search Using Trainer API.”

Optimum Developer Experience

Optimum is an open source library created by Hugging Face to simplify transformer acceleration across a growing range of training and inference devices. With built-in optimization techniques and ready-made scripts, beginners can use Optimum out of the box and experts can keep tweaking for maximum performance.

Optimum Intel is the interface between the transformers library and the tools and libraries Intel provides to accelerate end-to-end pipelines on Intel architectures. Built on top of Intel Neural Compressor, it offers a unified experience across multiple deep learning frameworks for popular network compression techniques such as quantization, pruning and knowledge distillation. Developers can also use Optimum Intel to run post-training quantization on a transformer model and compare the resulting model metrics on evaluation datasets.
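
Post-training quantization maps already-trained floating-point weights onto a low-precision integer grid without retraining. The pure-Python sketch below applies weight-only, per-tensor symmetric INT8 quantization, which is one common scheme among several that Intel Neural Compressor supports; its API is not shown here. It then compares the accuracy of the original and quantized versions of a toy linear classifier on a small evaluation set. The weights, data and function names are hypothetical.

```python
# Post-training symmetric INT8 quantization of a toy linear classifier (weights only).

def quantize_symmetric(weights, num_bits=8):
    """Map floats to integers q in [-qmax, qmax] so that w is approximately scale * q."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]

def predict(weights, bias, x):
    """Binary linear classifier: class 1 if w . x + b > 0, else class 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def accuracy(weights, bias, dataset):
    return sum(predict(weights, bias, x) == y for x, y in dataset) / len(dataset)

# Hypothetical trained weights; the bias is kept in floating point.
fp32_weights, bias = [0.8, -0.35, 0.12, 0.05], -0.1
eval_set = [([1, 0, 0, 0], 1), ([0, 1, 0, 0], 0), ([1, 1, 1, 1], 1), ([0, 2, 1, 0], 0)]

q, scale = quantize_symmetric(fp32_weights)
int8_weights = dequantize(q, scale)
print("int8 codes:", q)
print("fp32 accuracy:", accuracy(fp32_weights, bias, eval_set))
print("int8 accuracy:", accuracy(int8_weights, bias, eval_set))
```

Each weight moves by at most half a quantization step (`scale / 2`); the evaluation-set comparison is what tells you whether that error matters for the task.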

Optimum Intel also provides a simple interface to optimize transformer models, convert them to the OpenVINO Intermediate Representation (IR) format and run inference with OpenVINO.

More context can be found on GitHub’s Hugging Face Optimum Intel page and Hugging Face’s Optimum page.

Accelerated Training with Habana Gaudi

Habana Labs and Hugging Face are collaborating to make it easier and quicker to train large-scale, high-quality transformer models. The integration of Habana’s SynapseAI software suite with the Hugging Face Optimum-Habana open source library enables data scientists and machine learning engineers to accelerate transformer deep learning training with Habana processors – Gaudi and Gaudi2 – with a few lines of code.

The Optimum-Habana library supports a variety of computer vision, natural language and multimodal models. The supported and tested model architectures include BERT, ALBERT, DistilBERT, RoBERTa, Vision Transformer, Swin, T5, GPT-2, wav2vec2 and Stable Diffusion. More than 40,000 models based on these architectures are currently available on the Hugging Face Hub, and developers can easily enable them on Gaudi and Gaudi2 with Optimum-Habana.

A key benefit of training on the Habana Gaudi solution, which powers Amazon’s EC2 DL1 instances, is cost efficiency – delivering up to 40% better price-to-performance than comparable training solutions, enabling customers to train more while spending less. Gaudi2, built on the same high-efficiency architecture as first-generation Gaudi, also promises to deliver great price performance.
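
As a worked example of what the price-to-performance figure implies, assume price-to-performance means training work completed per dollar. A 40% improvement then cuts the cost of a fixed job to 1/1.4, about 71.4% of the baseline (roughly 28.6% savings), or lets a fixed budget cover 1.4 times as much training. The dollar amounts below are hypothetical, and 40% is the stated upper bound, not a guaranteed result.

```python
# Worked arithmetic for a price-to-performance improvement.

def cost_of_fixed_job(baseline_cost, price_perf_gain):
    """Cost of the same training job when work-per-dollar rises by price_perf_gain."""
    return baseline_cost / (1.0 + price_perf_gain)

def jobs_per_budget(budget, baseline_cost, price_perf_gain):
    """Number of baseline-sized training jobs a fixed budget covers after the gain."""
    return budget * (1.0 + price_perf_gain) / baseline_cost

# Hypothetical figures: a training job that costs $1,000 on the baseline solution.
new_cost = cost_of_fixed_job(1000.0, 0.40)
print(f"cost of the same job: ${new_cost:.2f} ({1 - new_cost / 1000.0:.1%} saved)")
print(f"jobs per $10,000: {jobs_per_budget(10_000.0, 1000.0, 0.40):.1f} (baseline: 10.0)")
```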

Habana DeepSpeed is also integrated in the Optimum-Habana library and makes it easy to configure and train large language models at scale on Gaudi devices using DeepSpeed optimizations. You can learn more with the Optimum-Habana DeepSpeed usage guide.
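
DeepSpeed's main memory optimization is ZeRO, enabled through the `zero_optimization` section of a DeepSpeed config (for example `{"zero_optimization": {"stage": 2}}`). It partitions optimizer states, then gradients and, at stage 3, the parameters themselves across devices. The sketch below applies the per-device model-state accounting from the ZeRO paper for mixed-precision Adam (16 bytes per parameter before partitioning). It ignores activations and temporary buffers, and the function name is hypothetical.

```python
# Per-device memory for model states under DeepSpeed ZeRO, following the
# accounting in the ZeRO paper for mixed-precision Adam training:
#   2 bytes/param for fp16/bf16 weights, 2 bytes/param for gradients,
#   12 bytes/param for optimizer states (fp32 master weights, momentum, variance).
# Activations, buffers and fragmentation are ignored.

def zero_model_state_gb(num_params, num_devices, stage):
    weights = 2 * num_params
    grads = 2 * num_params
    optim = 12 * num_params
    if stage == 0:      # plain data parallelism: everything replicated
        total = weights + grads + optim
    elif stage == 1:    # optimizer states partitioned
        total = weights + grads + optim / num_devices
    elif stage == 2:    # optimizer states and gradients partitioned
        total = weights + (grads + optim) / num_devices
    elif stage == 3:    # parameters partitioned as well
        total = (weights + grads + optim) / num_devices
    else:
        raise ValueError("stage must be 0, 1, 2 or 3")
    return total / 1e9  # bytes -> GB (10**9 bytes)

for stage in range(4):
    print(stage, f"{zero_model_state_gb(7.5e9, 64, stage):.3f} GB")
```

For a 7.5-billion-parameter model on 64 devices this gives 120 GB per device without partitioning and roughly 31.4, 16.6 and 1.9 GB at stages 1, 2 and 3, in line with the figures in the ZeRO paper.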

The latest release of Optimum-Habana adds support for the Stable Diffusion pipeline from the Hugging Face diffusers library, giving the Hugging Face developer community cost-efficient text-to-image generation on Habana Gaudi.

More context can be found on the Hugging Face blog “Habana Labs and Hugging Face Partner to Accelerate Transformer Model Training” and the Habana Labs blogs “Memory-Efficient Training on Habana Gaudi with DeepSpeed” and “Generation with PyTorch V-diffusion and Habana Gaudi” and the video “Julien Simon Video: Accelerate Transformer Training with Optimum Habana.”

Few-shot Learning in Production

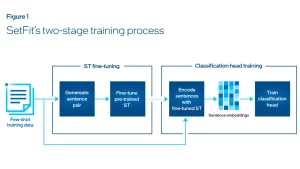

Intel Labs, Hugging Face and UKP Lab recently introduced SetFit, an efficient framework for few-shot fine-tuning of Sentence Transformers. Few-shot learning with pretrained language models has emerged as a promising solution to a real data scientist challenge: dealing with data that has few to no labels.

Current techniques for few-shot fine-tuning require handcrafted prompts or verbalizers to convert examples into a format that's suitable for the underlying language model. SetFit dispenses with prompts by generating rich embeddings directly from a small number of labeled text examples.
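
SetFit's first stage expands a handful of labeled sentences into many training pairs: two sentences with the same label form a positive pair and two with different labels form a negative pair. The Sentence Transformer is fine-tuned contrastively on these pairs, and a classification head is then trained on the resulting embeddings. The sketch below enumerates every pair for clarity, whereas the setfit library generates a configurable number of sampled pairs; the function name and example texts are hypothetical.

```python
import itertools

def make_contrastive_pairs(examples):
    """Build SetFit-style sentence pairs from (text, label) examples.

    Same-label pairs get target similarity 1.0 (positive pairs) and
    different-label pairs get 0.0 (negative pairs). This sketch enumerates
    every pair rather than sampling a subset.
    """
    return [
        (text_a, text_b, 1.0 if label_a == label_b else 0.0)
        for (text_a, label_a), (text_b, label_b) in itertools.combinations(examples, 2)
    ]

# Hypothetical few-shot sentiment data: eight labeled sentences per class.
examples = [(f"positive review {i}", "positive") for i in range(8)]
examples += [(f"negative review {i}", "negative") for i in range(8)]

pairs = make_contrastive_pairs(examples)
positives = sum(1 for _, _, target in pairs if target == 1.0)
print(len(pairs), positives, len(pairs) - positives)
```

With eight examples per class in a binary task, 16 labeled sentences already yield 120 pairs (56 positive, 64 negative), which helps explain how the method learns useful embeddings from so little labeled data.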

Researchers designed SetFit to be used with any Sentence Transformer on the Hugging Face Hub, allowing text to be classified in multiple languages by fine-tuning a multilingual checkpoint.

SetFit doesn't require large-scale models like T5 or GPT-3 to achieve high accuracy. It is significantly more sample-efficient and robust to noise than standard fine-tuning. For example, with only eight labeled examples per class on an example sentiment dataset, SetFit was competitive with fine-tuning RoBERTa Large on the full training set of 3,000 examples. Hugging Face also found that SetFit achieves results comparable to T-Few 3B despite being prompt-free and 27 times smaller, which makes it faster and much cheaper to train.

More context can be found in the Hugging Face blog post “SetFit: Efficient Few-Shot Learning Without Prompts.” Registration is open for a Dec. 14 session in which Hugging Face and Intel discuss few-shot learning in production and SetFit inference on CPU.

Open source projects, integrated developer experiences and scientific research are just some of the ways Intel engages with the ecosystem and contributes to reducing the cost of AI. Tools and software accelerate the developer journey to build applications and unleash processor performance. Intel is on a mission to make it easier to build and deploy AI anywhere, enabling data scientists and machine learning practitioners to apply the latest optimization techniques.

Source: Intel