Nvidia Adds Rescale Software Stack to AI Cloud Computing

Nvidia does not have all the internal pieces to build out its massive AI computing empire, so it is enlisting software and hardware partners to scale its so-called AI factories in the cloud.

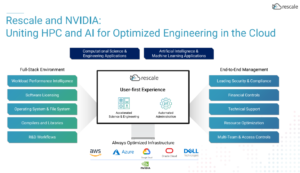

The chipmaker’s latest partnership is with Rescale, which provides the middleware to orchestrate high-performance computing workloads on public and hybrid clouds.

Rescale is adopting Nvidia’s AI technology for what it calls the world’s first compute recommendation engine, which automates the selection of cloud compute resources for high-performance computing applications and simulations, said Edward Hsu, chief product officer at Rescale.

“We have over 1,000 applications and versions from leading simulation software providers. We work with all the major cloud providers and specialized architecture providers to really optimize the performance of computational science and engineering workloads,” Hsu said.

Rescale will also offer Nvidia’s AI Enterprise software platform to customers. The platform includes AI frameworks such as Modulus, which is used to build AI models for scientific applications and simulation. Rescale can integrate AI Enterprise with scientific and engineering applications from companies including ANSYS, Siemens and others for deployment in the cloud.

Companies are starting to use more computationally intensive approaches to scientific and engineering discovery, and the compute recommendation engine helps customers decide what type of computing they need, Hsu said.

Customers’ considerations include the type of architecture, geography, data sovereignty, compliance, and which regions have more GPU resources available for applications such as video and artificial intelligence.
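Constraints like these behave as hard filters: an option that violates data-sovereignty or compliance rules is ruled out no matter how fast it is. The minimal Python sketch below illustrates that filtering step under stated assumptions. It is not Rescale’s implementation; the `ComputeOption` and `JobRequirements` types, the `eligible_options` function, and every provider, region and certification label are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeOption:
    """A hypothetical cloud offering (all fields are illustrative)."""
    provider: str
    region: str
    architecture: str
    compliance: frozenset  # certifications this offering holds
    available_gpus: int


@dataclass(frozen=True)
class JobRequirements:
    """Hypothetical hard constraints attached to a customer's job."""
    architecture: str
    allowed_regions: frozenset  # e.g. limited by data-sovereignty rules
    required_compliance: frozenset
    min_gpus: int


def eligible_options(options, req):
    """Keep options meeting every constraint, largest GPU capacity first."""
    matches = [
        o for o in options
        if o.architecture == req.architecture
        and o.region in req.allowed_regions
        and req.required_compliance <= o.compliance  # subset check
        and o.available_gpus >= req.min_gpus
    ]
    return sorted(matches, key=lambda o: o.available_gpus, reverse=True)


# Usage with made-up catalog entries.
catalog = [
    ComputeOption("cloud-a", "eu-west", "gpu", frozenset({"iso27001"}), 64),
    ComputeOption("cloud-b", "us-east", "gpu", frozenset({"iso27001"}), 512),
    ComputeOption("cloud-c", "eu-central", "gpu", frozenset({"iso27001", "soc2"}), 128),
]
req = JobRequirements("gpu", frozenset({"eu-west", "eu-central"}), frozenset({"iso27001"}), 32)
print([o.provider for o in eligible_options(catalog, req)])  # ['cloud-c', 'cloud-a']
```

Here `cloud-b` has the most GPUs but is excluded because its region falls outside the allowed set. Only the survivors would then be ranked on performance or cost.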

“It gets quite complex,” Hsu said. Rescale took the metadata generated over years of running compute jobs and applied a combination of proprietary Rescale software, benchmarks and TensorFlow, running on Nvidia GPUs, to develop the compute recommendation engine, he said.
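Hsu’s description implies a general pattern: learn from historical job metadata how each application performs on each resource type, then recommend the best option for a new job. Rescale reportedly builds its model with proprietary software, benchmarks and TensorFlow. The sketch below substitutes simple per-(application, instance type) averaging to illustrate the idea in plain Python. All records, instance names, prices and function names are hypothetical, and it assumes a fixed hourly price per instance type.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical job metadata: (application, instance_type, runtime_hours, hourly_price_usd)
history = [
    ("cfd-solver", "cpu-large", 10.0, 3.0),
    ("cfd-solver", "cpu-large", 12.0, 3.0),
    ("cfd-solver", "gpu-node", 2.0, 16.0),
    ("cfd-solver", "gpu-node", 3.0, 16.0),
    ("fea-solver", "cpu-large", 4.0, 3.0),
]


def build_estimates(records):
    """Map (application, instance) to (mean runtime in hours, hourly price)."""
    runtimes = defaultdict(list)
    prices = {}
    for app, instance, hours, price in records:
        runtimes[(app, instance)].append(hours)
        prices[(app, instance)] = price  # assumes price is constant per instance type
    return {key: (mean(hrs), prices[key]) for key, hrs in runtimes.items()}


def recommend(estimates, app, objective="time"):
    """Return (instance, est_hours, est_cost) minimizing runtime or total cost."""
    if objective not in ("time", "cost"):
        raise ValueError("objective must be 'time' or 'cost'")
    candidates = [
        (inst, hours, hours * price)
        for (a, inst), (hours, price) in estimates.items()
        if a == app
    ]
    if not candidates:
        raise ValueError(f"no history for {app!r}")
    index = 1 if objective == "time" else 2
    return min(candidates, key=lambda c: c[index])


estimates = build_estimates(history)
print(recommend(estimates, "cfd-solver", "time"))  # ('gpu-node', 2.5, 40.0)
print(recommend(estimates, "cfd-solver", "cost"))  # ('cpu-large', 11.0, 33.0)
```

The two objectives can point to different hardware. In this toy data the GPU node finishes fastest, while the CPU instance is cheaper overall. A learned model such as the one Hsu describes would be expected to generalize beyond exact (application, instance) pairs it has already seen.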

Major cloud providers already offer their own virtualization and orchestration technologies. Rescale partners with all of them and adds the compute recommendation engine on top so customers can optimize the performance of their workloads on these architectures.

More companies are taking a hybrid cloud approach to meet their growing computing needs. Rescale is using Nvidia’s AI Enterprise software to match engineering applications to hardware resources across clouds.

Hsu described the many pieces of the Nvidia partnership as a full-stack offering. For example, if a customer is designing a turbocharger for cars, the Rescale platform can recommend which parameters to change and which compute resources to use for the fastest and best results.

“The way that we’ve integrated with Rescale is that they can access … all of our software solutions and then run on any of the various cloud Nvidia GPU instances,” said Dion Harris, head of datacenter product marketing at Nvidia, during a press briefing.

Nvidia already dominates the scientific computing space, but Rescale gives HPC customers an indirect way to adopt its AI Enterprise software to train or run AI models.

Nvidia envisions a future in which its hardware and software are tied together in a black box. The company is designing its AI software to run most efficiently on its GPUs. Nvidia’s software stack is built on the CUDA parallel programming framework, and the company is ramping up deployment of its latest Hopper-based GPUs in the cloud.

The GPU maker last month partnered with Oracle to bring the AI Enterprise software and thousands of GPUs to Oracle’s cloud infrastructure. The company’s GPUs are also being adopted by Meta at scale to run AI applications.

Nvidia’s hardware also works with open parallel programming standards such as OpenCL, which is managed by The Khronos Group. Intel’s oneAPI parallel programming framework is built around SYCL, another Khronos standard, and includes a tool that migrates CUDA code to SYCL so it can run on accelerators from Intel and other chipmakers.