Nvidia Hopper and Intel Sapphire Rapids Up for Grabs in Google Cloud

Google Cloud is putting the latest chips from Nvidia and Intel in head-to-head competition as the cloud provider upgrades the computing infrastructure available to customers.

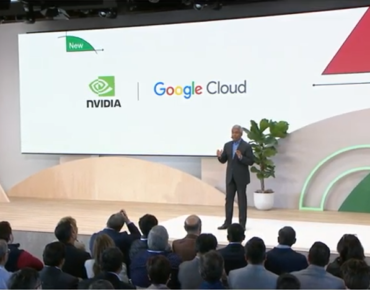

"With Moore's law slowing, new infrastructure advances are required to deliver performance improvements," said Thomas Kurian, CEO of Google Cloud, during a keynote today at the Google Cloud Next summit.

Kurian announced an extended partnership with Nvidia to bring the chip maker's latest technologies to Google Cloud for AI workloads. The offering also includes managed services from Nvidia and AI models from Google, Kurian said.

Google Cloud will offer Nvidia's latest H100 GPUs, based on the newer Hopper architecture, which improves performance for AI workloads through AI-optimized computing units, new instructions, architectural tweaks and improvements to the CUDA parallel programming framework. Google Cloud currently offers GPU instances based on Nvidia's A100, which uses the older Ampere architecture.

The chip maker has a two-way relationship with Google on AI frameworks: it supports Google's TensorFlow, and it offers its CUDA-based managed AI services on GPUs in Google Cloud. For example, data scientists use Nvidia's open-source Rapids AI framework on Nvidia GPUs in Google Cloud.

Kurian also said Google Cloud is bringing Intel's upcoming Sapphire Rapids server chips, also called 4th Gen Xeon Scalable processors, to its cloud service. The chip will be paired with Mount Evans, the infrastructure processing unit (IPU) developed jointly by Google and Intel. The offering will be part of the new C3 virtual machine instances, which were also announced at Google Cloud Next.

The C3 instances will include 200 gigabits per second (Gbps) networking, "the highest available in the cloud," Kurian said. The virtual machines are designed for workloads that require a heavy dose of storage and networking, with the Sapphire Rapids CPU offloading some of that processing to the IPU.

"Developers gain accelerated data movement for fast spin-up and shutdown of services, hardware assisted queuing to help load balance millions of incoming requests and accelerated distributed networking communications," said Janet George, vice president and general manager of Intel's cloud and enterprise solutions group, in a breakout session at Google's cloud summit.

The C3 instances are targeted at high-performance computing, AI, gaming, web serving, and media transcoding applications, George said.

Mount Evans is based on PCIe 4.0 and supports 200Gbps Ethernet and NVMe storage.

Intel recently announced the Intel Developer Cloud, its homegrown cloud service on which customers can test the latest chips and software tools. Intel declined to comment on whether customers testing applications on Sapphire Rapids chips in Intel's Developer Cloud could deploy those applications directly to Google Cloud's C3 instances.

Google didn't announce a new version of its Tensor Processing Unit (TPU). The current generation is TPUv4, which Kurian said is now generally available through the cloud service.

Kurian said TPUv4 "runs large scale training workloads up to 80% faster and 50% cheaper," though he didn't say which rival chip the TPU was being compared against. He cited the example of LG, which uses the TPUs to train an AI model with 300 billion parameters, saying the TPUs outperform other comparable hardware.

Google Cloud also announced its next-generation high-performance storage, called Hyperdisk, which "delivers 80% higher IOPS by decoupling compute instance sizing from storage performance," Kurian said.

The C3 VMs with Hyperdisk have four times more throughput and 10 times more IOPS than C2 instances, Google said in a press release. The speedup will benefit customers running applications such as databases, who will no longer have to choose VMs based on storage performance, Google said.