Cerebras Proposes AI Megacluster with Billions of AI Compute Cores

Chipmaker Cerebras is patching its chips – already considered the world’s largest – to create what could be the largest-ever computing cluster for AI computing.

A reasonably sized “wafer-scale cluster,” as Cerebras calls it, can network together 16 CS-2s into a cluster to create a computing system with 13.6 million cores for natural language processing. But wait, the cluster can be even larger.

“We can connect up to 192 CS-2s into a cluster,” Andrew Feldman, CEO of Cerebras, told HPCwire.

The AI chipmaker made its announcement at the AI Hardware Summit, where the company is presenting a paper on the technology behind patching together a megacluster. The company initially previewed the technology at last year’s Hot Chips, but expanded on the idea at this week’s show.

Cerebras has claimed that a single CS-2 system – which has one wafer-sized chip with 850,000 cores – had trained an AI natural language processing model with 20 billion parameters, which is the largest ever trained on a single chip. Cerebras’ goal is to train larger models, and in less time.

“We have run the largest NLP networks on clusters of CS-2s. We have seen linear performance as we add CS-2s. That means that as you go from one to two CS-2s the training time is cut in half,” Feldman said.

Larger natural-language processing models help in more accurate training. The largest models currently have more than a billion parameters, but are growing even larger. Researchers at Google have proposed new NLP model with 540 billion parameters and neural models that can scale up to 1 trillion parameters.

Each CS-2 system can support models with more than 1 trillion parameters, and Cerebras previously told HPCwire that CS-2 systems can handle models with up to 100 trillion parameters. A cluster of CS-2 such systems can be paired up to train larger AI models.

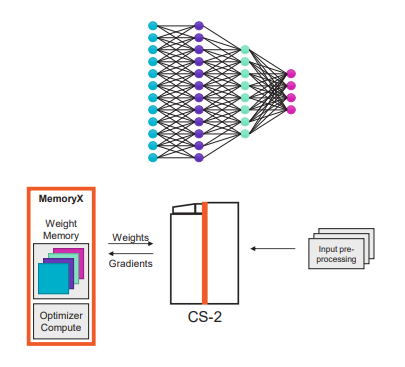

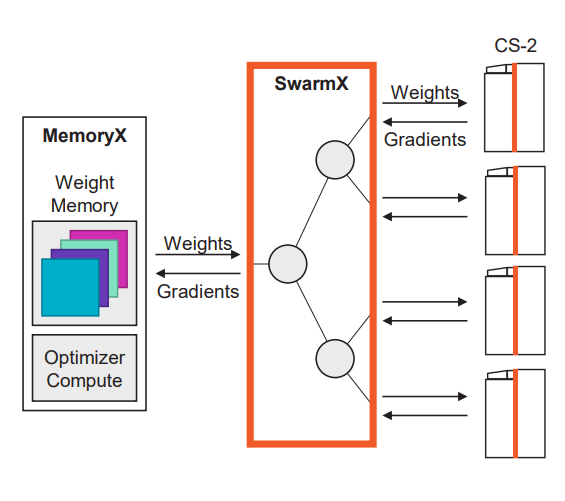

Cerebras has introduced a fabric called SwarmX that will connect CS-2 systems in the cluster. The execution model relies on a technology called “weight streaming,” which disaggregates the memory, compute and networking into separate clusters, which makes the communications straightforward.

AI computing depends on the model size and training speed, and the disaggregation allows users to size up the computing requirements to the problems they are looking to solve. In each CS-2 system, the model parameters are stored in an internal system called MemoryX, which is more of a memory element in the system. The computing being done on the 850,000 computing cores

“The weight streaming execution model disaggregates compute and parameter storage. This allows computing and memory to scale separately and independently,” Feldman said.

The SwarmX interconnect is a separate system that glues together the massive cluster of CS-2 systems. SwarmX operates at a cluster level, which is almost similar to the MemoryX operates at the single CS-2 system – it decouples the memory and computing elements in the cluster, and is able to scale up the number of computing cores available to solve larger problems.

“SwarmX connects MemoryX to clusters of CS-2s. Together the clusters are dead simple to configure and operate, and they produce linear performance scaling,” Feldman said.

The SwarmX technology takes the parameters stored in MemoryX and broadcasts it across the SwarmX fabric to multiple CS-2s. The parameters are replicated across the MemoryX systems in the cluster.

The cross SwarmX fabric uses multiple lanes of 100GbE as transport, and on-chip Swarm fabric is based on in-silicon wires, Feldman said.

Cerebras is targeting the CS-2 cluster system at NLP models with more than 1 billion parameters, even though one CS-2 system is enough to solve a problem. But Cerebras states that moving from one CS-2 to two CS-2s in a cluster cuts the training time in half and so forth.

“Together the clusters … produce linear performance scaling,” Feldman said, adding, “a cluster of 16 or 32 CS-2 could train a trillion-parameter model in less time than today’s GPU clusters train 80 billion parameter models.”

Buying two CS-2 systems could put customers back by millions of dollars, but Cerebras in the presentation argued that such systems are cheaper than the GPU model in clusters, which can’t scale up as effectively and draws more energy.

Cerebras argued that GPU cores need to operate identically across thousands of cores to get a coordinated response time. Calculations also need to be distributed among a complex network of cores, which can be time consuming and inefficient in power consumption.

By comparison, SwarmX divides data sets into parts for training purposes, and creates a scalable broadcast which distributes the weights among the CS-2 systems in a cluster, which sends back the gradients to the coordinated MemoryX cache systems across the cluster.

Switching over a training an NLP model from one CS-1 system to a cluster requires just changing the number of systems in a Python script.

“Large language models like GPT-3 can be spread over a cluster of CS-2s with a single keystroke. That’s how easy it is to do it,” Feldman said.