HPE to Ship First Inference Server with Qualcomm Chip in August

HPE will ship its first server aimed at AI inferencing in August, built around a chip from Qualcomm.

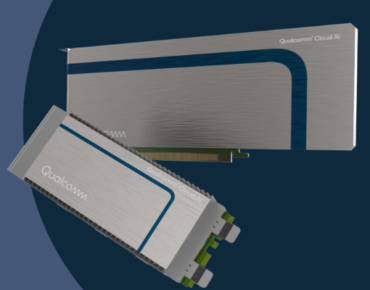

The server, part of the Edgeline 8000 platform, will run on Qualcomm's Cloud AI100 chip, which is targeted at artificial intelligence at the edge. Fabricated on a 7nm process node, the Cloud AI100 has up to 16 "AI cores" and supports the FP16, FP32, INT8 and INT16 data formats.

"I think one of the key things that attracted us to the Qualcomm technology was that it's about a 4x improvement in power performance. When it comes to inference, you are actually often scaling your workload significantly more than you are in training," said Arti Garg, chief strategist for AI strategy at HPE, in an interview with EnterpriseAI at the Discover tradeshow being held in Las Vegas.

The Qualcomm chip comes in three form factors. The dual M.2 for edge and embedded applications offers 70 peak TOPS (tera operations per second) within a 15-watt TDP, while the standard dual M.2 delivers 200 peak TOPS at a maximum of 25 watts. A half-height, half-length (HHHL) PCIe card delivers 400 peak TOPS in a 75-watt package.
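To put the power-performance argument in concrete terms, the short Python sketch divides each form factor's quoted peak TOPS by its quoted power figure. It uses only the numbers Qualcomm states for the three form factors; the form-factor labels are our own shorthand, and because these are peak figures, real inference throughput per watt will be lower and will depend on the workload.

```python
# Peak TOPS and power (watts) for the Cloud AI100 form factors, as quoted in the article.
# Labels are illustrative shorthand, not official product names.
FORM_FACTORS = {
    "dual M.2 (edge/embedded)": (70, 15),
    "dual M.2 (standard)": (200, 25),
    "PCIe HHHL": (400, 75),
}


def tops_per_watt(peak_tops: float, watts: float) -> float:
    """Return peak tera-operations per second delivered per watt."""
    return peak_tops / watts


if __name__ == "__main__":
    for name, (tops, watts) in FORM_FACTORS.items():
        print(f"{name}: {tops_per_watt(tops, watts):.2f} peak TOPS/W")
```

On these vendor figures the standard dual M.2 comes out highest at 8.00 peak TOPS/W, ahead of the PCIe card (about 5.33) and the edge/embedded dual M.2 (about 4.67).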

Over the next year or two, HPE expects to invest more in systems focused on inferencing. Companies will eventually become very sensitive to operational costs, which makes power-performance an important metric for inference.

"A lot of times you're doing inference on the factory floor or in a retail setting, and you don't have the kind of power that you would have available in a data center. That's why it's interesting to us to look at those sorts of alternative architectures," Garg said.

At the Discover tradeshow, HPE focused heavily on AI training, both in high-performance computing and through its GreenLake software offerings.

While much of HPE's strategy is focused on training, there's "a lot more to come on the inference side, a lot more interesting things. To me strategically, it's very important that we have both the model training and the model inference and deployment," Garg said.

HPE's focus is on AI and on providing the computational backbone to solve very large problems.

HPE built Frontier, officially the world's fastest supercomputer, which is deployed at Oak Ridge National Laboratory. Frontier scored 6.86 exaflops on the HPL-AI benchmark, making it the fastest AI computer in the world and more than three times faster than the second-fastest system, Fugaku, which scored around 2 exaflops.

"We think that the data center is probably going to have more than one processor architecture, there may be other hybrid storage, different speeds of storage and things like that. We also want to be exploring what are some of the cutting-edge new architectures and processors designed specifically to support some AI workloads," Garg said.

One example of HPE's experiments with AI processors is a system built with Cerebras Systems technology and deployed at the Leibniz Supercomputing Centre in Germany. HPE paired its Superdome Flex server with Cerebras' CS-2 system, which is built around Cerebras' Wafer-Scale Engine 2 (WSE-2) chip.

HPE is also acting as a system integrator to bridge the gap between academia, where much of the cutting-edge AI research takes place, and the corporate world, where AI is put into real-world use.

Last year HPE acquired Determined AI, whose technology powers HPE's machine learning development environment and the software platform for its newer systems focused on ML development.

“The purpose of the ML development environment is really essentially making it easy for data scientists to collaborate and train deep learning models,” Garg said.