Intel Unveils Help for Busy Cloud Service Provider CPUs With Its New Infrastructure Processing Units

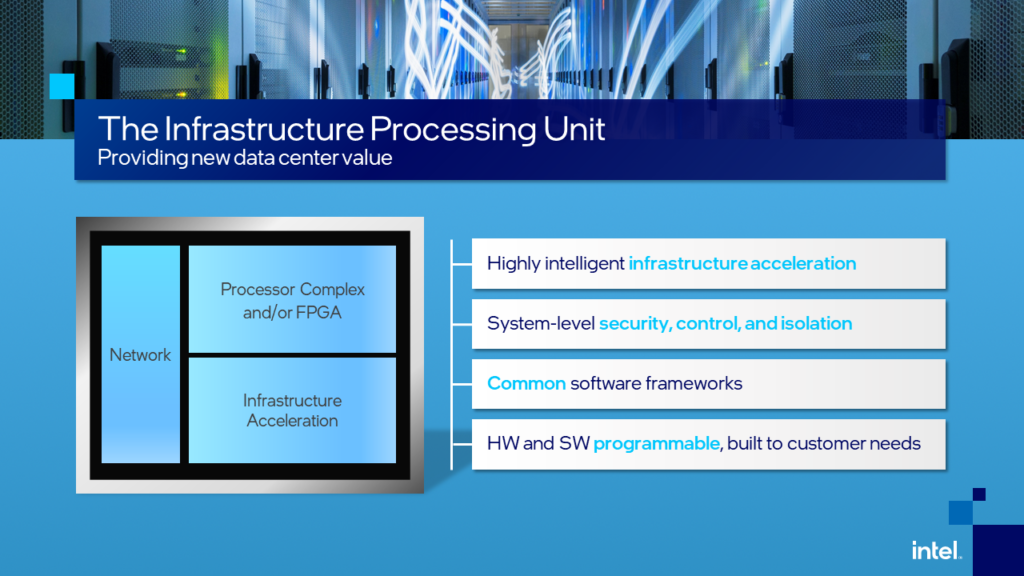

To boost the performance of busy CPUs hosted by cloud service providers, Intel Corp. has launched a new line of Infrastructure Processing Units (IPUs) that take over some of a CPU’s overhead to let it do more processing for revenue-generating tasks.

Intel describes the IPUs as programmable networking devices designed and built to relieve CPUs of some of their responsibilities, much as a GPU frees CPUs from graphics processing work.

Created to help hyperscale customers reduce CPU overhead, the first of the new FPGA-based IPU platforms are already shipping and are being used by multiple cloud service providers, while additional ASIC-based IPU designs are still under development and testing, according to the company. Early customers using the IPUs include Microsoft, Baidu, JD.com and VMware.

Intel announced the IPUs on Monday, June 14, at the Six Five Summit, which Futurum Research and Moor Insights & Strategy are hosting through June 18.

In an Intel video describing the new hardware, Guido Appenzeller, the chief technology officer for the company’s data platforms group, said the devices are dedicated infrastructure processors that can run the infrastructure functions of the cloud for cloud service providers. The cloud service provider’s software runs on the IPU, while the revenue-generating customer software runs on the CPU, he said.

“So, a bank’s financial application, running on the CPU, would now be cleanly separated from the cloud service provider’s infrastructure running on the IPU,” said Appenzeller. The advantage for cloud operators is that accelerators built into the IPUs can handle these infrastructure functions efficiently, he said.

“This optimizes performance, and the cloud operators can now rent 100 percent of the CPU to its guests, which also helps to maximize revenue,” he said. In addition, the IPU lets guests fully control the CPUs, even adding their own hypervisor if desired.

“But the cloud is still fully in control of the infrastructure, and it can sandbox functions, such as networking, security and storage,” said Appenzeller. “And the IPU can replace local disk storage that is directly connected to the server with virtual storage connected via the network. That greatly simplifies data center architecture while adding a tremendous amount of flexibility.”

Intel’s IPU improves data center efficiency and manageability and is built in collaboration with hyperscale cloud partners.

The new IPUs expand upon Intel’s SmartNIC capabilities to help reduce the complexity and inefficiencies in modern data centers, according to the company. The new devices are seen as one of the pillars of Intel’s cloud strategy.

According to Intel, research from Google and Facebook has shown that 22 percent to 80 percent of CPU cycles can be consumed by microservices communication overhead, which is one of the issues being targeted by IPUs.

Additional models of FPGA-based IPU platforms are expected to be released in the future.

Intel’s view of evolving data centers envisions what the company calls an “intelligent architecture where large-scale distributed heterogeneous compute systems work together and is connected seamlessly to appear as a single compute platform,” the company said in a statement. “This new architecture will help resolve today’s challenges of stranded resources, congested data flows and incompatible platform security. This intelligent data center architecture will have three categories of compute — CPU for general-purpose compute, XPU for application-specific or workload-specific acceleration, and IPU for infrastructure acceleration — that will be connected through programmable networks to efficiently utilize data center resources.”

Jack E. Gold, the president and principal analyst with J. Gold Associates, told EnterpriseAI that the announcement of Intel’s IPU is an important step, but that “the IPU is perhaps more of a renaming than a totally new concept.”

An IPU takes specific calculations away from the CPU to lessen its burden and help it produce faster calculations, said Gold. “The IPU is meant to relieve the burden of the CPU on things like storage networks, network connectivity, etc. These functions take a significant portion of CPU cycles to accomplish normally, so being able to do so outside the CPU means the CPU can be doing calculations instead of managing connections and data movement.”

This is especially true when heavy cloud-based traffic is involved, along with services such as virtualized storage and even Network Functions Virtualization, said Gold.

“The functions are built on the basics of the Intel SmartNIC capability, so it is more of an enhancement than a totally new product category,” he said. “And because it uses FPGAs, it is relatively easy to preconfigure and optimize the accelerators needed for the data movement functions, leaving the compute intensive functions where they belong—in the CPU.”

There is competition in this area, said Gold.

“Of course, other companies such as Nvidia are also building special off-the-CPU optimization components, so Intel is not the only one trying to reduce the loads on the CPU,” he said. “But overall, this type of product can offer significant cost and performance advantages.”