Intel, Lenovo Join Forces on HPC Cluster

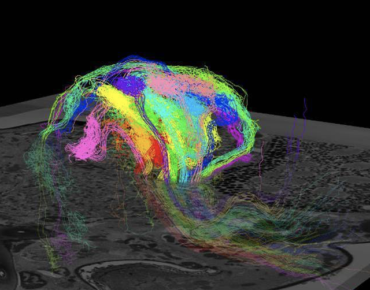

Source: Flatiron Institute/CCB, Janelia Farm/HHMI, Micro Insect Lab/Moscow State University

An HPC cluster equipped for deep learning will be used to process petabytes of scientific data in workload-intensive projects spanning astrophysics to genomics.

AI partners Intel (NASDAQ: INTC) and Lenovo (OTCMKTS: LNVGY) said they are providing the Flatiron Institute of New York City with high-end servers running on Intel second-generation Xeon Scalable processors, which include the chip maker's deep learning acceleration technology, dubbed DL Boost. Intel touts its latest generation of Xeon processors as the only CPU with both AI and HPC acceleration built in.

The HPC infrastructure is being used to process everything from huge genomic sequence data files at one end of the spectrum to 100,000 small files in a single directory at the other.

Flatiron’s HPC cluster running AI and other high-end workloads includes 17,000 processor cores in Lenovo’s ThinkSystem SD530 servers. Along with analyzing large, varied data sets, the combination is being used to simulate complex physical processes, the technology partners said this week.

The Lenovo "dense rack" servers combine the hardware manufacturer's Neptune thermal transfer module technology with Intel's Xeon Platinum 8268 processors, which deliver DL Boost deep learning acceleration. The 8268, introduced earlier this year, is a 24-core server microprocessor based on Intel's Cascade Lake architecture.

In April, Intel released a higher-end version known as Cascade Lake-AP (Advanced Performance) that incorporates its Optane persistent memory technology. Along with HPC applications, the Xeon Platinum 9200 processor, with up to 56 cores, also targets AI and advanced analytics.

The new HPC capability has enabled Flatiron scientists to crunch petabytes of data, opening up new research opportunities. Previously, for example, researchers could sequence only about 2 percent of genomic data. Now they are able to sequence thousands of families at once, making it possible to pinpoint abnormal genes or to develop precision medicines.

The Flatiron configuration includes an HPC cluster with as much as five times more memory than a typical HPC configuration. In another memory-intensive example, researchers used the Flatiron HPC cluster to simulate the visual system of a wasp, a reconstruction that required 500 GB of RAM.

Because space is tight in New York City, thermal management of the HPC cluster was a must. The Intel-Lenovo HPC platform addresses this with a dense, air-cooled system that also offers much greater memory capacity than a typical configuration.

“Cosmological simulations like galaxy formation and black hole creation require hundreds of thousands of cores connected to each other to run. Science should dominate our researchers’ time, not computation,” said Ian Fisk, a co-director at the Flatiron Institute. “Our goal is that the limiting factor in research should be the carbon-based systems rather than the silicon-based systems.”

In August, Intel and Lenovo announced a multi-year commitment to accelerate the convergence of AI and HPC into the exascale era. The partnership focuses on three key areas: systems and solutions, software optimization for HPC and AI convergence, and ecosystem enablement.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).