Microsoft Turbocharges Transactions With ‘Hekaton’ In-Memory

Microsoft is joining the in-memory database party with the release to manufacturing of SQL Server 2014 and its "Hekaton" feature. The new SQL engine has been optimized for in-memory processing, and can boost application performance by as much as a factor of 25.

Hekaton is not a bolt-on product like many in-memory databases out there, but is rather a complete revamping of the SQL processing engine inside of the SQL Server database management system that has been tuned to put database tables, stored procedures, and other database features in main memory instead of out on disk drives. Putting these parts of the database in main memory can radically speed up their performance and also cut down on expensive, bulky, and slow disk drives.

EnterpriseTech attended a briefing at Microsoft's Bellevue, Washington offices last week ahead of the release of the code, with the top brass in Microsoft's Data Platform group on hand to walk through the feeds and speeds of SQL Server 2014 and how it fits into on-premise, cloud, and hybrid datacenters.

The shift from disk-based databases to in-memory was just about inevitable, explained Tracy Daugherty, who is principal group program manager lead for SQL Server 2014 and one of its two cloud variants, Windows Azure SQL Virtual Machines. (Daugherty is not in charge of Azure SQL Database, the database as a service platform Microsoft hosts on its cloud and the third variant of the database that the company sells.)

The update of the engine inside of SQL Server 2014 is a big deal, as is any such major change in any database. The last time Microsoft did a big overhaul of this magnitude was fifteen years ago with SQL Server 7.0. Microsoft saw the need to move the database in-memory for a number of different reasons, and the SQL Server team and Microsoft Research got to work testing out a number of different approaches to speeding up databases.

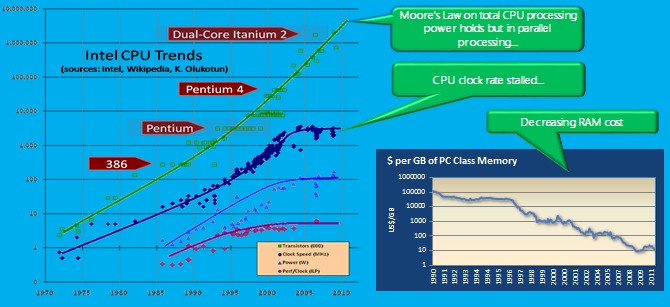

"In 2007, we realized that CPU clock speed was not going up any more, that this magic carpet ride was over, but memory prices were dropping." Daugherty explained. Pulling data from main memory is orders of magnitude faster than pulling it from disk drives because it is inherently faster as well as being a lot closer to the CPUs in the system. "Other people were building separate in-memory databases, but we decided to do something very different, which was to do it inside of SQL Server. If any of you have ever redone your kitchen while living in your home, you know how hard this can be. Building it this way is phenomenal for customers, but very hard for us."

The change in memory pricing is dramatic. Back when SQL Server was still an upstart in the database world in the early 1990s, main memory cost something on the order of $100,000 per gigabyte, and prices remained high for quite a long time. Hence, database management systems were designed to spread data across disk subsystems and had some clever caching algorithms to use main memory and caches to help boost the database performance a bit. Now, very fast server memory with error correction scrubbing costs somewhere between $10 and $20 per gigabyte. In-memory processing is now affordable thanks to Moore's Law, and it is making up for the fact that clock speeds on processors are stuck in a gear below 4 GHz, for the most part.

Strictly speaking, SQL Server 2014 will represent the third in-memory technology that Microsoft has added to its database management system. Back with SQL Server 2012, Microsoft added the "Apollo" column store, which is a quasi-in-memory technology as well as a shift from row-oriented data to column orientation. This can significantly speed up certain kinds of queries where data can be naturally grouped and compressed with a number of algorithmic and data compression techniques. Microsoft updated this code last year, and Hekaton represents the third – and most impressive – in-memory update.

Microsoft put a preview of SQL Server 2014 out last June at its TechEd customer event in New Orleans, with an initial focus on the Hekaton in-memory processing. (This was a Community Technology Preview, in the Microsoft lingo, and was CTP1 to be precise.) Last October, nearly all of the features of SQL Server 2014 were completed and Microsoft let CTP2 fly in the middle of the month, both for download for local testing and as a virtual machine image on the Windows Azure cloud. Since that time, there have been 200,000 downloads of CTP2, and about 10 percent of them are SQL Server images running on the Azure cloud. Microsoft had only promised that the final SQL Server 2014 would come to market sometime in the first half of 2014. As it turns out, the bits for the shiny new database management system will be generally available on April 1 (no kidding), and Microsoft will be hosting a coming out party on April 15 (something to look forward to on American tax day).

There are many interesting aspects to the Hekaton in-memory feature of SQL Server 2014, but perhaps the most interesting is that it does not require any special hardware, as does SAP's HANA in-memory database, and it does not require that all database tables, indexes, stored procedures, and other features be converted to in-memory, again as does SAP HANA. IBM's BLU Acceleration for DB2 is used to bring portions of database tables into memory and combines it with a columnar data store to accelerate queries, but most of the database resides on disk as applications run. (The BLU feature for DB2 was initially only available on AIX systems, but is now available for Linux on X86 servers.) Hekaton is a bit different in that Microsoft has created tools to show the hot tables in the database and allows database admins to convert these to the Hekaton in-memory format, leaving other tables alone on disk where they are.

"We give you the flexibility to choose," says Daugherty. When EnterpriseTech asked if people would be inclined to convert all of their database tables and related files to in-memory storage, Daugherty said that "most people at this point think they have to convert everything, but then we explain that they don't have to and then they change their minds."

The reason is simple. While memory might be four orders of magnitude less costly than it was 25 years ago, it is still by no means free. The latest Xeon E7 v2 processors from Intel support 64 GB memory sticks to reach their full 1.5 TB of memory capacity per socket, but those 64 GB sticks are not widely available and are very expensive, and in most cases 32 GB sticks are still expensive, too. Memory still costs around $22 per gigabyte for 16 GB sticks, which top out a four-socket machine like the ProLiant DL580 Gen8 at 1.5 TB of main memory, at a cost of just over $34,000 for the memory. That's a little more expensive than the server chassis and four reasonably fast twelve-core processors.
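The memory bill is easy to check with a few lines of arithmetic. The sketch below assumes 1 TB equals 1,024 GB and takes the approximate $22-per-gigabyte price at face value; the variable names are purely illustrative.

```python
# Rough cost of filling a four-socket server with 16 GB memory sticks.
# Assumptions: 1 TB = 1,024 GB; the price is the approximate $22 per GB quoted above.
GB_PER_TB = 1024
capacity_gb = 1.5 * GB_PER_TB   # 1.5 TB of main memory
stick_gb = 16                   # capacity of each memory stick
price_per_gb = 22.0             # approximate dollars per gigabyte

sticks_needed = int(capacity_gb // stick_gb)
memory_cost = capacity_gb * price_per_gb

print(f"{sticks_needed} sticks, about ${memory_cost:,.0f}")
```

At exactly $22 per gigabyte this works out to 96 sticks and $33,792, which is in line with the roughly $34,000 figure given that the per-gigabyte price is itself approximate.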

Inside Hekaton

The Hekaton in-memory database effort was a research project at Microsoft three years ago to accelerate online transaction processing, and Kevin Liu, principal lead program manager for SQL Server, was asked to head up the effort to commercialize this approach.

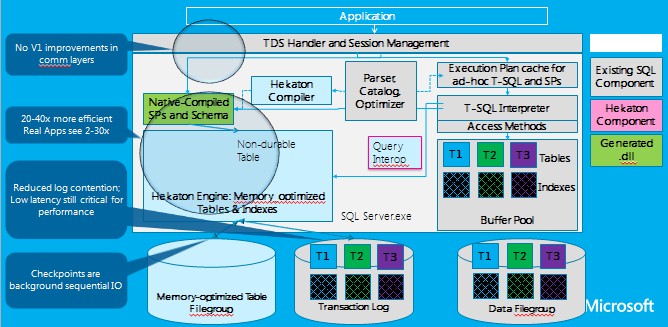

The new Hekaton engine is quite a bit different from the plain vanilla SQL Server engine, and you can continue to use both engines on the same machine, by the way. You move the hot tables to in-memory, or if you want to go completely in memory, you can do that, too. Here is a block diagram of how the two SQL engines are interrelated:

Way back when in SQL Server 2005, Microsoft allowed for a database table in the buffer pool to be pinned in main memory, and it is important to realize that this is not what Hekaton is doing. For various reasons, said Liu, that pinning approach did not yield the kinds of performance increases that companies expect from in-memory processing.

Hekaton changes so many things that it is basically unrecognizable as a variant of SQL Server's engine, but the beautiful thing is that applications that are dependent on SQL Server are blissfully unaware of the difference. You convert tables to Hekaton format using a few clicks after a tool tells the admin which ones are best candidates for such conversion, and applications run faster. A lot faster. (More on that in a moment.)

Hekaton doesn't store indexes like a regular database does, but rather creates them on the fly from data that is resident in memory. The architecture for the in-memory SQL Server engine does not do write-ahead logging. Significantly, it has no locks on rows, no page latches (as a normal database has for memory pages), and no spinlocks. It has a new multi-version, optimistic concurrency control mechanism that has full ACID database properties without using the old mechanisms.
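To make the idea of multi-version, optimistic concurrency control a little more concrete, here is a toy sketch of the general technique. It is not Hekaton's implementation: the `VersionedStore` class, its methods, and the "first committer wins" validation rule are all assumptions chosen for illustration, and the code is single-threaded for simplicity.

```python
# Toy multi-version store with optimistic validation at commit time.
# Illustrative only: names and structure are hypothetical, not Hekaton's design.
class VersionedStore:
    def __init__(self):
        self.versions = {}   # key -> list of (commit_ts, value), oldest first
        self.clock = 0       # logical commit timestamp

    def begin(self):
        # A transaction reads from the snapshot as of its start timestamp.
        return {"start_ts": self.clock, "writes": {}}

    def read(self, txn, key):
        if key in txn["writes"]:
            return txn["writes"][key]
        # Return the newest version committed at or before the snapshot.
        for commit_ts, value in reversed(self.versions.get(key, [])):
            if commit_ts <= txn["start_ts"]:
                return value
        return None

    def write(self, txn, key, value):
        # Writes are buffered privately; no lock is taken on the row.
        txn["writes"][key] = value

    def commit(self, txn):
        # Validate: fail if any written key gained a newer version meanwhile.
        for key in txn["writes"]:
            history = self.versions.get(key, [])
            if history and history[-1][0] > txn["start_ts"]:
                return False  # conflict detected; the caller may retry
        self.clock += 1
        for key, value in txn["writes"].items():
            self.versions.setdefault(key, []).append((self.clock, value))
        return True


store = VersionedStore()
setup = store.begin()
store.write(setup, "stock", 10)
store.commit(setup)

# Two transactions start from the same snapshot and update the same row.
t1 = store.begin()
t2 = store.begin()
store.write(t1, "stock", store.read(t1, "stock") - 1)
store.write(t2, "stock", store.read(t2, "stock") - 1)
first = store.commit(t1)    # succeeds
second = store.commit(t2)   # fails validation: t1 already committed a newer version
```

Neither transaction ever blocks the other. The conflict is only discovered when the second one tries to commit, at which point it can be retried against fresh data.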

Gutting the database engine is important. A row lock can eat something on the order of 10,000 compute cycles, and a page latch can consume several thousand CPU cycles, according to Liu. But the primitive underlying the new concurrency mechanism, called interlocked compare exchange, eats something between 10 and 20 CPU cycles. So the CPU is kept busier rather than kept waiting. To squeeze even more performance out of Hekaton, T-SQL statements (this is the extended variant of the SQL language that Microsoft and Sybase use) are compiled to machine code, stored procedures and their queries become C functions, and other aggressive optimizations are done at compile time.
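The compare-exchange pattern mentioned above is usually wrapped in a retry loop: read the current value, compute a new one, and publish it only if nobody else changed the value in the meantime. The sketch below shows the shape of that loop. Python does not expose the hardware instruction, so the hypothetical `AtomicCell` class emulates it with a small internal lock; that emulation is an assumption made purely for illustration and says nothing about how SQL Server is built or about the cycle counts Liu cites.

```python
import threading


class AtomicCell:
    """Emulates a compare-exchange cell. Python exposes no hardware CAS,
    so a lock stands in for the single atomic instruction (an assumption)."""

    def __init__(self, value):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value

    def compare_exchange(self, expected, new):
        # Atomically: store `new` only if the cell still holds `expected`.
        with self._guard:
            if self._value == expected:
                self._value = new
                return True
            return False


def increment(cell):
    # Optimistic retry loop: read, compute, try to publish, retry on conflict.
    while True:
        seen = cell.load()
        if cell.compare_exchange(seen, seen + 1):
            return


def worker(cell, times):
    for _ in range(times):
        increment(cell)


counter = AtomicCell(0)
threads = [threading.Thread(target=worker, args=(counter, 1000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.load())  # 4000: no increment is lost
```

A thread that loses the race simply loops and tries again with the fresh value, so no caller ever waits while holding a row lock or a page latch.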

All of these changes were made to make better use of multicore processors and to leverage the compute in CPU cores as much as possible.

The communication layers in SQL Server – TDS Handler and Session Management in the chart above – are unchanged with SQL Server 2014. The T-SQL interpreter can hand off queries to the Hekaton engine, or if you want more performance, you can run the queries through a new parser and compiler that will create natively compiled stored procedures and schemas. The important thing is that the old and new engines both exist inside of SQL-Server.exe. Applications are none the wiser that there are two engines.

Here is an important thing to also consider: When Microsoft provides data on Hekaton performance, it is comparing in-memory OLTP with the processing of data that is already fully warmed up in the buffer pools and that has already been brought in off disk drives. This, says Liu, is a fair comparison, whereas comparing it to cold data coming off disks is not. (Keep that in mind when you evaluate other in-memory technologies.)

"Most people assume incorrectly that Hekaton is just using more memory, but Hekaton has a different way of using memory," explains Liu. "You assume the data is resident in memory, and the data structures and the way you manage memory is all different. That is how you get the performance here. As a matter of fact, if you look at it as a per-unit of warmed-up data, Hekaton is actually more efficient than traditional buffer pools. In the most crude way, you can think of Hekaton as an in-memory cache, and that is the easy part. Building T-SQL on top, and having the fully ACID database properties on the bottom, that is the hard part."

Hekaton V1 has some limitations, and customers need to be aware of them. First of all, it is only really designed for X86 machines with one or two sockets. The NUMA tuning for two-socket machines is fine, but Microsoft still has work to do to tune up the main memory to work across four, eight, and possibly more sockets in a single system. The database does not yet support large objects, or LOBs, which will come in a future release of Hekaton. Ditto for triggers, constraints, foreign keys, and sparse columns; the native compiler does not yet support outer joins, either.

At the moment only eight separate indexes per table can be created in Hekaton. All memory-optimized tables and memory internal structures must reside in memory. A database – meaning a collection of interrelated tables, stored procedures, indexes, and such – can have no more than 256 GB of main memory allocated to it. To be even more precise, Liu says that each database should have a memory pool that is tuned specifically for its data, meaning that you don't overallocate. You can run multiple databases on a single physical server, of course. Microsoft says that between 5 and 10 percent of a database is typically hot at enterprise shops, and that 256 GB limit implies a 2 TB database size, larger than most actual databases out there in the real world, according to Liu.

One last thing about Hekaton: the Analysis Migration and Reporting Tool for Hekaton, which Daugherty was showing off in a demo, can move tables, but it cannot move stored procedures automagically, although you can move them by hand. The automated migration of stored procedures and support for other database functions will come in a future release.

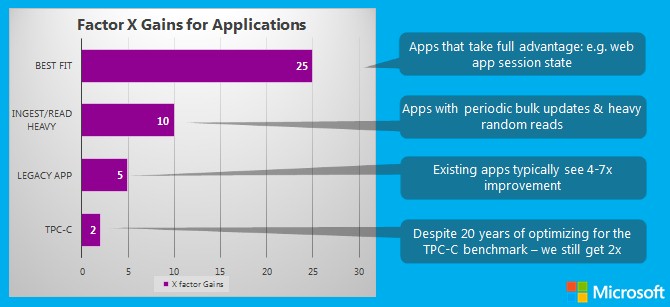

So what is the performance gain that customers can see from the Hekaton in-memory engine inside of SQL Server 2014? The answer is: it depends, and it depends on a lot of different things. Here are the results from early adopter customers:

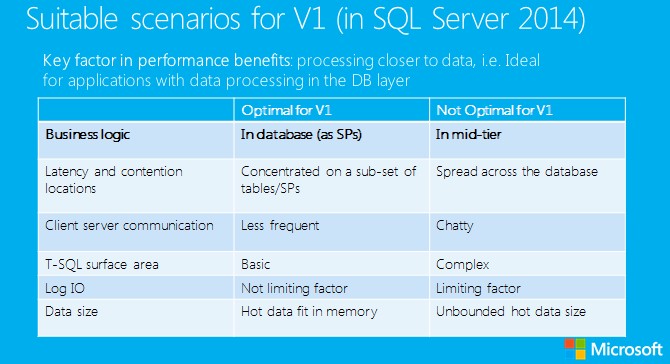

Liu says that the Hekaton engine can chew through SQL statements and find or update data something on the order of 20 to 40 times faster than the earlier SQL Server engine. But the upper layers of the database move slowly still and are actually impeding performance. (This will change in future releases.) Even with that, applications that have most of the logic outside of the database will see the smallest performance gains, while those that use stored procedures to encapsulate the application inside the database will see much larger improvements. A workload derived from the TPC-C online transaction processing test, which is decades old and optimized for disk-based databases, is probably the worst of all possible workloads for Hekaton acceleration, and as you can see from the chart above, even it runs twice as fast.

In a demo run by Daugherty, which simulated an online game store with around 1 million concurrent users per day browsing around the site, it took something on the order of 3.9 seconds to buy a game and 6.3 seconds to update the hotlist to recommend other games for each user. The system processed something on the order of 1,000 transactions per second. After several hot tables in SQL Server, identified by Microsoft's migration tool, were moved to Hekaton, transaction rates went well above 22,000 transactions per second and response times were significantly reduced. The purchase transaction took only 0.15 seconds (roughly a 25X improvement) and the recommendation hotlist updated in 0.7 seconds (a 9X improvement).
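The improvement factors follow directly from the before-and-after timings in the demo. A quick sketch of the arithmetic, using only the figures quoted above (the dictionary keys are just labels chosen for illustration):

```python
# Response times from the demo, in seconds: (before Hekaton, after Hekaton).
timings = {
    "purchase": (3.9, 0.15),
    "hotlist update": (6.3, 0.7),
}

# Speedup is simply the old response time divided by the new one.
speedups = {name: before / after for name, (before, after) in timings.items()}

# Throughput went from about 1,000 to more than 22,000 transactions per second.
throughput_gain = 22_000 / 1_000

for name, factor in speedups.items():
    print(f"{name}: about {factor:.0f}x faster")
print(f"throughput: at least {throughput_gain:.0f}x higher")
```

The purchase transaction comes out at about 26X from these rounded timings, consistent with the roughly 25X quoted, and the hotlist update at 9X.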

SQL Server 2014 also has a new feature for the old database engine called SSD Buffer Pool extension, which allows for the buffer pool in that engine to be dumped off to flash-based storage when the main memory gets constrained. This can be flash cards hanging off the PCI-Express bus or SSDs hanging off a disk controller. Hekaton does not use buffer pools, so this SSD offload can't help it, but on OLTP workloads it can help boost throughput by as much as a factor of three. It is not optimized for data warehousing workloads.

Microsoft is not changing its pricing for SQL Server 2014. It is exactly the same as SQL Server 2012, which was introduced with per-core pricing like that from IBM for DB2 and Oracle for 11g and 12c. SQL Server 2014 Enterprise Edition has the Hekaton in-memory processing engine. The SSD Buffer Pool extension is available in both SQL Server 2014 Standard Edition, which costs $1,793 per core, and Enterprise Edition, which costs $6,874 per core.