How Cool Is Your Data Center?

Do datacenters really need all that expensive but highly efficient equipment to control temperature and humidity? Increasingly, datacenter operators seem to be re-thinking the benefits of running hotter: saving money by eliminating precision cooling equipment, cutting power usage, and lowering the carbon footprint.

But members of the Green Grid Association feel that not enough datacenter managers or IT users are warming to the trend. Some of the reluctance seems to be due to inertia. Some standard operating procedures date back to the 1950s, even though the reasons they were first put in place are now a bit vague. There is evidence, for example, that the long-held practice of running IT equipment in rooms kept at 20°C to 22°C (68°F to 71.6°F) was first adopted to ensure that punch cards would remain usable.

Over the years, the equipment manufacturers have steadily improved their products to

expand their operating range. But many datacenter operators have been reluctant to

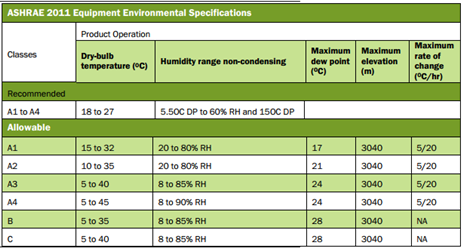

relax their practices because uptime is just too important. When an ASHRAE committee—including some equipment makers—updated its environmental guidelines for data centers in 2008, broadening the “Recommended” and “Allowable” temperature and moisture ranges for datacenters, the Green Grid Association found that most datacenter operators were reluctant to adopt the full allowable ranges.

Datacenter managers still have many concerns. Manufacturers, they say, are sometimes vague about equipment warranties and service. Exactly how much will performance degrade or failure rates increase under warmer temperatures? Will there be enough time to respond to cooling failures if the datacenter is already running at a higher temperature? How significant will the cost savings be? Will the savings from running hotter be offset by increasing costs elsewhere, such as increased maintenance, server fans running constantly, or the need to replace equipment more often?

Every datacenter manager knows, from anecdotal evidence, that servers in hot spots tend to fail more frequently. But there has been a lack of quantifiable data on the longevity of IT equipment operating at different temperatures, and datacenter managers were not in a position to quantify the risks themselves.

In 2011, ASHRAE updated its guidelines again, further broadening the temperature and humidity ranges for IT equipment in datacenters. So this time, Green Grid members decided to dig through the data themselves to put some numbers on the risks.

Green Grid member Steve Strutt, CTO for Cloud Computing at IBM UK and Ireland, with help from Green Grid members from Cisco, Thomson Reuters, and Colt Technology Services, looked at recently published ASHRAE data to explore the hypothesis that warmer data centers will prove more efficient and will not seriously affect reliability or server availability. The results have now been published in a white paper, “Data Center Efficiency and IT Equipment Reliability at Wider Operating Temperature and Humidity Ranges.”

Running hot

The data does indicate that temperature makes a difference, sometimes a significant one, depending primarily on the age and type of equipment; any facility other than a brand-new one is likely to house a range of both. A higher percentage of servers fail, for example, at higher temperatures. But the paper concludes that, with the right practices, data centers can still be run efficiently and at lower cost at higher temperatures, and it suggests ways that datacenter operators can determine how to get the most efficiency out of their facilities.

One study the Green Grid examined was a 2011 ASHRAE paper that analyzed reliability data from multiple hardware vendors. ASHRAE compared server failure rates at the standard 20°C with failure rates at other temperatures. Without revealing the exact failure rate, ASHRAE normalized the failure rate at the 20°C “norm” to 1.0. When servers were run continuously at 27°C, the relative failure rate rose to about 1.25. At 35°C, it was about 1.6.

Is that significant? The Green Grid paper puts it this way: if you have a datacenter with 1,000 servers, and an average of 10 of them undergo some kind of failure each year when operating at 20°C, you can expect about 16 failures a year at 35°C (10 × 1.6), or an additional 6 of the 1,000 servers, “a measurable impact on the availability of the population of servers,” according to the authors.
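The arithmetic behind that example is a simple scaling of a baseline failure count by the relative failure-rate factor. The sketch below assumes, as the example does, continuous operation at a single temperature; the factors are the approximate ASHRAE values quoted above, and the function name is hypothetical.

```python
# Approximate relative failure-rate factors quoted from the ASHRAE data,
# normalized so that continuous operation at 20°C = 1.0.
X_FACTOR = {17.5: 0.8, 20.0: 1.0, 27.0: 1.25, 35.0: 1.6}


def expected_failures(baseline_failures: float, temp_c: float) -> float:
    """Scale an annual failure count measured at 20°C by the relative
    failure-rate factor for continuous operation at temp_c."""
    return baseline_failures * X_FACTOR[temp_c]


baseline = 10  # failures per year among 1,000 servers at 20°C (the paper's example)
for t in sorted(X_FACTOR):
    total = expected_failures(baseline, t)
    print(f"{t:>5.1f}°C: {total:5.1f} failures/yr ({total - baseline:+.1f} vs. 20°C)")
```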

But that assumes continuous operation at the higher temperatures. In reality, the temperature of the data center will fluctuate, so it will be at the maximum temperatures used in the study only part of the time.

At times the temperature may drop below 20°C, where the failure rate decreases. At 17.5°C, for example, the relative failure rate drops to about 0.8. That suggests a way to offset the extra failures at the high end of the operating range. “Short-duration operation at up to 27°C could be balanced by longer-term operation at just below 20°C to theoretically maintain the normalized failure rate of 1,” the authors write. Good preventive maintenance would likely reduce that failure rate even further.
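One way to put numbers on that balancing idea is to treat the overall factor as a time-weighted average of the per-temperature factors and solve for how much time can be spent at the hotter setpoint. That averaging is an assumption made for illustration, not the paper's stated method, and the 17.5°C point is used only because it is the below-20°C value quoted above.

```python
def hot_time_fraction(x_hot: float, x_cool: float, target: float = 1.0) -> float:
    """Fraction of time at the hotter setpoint that keeps the time-weighted
    failure-rate factor at `target`.

    Assumes the overall factor is a simple time-weighted average and solves
    w * x_hot + (1 - w) * x_cool = target for w.
    """
    if not x_cool <= target <= x_hot:
        raise ValueError("target must lie between x_cool and x_hot")
    return (target - x_cool) / (x_hot - x_cool)


# Approximate ASHRAE factors: 27°C -> 1.25, 17.5°C -> 0.8
w = hot_time_fraction(x_hot=1.25, x_cool=0.8)
print(f"{w:.0%} of the time at 27°C, {1 - w:.0%} at 17.5°C")
```

Under this assumption, spending a little under half the year at 27°C and the rest at 17.5°C would keep the normalized failure rate at about 1.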

Still, the final impact of the increased failure rate comes down to each datacenter operator's practices, and the paper's analysis cannot quantify it in general terms. It notes, for example, that the definition of “fail” varies among operators. Some may define it as “any unplanned component failures (fault),” while others may count a failure as starting at the time of a predictive failure alert. That means datacenter operators must consider the ASHRAE data in the context of their own practices. Organizations with excellent change and maintenance processes will see less impact from increased failure rates. Similarly, highly virtualized and cloud environments may see no substantial impact from a higher failure rate.

Power consumption

The 2012 ASHRAE study also shows that as the temperature rises, servers consume more power. Server fans spin faster and run longer to cool CPUs and other components. Silicon leakage current in server components also rises with temperature. The leakage current increases linearly with temperature, but fan power increases faster. At the top of the allowable temperature ranges for the server classes tested, 35°C for Class A2 and 40°C for Class A3, the devices use up to 20% more power than at the 20°C baseline.
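The article does not say how fast fan power grows. A common rule of thumb not cited in the paper, the fan affinity laws, holds that a fan's power draw scales roughly with the cube of its speed, which is one plausible reason fan power outpaces a linear leakage term. The sketch illustrates only that rule; the function name is hypothetical and the speeds are arbitrary examples.

```python
def fan_power_ratio(speed_ratio: float) -> float:
    """Relative fan power for a relative fan speed, per the fan affinity
    law (power scales with the cube of speed)."""
    return speed_ratio ** 3


# Arbitrary example speeds relative to a baseline fan speed of 1.0
for speed in (1.0, 1.2, 1.5, 2.0):
    print(f"fan speed x{speed:.1f} -> fan power x{fan_power_ratio(speed):.2f}")
```

A 20% increase in fan speed thus costs roughly 73% more fan power, whereas a linear term grows by the same amount for each degree of temperature rise.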

Not all servers are alike, however. Newer servers handle the increase in inlet temperatures more efficiently. Servers from different vendors also show different levels of efficiency. 1U rack servers are less efficient because of their smaller size and higher fan speeds.

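Interestingly, although the servers use more power, the overall PUE goes down. PUE is the ratio of total facility power to IT equipment power, so extra power drawn by server fans raises the denominator as well as the numerator, and any cooling savings lower the numerator further. The Green Grid paper does not recommend using increased server power consumption to manipulate the PUE.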
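A small worked example shows why PUE can improve even when the facility draws more power in total. The facility numbers below are hypothetical and chosen only to make the arithmetic easy to follow; cooling and other overhead are assumed unchanged.

```python
def pue(it_kw: float, overhead_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    return (it_kw + overhead_kw) / it_kw


# Hypothetical facility; numbers are illustrative, not from the paper.
before = pue(it_kw=1000, overhead_kw=500)  # 1500 kW total / 1000 kW IT
# Server fans push the IT load up 10%; overhead is assumed unchanged.
after = pue(it_kw=1100, overhead_kw=500)   # 1600 kW total / 1100 kW IT
print(f"PUE {before:.2f} -> {after:.2f}, while total power rose from 1500 to 1600 kW")
```

The ratio falls from 1.50 to about 1.45 even though the facility consumes 100 kW more, which is why a lower PUE on its own does not show that running hotter saved energy.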

Server airflow also increases nonlinearly with temperature. Class A2 servers require up to 2.5 times the airflow they need at 20°C. Class A3 servers were not tested, but the assumption is that they will also be more efficient than A2 servers. This implies that data centers may need to be designed to deliver higher airflow volumes.
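One way to see why airflow climbs is the sensible-heat relation for air, P = ρ · V · c_p · ΔT: if the air passing through a server may only warm up to some fixed ceiling, a warmer inlet leaves a smaller ΔT, so more air is needed to carry the same heat. The fixed 45°C exhaust ceiling and the 500 W load below are hypothetical simplifications, not figures from the paper or from ASHRAE; real servers regulate fan speed against component temperatures.

```python
AIR_DENSITY = 1.2     # kg/m^3, approximate for air near room temperature
AIR_CP = 1005.0       # J/(kg*K), specific heat of air at constant pressure
M3S_TO_CFM = 2118.88  # cubic feet per minute per cubic metre per second


def required_airflow_cfm(heat_w: float, inlet_c: float, exhaust_limit_c: float) -> float:
    """Airflow needed to remove heat_w if air may warm only from inlet_c up to
    exhaust_limit_c (sensible heat: P = rho * V * cp * dT)."""
    delta_t = exhaust_limit_c - inlet_c
    if delta_t <= 0:
        raise ValueError("inlet temperature must be below the exhaust limit")
    volume_m3s = heat_w / (AIR_DENSITY * AIR_CP * delta_t)
    return volume_m3s * M3S_TO_CFM


# Hypothetical 500 W server with an assumed 45°C exhaust ceiling
for inlet in (20.0, 27.0, 35.0):
    print(f"inlet {inlet:.0f}°C: {required_airflow_cfm(500, inlet, 45.0):.0f} CFM")
```

Because the required flow is inversely proportional to ΔT, it rises faster and faster as the inlet temperature approaches the ceiling, consistent with the nonlinear growth described above.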

Finally, the paper suggests an approach to calculating the net change in overall reliability from allowing an increase in temperature, based on the data in the ASHRAE papers. The method is complex and depends on the datacenter's current efficiency, the type and brand of servers used, and other factors. But it purports to estimate the effect temperature will have on hardware failure rates and datacenter energy consumption, taking into account the datacenter's location and other factors.
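The paper's own method is not reproduced here. As a much simpler illustration of the idea of a net failure-rate change, the sketch weights the approximate ASHRAE factors by the hours a facility spends at each inlet temperature over a year. Linear interpolation between the quoted points, clamping outside 17.5°C to 35°C, and the hourly profile itself are all assumptions made for illustration.

```python
from bisect import bisect_left

# Approximate relative failure-rate factors from the ASHRAE data (20°C = 1.0).
POINTS = [(17.5, 0.8), (20.0, 1.0), (27.0, 1.25), (35.0, 1.6)]
TEMPS = [t for t, _ in POINTS]


def x_factor(temp_c: float) -> float:
    """Linearly interpolate the factor between data points; clamp outside them
    (an assumption: real factors beyond the data range are unknown here)."""
    if temp_c <= TEMPS[0]:
        return POINTS[0][1]
    if temp_c >= TEMPS[-1]:
        return POINTS[-1][1]
    i = bisect_left(TEMPS, temp_c)  # index of first data point >= temp_c
    (t0, x0), (t1, x1) = POINTS[i - 1], POINTS[i]
    return x0 + (x1 - x0) * (temp_c - t0) / (t1 - t0)


def net_x_factor(hours_by_temp: dict[float, float]) -> float:
    """Hours-weighted average failure-rate factor over a year's inlet temperatures."""
    total_hours = sum(hours_by_temp.values())
    weighted = sum(x_factor(t) * h for t, h in hours_by_temp.items())
    return weighted / total_hours


# Hypothetical annual profile (hours at each inlet temperature), not real site data.
profile = {17.5: 4000, 20.0: 2000, 24.0: 1760, 27.0: 1000}
print(f"net failure-rate factor: {net_x_factor(profile):.3f}")
```

A result below 1.0 would suggest that, under these assumptions, cooler hours more than offset the warmer ones; a real assessment would use the facility's measured temperature profile and its own definition of failure.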

The full white paper is available for download; its calculations and advice begin on page 25.