Tensor

Nvidia’s Speedy New Inference Engine Keeps BERT Latency Within a Millisecond

Disappointment abounds when your data scientists dial in the accuracy on deep learning models to a high degree but are then eventually forced to gut the model for inference ...Full Article

Nvidia, Google Tie in Second MLPerf Training ‘At-Scale’ Round

Results for the second round of the AI benchmarking suite known as MLPerf were published today with Google Cloud and Nvidia each picking up three wins in the at-scale ...Full Article

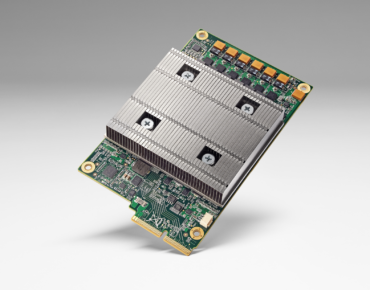

Google Pulls Back the Covers on Its First Machine Learning Chip

This week Google released a report detailing the design and performance characteristics of the Tensor Processing Unit (TPU), its custom ASIC for the inference phase of neural networks (NN). Google ...Full Article