Monster API Launches the ‘Airbnb of GPUs’ with $1.1M Pre-seed

(cybermagician/Shutterstock)

A new platform from a Palo Alto-based startup aims to level the AI development playing field. Monster API has launched a platform it claims to be the “Airbnb of GPUs” and has secured $1.1 million in pre-seed funding.

Generative AI has captured global attention, and applications are rapidly being developed for everything from content creation to code generation. Recent Gartner research found that by 2026, 75% of newly developed enterprise applications will incorporate AI- or ML-based models, up from less than 5% in 2023. However, developing AI applications with machine learning is an expensive and complex undertaking for many companies looking to join the AI boom.

But what if you could run the latest AI models on a cryptomining rig or an Xbox? That’s the question Monster API co-founder Gaurav Vij asked after facing huge AWS costs at his computer vision startup. His brother and co-founder Saurabh Vij, a former particle physicist at CERN, recognized the potential of distributed computing while contributing to projects like LHC@home and Folding@home.

Inspired by these experiences, the brothers say they sought to harness the computing power of consumer devices like PlayStation consoles, gaming PCs, and crypto mining rigs for training ML models. The company claims that after multiple iterations, the brothers successfully optimized these consumer GPUs for ML workloads, leading to a 90% reduction in Gaurav Vij's monthly cloud costs at his startup.

“We were jumping with excitement and felt we could help millions of developers just like us building in AI,” said Gaurav Vij in a release.

Monster API claims it gives developers access to the latest AI models, including Stable Diffusion, Whisper AI, LLaMA, and StableLM, through highly available REST APIs and a one-line command interface, with no complex setup involved. The platform also has a no-code fine-tuning solution in which users specify hyperparameters and datasets.

Monster API’s development stack consists of an optimization layer, a compute orchestrator, a “massive” GPU infrastructure, and pre-configured inference APIs. Additionally, Monster API says its containerized instances come pre-configured with CUDA, AI frameworks, and libraries for a seamless managed experience.

The company asserts that its decentralized computing approach gives developers on-demand access to tens of thousands of powerful GPUs, such as A100s, to train breakthrough models at substantially reduced cost. It also says the platform lets developers access popular foundation AI models at one-tenth the cost of traditional cloud providers like AWS. In one case, Monster API claims, an optimized version of the Whisper AI model running on its platform cost 90% less than running it on AWS.

“By 2030, AI will impact the lives of 8 billion people. With Monster API, our ultimate wish is to see developers unleash their genius and dazzle the universe by helping them bring their innovations to life in a matter of hours,” said Saurabh Vij. “We eliminate the need to worry about GPU infrastructure, containerization, setting up a Kubernetes cluster, and managing scalable API deployments as well as offering the benefits of lower costs.”

“One early customer has saved over $300,000 by shifting their ML workloads from AWS to Monster API's distributed GPU infrastructure,” Saurabh Vij continued. “This is the breakthrough developers have been waiting for: a platform that's not just highly affordable but it is also intuitive to use.”

Monster API bills by API call rather than by GPU time. Its APIs automatically scale to handle increased demand, and the decentralized GPU network enables geographic scaling, the company says.
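To make the difference between the two billing models concrete, here is a minimal sketch in Python. The function names and every rate in it are hypothetical and chosen purely for illustration; they are not Monster API's or AWS's actual prices, and the article does not describe how either provider computes a bill.

```python
# Illustrative comparison of two billing models.
# All names and numbers are hypothetical, not real provider pricing.

def gpu_time_cost(gpu_hours: float, hourly_rate: float) -> float:
    """Cost when paying for reserved GPU time, whether or not it is used."""
    return gpu_hours * hourly_rate


def per_call_cost(calls: int, price_per_call: float) -> float:
    """Cost when paying only for the API calls actually made."""
    return calls * price_per_call


def break_even_calls(gpu_hours: float, hourly_rate: float,
                     price_per_call: float) -> float:
    """Number of calls at which both billing models cost the same."""
    return gpu_time_cost(gpu_hours, hourly_rate) / price_per_call


if __name__ == "__main__":
    # Hypothetical workload: 10 GPU-hours reserved at 2.00 per hour,
    # versus 1,000 API calls at 0.01 per call.
    print("GPU-time bill:", gpu_time_cost(10, 2.0))
    print("Per-call bill:", per_call_cost(1000, 0.01))
    print("Break-even calls:", break_even_calls(10, 2.0, 0.01))
```

Under these made-up rates, per-call billing is cheaper for any workload below the break-even call count, which is the kind of trade-off a team with bursty or low-volume traffic would want to check against real prices.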

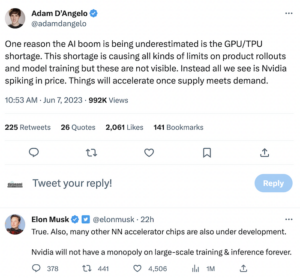

(Source: Twitter)

Access to decentralized GPUs could be appealing, as some have suggested there is a GPU shortage affecting AI adoption. Quora CEO Adam D’Angelo recently tweeted his opinion that the AI boom is being underestimated because of a GPU/TPU shortage.

“This shortage is causing all kinds of limits on product rollouts and model training, but these are not visible. Instead, all we see is Nvidia spiking in price. Things will accelerate once supply meets demand,” he wrote. Elon Musk agreed, adding that many other neural network accelerator chips are also under development.

In the meantime, Monster API says that by harnessing decentralized compute resources, it can provide developers with a scalable, globally accessible, and affordable platform for generative AI. The company is offering a free trial and subscription, and those interested in learning more or joining the developer community can get involved through the company’s Discord.

“Generative AI is one of the most powerful innovations of our time, with far-reaching impacts,” said Sai Supriya Sharath, Carya Venture Partners managing partner. “It’s very important that smaller companies, academic researchers, competitive software development teams, etc. also have the ability to harness it for societal good. Monster API is providing access to the ecosystem they need to thrive in this new world.”