Esperanto Works to Fill the Software Gap for RISC-V AI Servers

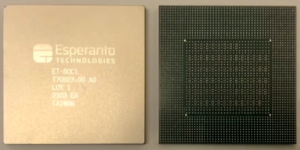

Esperanto Technologies is working to accelerate software support for its novel, high-performance AI chips. The company has ported a large language model (LLM) from Meta to its RISC-V-based ET-SoC-1, which includes 1,088 cores. This gives researchers the option to evaluate powerful LLMs on servers that are not based on x86 or Arm architectures.

RISC-V is an emerging architecture that can be licensed for free and which is noted for its ground-up design and minimalist approach. Apple and Nvidia include RISC-V controllers in their chips, and Qualcomm has talked about the architecture’s promise for mobile chips. Intel has helped create computing boards based on RISC-V, though it recently pulled financial support for the architecture as part of its cost-cutting effort. RISC-V cores are popular in microcontroller applications, where they are eroding the market share of aging Arm-based designs. Esperanto is among a handful of companies trying to push RISC-V into the high-performance computing space. The company was founded by Dave Ditzel, who previously founded Transmeta.

But RISC-V is facing an uphill battle moving up to performance applications such as PCs and servers, as a lack of software support has limited adoption. Getting the hardware is easy, but building the software and data around the hardware is a challenge, explained Roger Kay, principal analyst at Endpoint Technology Associates.

Now, Esperanto is working to fill that software gap with its homegrown AI tools.

The company has ported Meta’s Open Pre-Trained Transformer (OPT) model, which is considered roughly equivalent in size and performance to OpenAI’s GPT-3, to its chips. The model is designed to run on evaluation servers with 8 or 16 ET-SoC-1 cards that plug into PCIe slots, providing acceleration from up to roughly 17,000 cores in total.
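The "roughly 17,000 cores" figure follows from the per-chip core count. A minimal sketch of that arithmetic, assuming each card carries one ET-SoC-1 with the full 1,088 cores cited above (the names `CORES_PER_CARD` and `total_cores` are illustrative, not from Esperanto):

```python
# Rough core-count arithmetic for the 8- and 16-card evaluation servers.
# Assumption: each PCIe card holds one ET-SoC-1 contributing all 1,088 cores.
CORES_PER_CARD = 1_088


def total_cores(num_cards: int) -> int:
    """Total cores available across num_cards ET-SoC-1 PCIe cards."""
    return num_cards * CORES_PER_CARD


for cards in (8, 16):
    # Prints 8,704 cores for 8 cards and 17,408 cores for 16 cards.
    print(f"{cards} cards -> {total_cores(cards):,} cores")
```

The 16-card configuration works out to 17,408 cores, which is where the approximate 17,000 figure comes from.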

Esperanto's software will provide customers the tools needed to try a different AI hardware product, said Jim McGregor, principal analyst at Tirias Research. The servers will be used for inferencing, and customers will get a better sense of performance and power consumption, McGregor added.

Companies backing RISC-V have marketed the architecture as a low-power option for high-performance computing applications. AI is ruled by Nvidia, and some companies may be interested in checking out the power and performance provided by RISC-V, McGregor argued.

The experimental AI servers include dual Intel Xeon host processors, though further specifics were not available. Esperanto did not respond to requests for interviews with company representatives about the hardware and software. The servers will run all standard industrial AI models, and customers will have the "ability to bring their own models and data," the company said in a statement.

RISC-V is still many years away from being a viable alternative at scale to x86 or Arm in supercomputing or AI. Intel has reiterated that it will provide the ability to build RISC-V CPUs alongside GPUs in its chiplet designs, but that's quite a ways away. The European Processor Initiative (EPI) is funneling money to develop RISC-V chips through research centers like the Barcelona Supercomputing Centre (BSC). But SiPearl, which is building supercomputing chips for Europe’s first exascale supercomputers, says RISC-V isn’t ready for primetime.

Small chip companies are dropping like flies amid the funding crunch and a challenging economic environment, and are taking extra steps to make their chips attractive to hyperscalers and customers, McGregor said.

AI accelerators typically have matrix multipliers, and the ET-SoC-1 has vector and tensor instructions for AI. "The ET-SoC-1 chip is designed to compute at peak rates between 100 and 200 TOPS and to be able to run ML recommendation workloads while consuming less than 20 W of power," the company said in a slide deck presented at Hot Chips 33.
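The two figures in that quote can be combined into a rough efficiency estimate. The sketch below assumes, which the slide does not state explicitly, that the 100 to 200 TOPS peak range and the 20 W ceiling apply at the same time; under that assumption, dividing by the 20 W ceiling gives a lower bound on TOPS per watt. The function name `tops_per_watt` is illustrative only.

```python
# Implied energy-efficiency bounds from the Hot Chips 33 figures.
# Assumption: the peak TOPS range and the <20 W power figure hold simultaneously;
# the quoted slide does not say so, so treat the results as rough estimates.
PEAK_TOPS_RANGE = (100, 200)
POWER_CEILING_W = 20


def tops_per_watt(tops: float, watts: float) -> float:
    """Throughput per watt; higher means more efficient."""
    return tops / watts


for tops in PEAK_TOPS_RANGE:
    # Actual power is below the ceiling, so the true ratio would be at least this.
    ratio = tops_per_watt(tops, POWER_CEILING_W)
    print(f"{tops} TOPS at under {POWER_CEILING_W} W -> at least {ratio:.0f} TOPS/W")
```

Under that assumption the chip would land at 5 to 10 TOPS/W or better; if peak throughput actually requires more power, the real figure would be lower.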