IBM Introduces ‘Vela’ Cloud AI Supercomputer Powered by Intel, Nvidia

Nearly five years ago, Oak Ridge National Laboratory launched the IBM-built Summit supercomputer, powered by IBM and Nvidia hardware, to the top of the Top500 list. Now IBM, the erstwhile HPC juggernaut, is launching a new supercomputer that reflects the company’s shift in direction: Vela, an AI-focused, cloud-native supercomputer with Intel and Nvidia hardware.

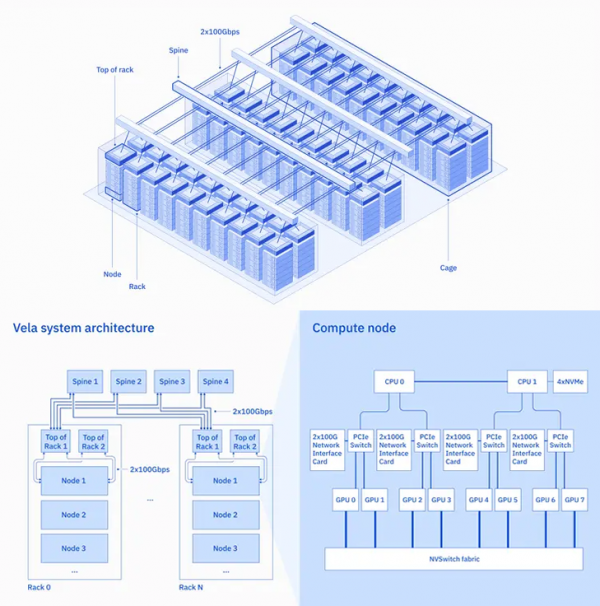

Specs first. Each of Vela’s nodes is equipped with dual Intel Xeon “Cascade Lake” CPUs (notably forgoing IBM’s own Power10 chips, introduced in 2021), eight Nvidia A100 (80GB) GPUs, 1.5TB of memory and four 3.2TB NVMe drives. In its blog post announcing the system, IBM said the nodes are networked via “multiple 100G network interfaces,” and that each node is connected to a different top-of-rack switch. Each of those switches is, in turn, connected to four different spine switches, ensuring both strong cross-rack bandwidth and insulation from component failure. Vela is “natively integrated” with IBM Cloud’s virtual private cloud (VPC) environment.

Vela, which has been online since May of last year, consists of 60 racks (per Forbes) and an unspecified number of nodes; however, one might be inclined to trust the above diagram – accurate in all other respects – and guess six nodes per rack, for a total of 360 nodes and 2,880 A100 GPUs.
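For readers who want to check that estimate, here is a minimal back-of-the-envelope sketch. The per-node figures are the ones quoted above; the six-nodes-per-rack value is only the guess read off the diagram, not a number IBM has confirmed, and the function and constant names are made up for illustration.

```python
# Back-of-the-envelope estimate of Vela's scale.
# Per-node figures come from the specs quoted in the article.
# NODES_PER_RACK is an ASSUMPTION read off the diagram, not an IBM figure.
RACKS = 60
NODES_PER_RACK = 6          # assumption, see note above
GPUS_PER_NODE = 8
GPU_MEMORY_GB = 80
DRAM_PER_NODE_TB = 1.5
NVME_DRIVES_PER_NODE = 4
NVME_DRIVE_TB = 3.2


def estimate_vela_totals(racks=RACKS, nodes_per_rack=NODES_PER_RACK):
    """Return estimated system-wide totals (decimal units, 1 TB = 1000 GB)."""
    nodes = racks * nodes_per_rack
    gpus = nodes * GPUS_PER_NODE
    return {
        "nodes": nodes,
        "gpus": gpus,
        "gpu_memory_tb": gpus * GPU_MEMORY_GB / 1000,
        "dram_tb": nodes * DRAM_PER_NODE_TB,
        "nvme_tb": nodes * NVME_DRIVES_PER_NODE * NVME_DRIVE_TB,
    }


if __name__ == "__main__":
    for name, value in estimate_vela_totals().items():
        print(f"{name}: {value:,.1f}")
```

Under the six-per-rack assumption this reproduces the 360 nodes and 2,880 GPUs above, and implies roughly 230TB of aggregate GPU memory, 540TB of DRAM and about 4.6PB of NVMe. Passing a different `nodes_per_rack` shows how sensitive those totals are to the guess.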

IBM designed Vela with AI firmly in mind – and, in particular, the development of foundation models, which IBM describes as “AI models trained on a broad set of unlabeled data that can be used for many different tasks.” To that end, the company opted for generous memory at every level: the larger-memory (80GB) variant of the A100, plus 1.5TB of DRAM and 12.8TB of NVMe storage per node, all suited for caching AI training data and related tasks.

Interestingly, IBM chose to enable virtual machine (VM) configuration on Vela, arguing that while bare metal is preferred for AI performance, VMs provide more flexibility. To mitigate the performance penalty, IBM says that it “devised a way to expose all of the capabilities on the node … into the VM,” reducing virtualization overhead to less than 5%.

In the announcement, IBM also made several pointed remarks vis-a-vis the elements of traditional HPC it was eschewing with Vela. The authors wrote that “traditional supercomputers” with elements like high-performance networking hardware “weren’t designed for AI; they were designed to perform well on modeling or simulation tasks, like those defined by the U.S. national laboratories[.]” The authors even called out Microsoft’s Azure AI supercomputer, built for OpenAI, as an example of a “traditional design” that drives “technology choices that increase cost and limit deployment flexibility.”

“Given our desire to operate Vela as part of a cloud, building a separate InfiniBand-like network – just for this system – would defeat the purpose of this exercise,” the authors explained. “We needed to stick to standard Ethernet-based networking that typically gets deployed in a cloud.”

For now, IBM is only offering Vela to the IBM Research community, with the company describing the system as its new “go-to environment” for IBM researchers working on AI. However, IBM also hinted that Vela is a proof of concept for a larger deployment plan.

“While this work was done with an eye towards delivering performance and flexibility for large-scale AI workloads, the infrastructure was designed to be deployable in any of our worldwide data centers at any scale,” the authors wrote. And: “While the work was done in the context of a public cloud, the architecture could also be adopted for on-premises AI system design.”