Intel Officially Launches Sapphire Rapids with Built-in Acceleration

Intel's Sapphire Rapids chip. Image courtesy of Intel.

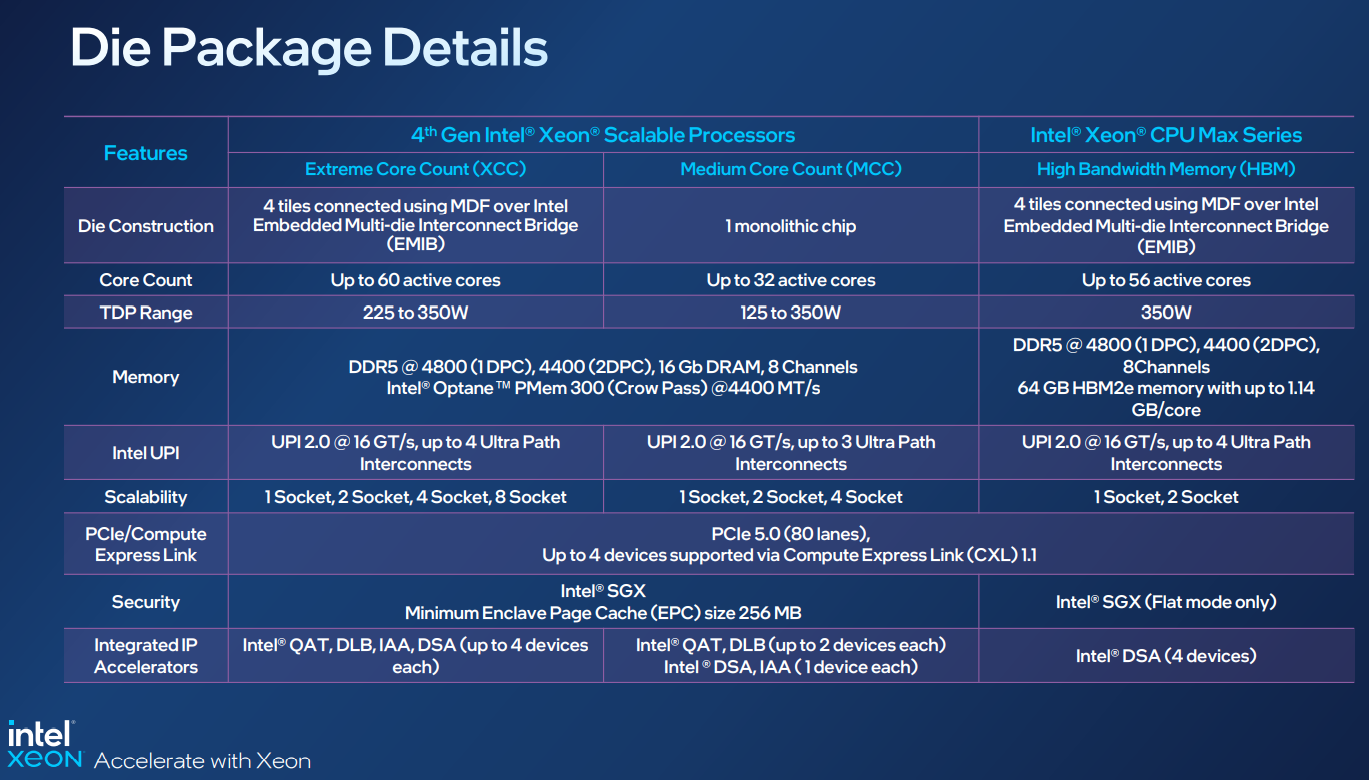

After a number of delays, Intel has launched its fourth-generation Intel Xeon Scalable processor, codenamed Sapphire Rapids, the successor to Ice Lake. Manufactured on the Intel 7 node (formerly known as 10nm) and sporting up to 60 Golden Cove cores per processor plus new dedicated accelerator engines, the platform offers a 1.53x average performance gain over the prior generation and a 2.9x average performance-per-watt improvement for targeted workloads using the new accelerators, according to Intel.

The launch, held today as a global livestreamed watch party, also included the recently remonikered Max series CPU and GPU, which were previously called “Sapphire Rapids HBM” and “Ponte Vecchio,” respectively.

The Sapphire Rapids family includes 52 SKUs (see chart) grouped across 10 segments, inclusive of the Max series: 11 are optimized for 2-socket performance (8 to 56 cores, 150-350 watts), 7 for 2-socket mainline performance (12 to 36 cores, 150-300 watts), 10 target four- and eight-socket systems (8 to 60 cores, 195-350 watts), and there are 3 single-socket optimized parts (8 to 32 cores, 125-250 watts). There are also SKUs optimized for cloud, networking, storage, media and other workloads.

The lineup for the “HPC Optimized” Xeon Max series SKUs includes 32-, 40-, 48-, 52- and 56-core versions. All five of these 2-socket parts top out at 350 watts, and list pricing runs from $7,995 for the 32-core 9462 to $12,980 for the 56-core 9480. Two SKUs are more expensive than the 9480 Max series part: the 60-core 8490H, which runs a cool $17,000, and the 48-core 8468H at $13,923.
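For readers comparing these parts, the list prices above can be reduced to a rough dollars-per-core figure. The short Python sketch below does that arithmetic using only the core counts and prices quoted in the preceding paragraph; the labels and the `price_per_core` helper are illustrative names, and list price per core is only a crude yardstick that ignores clock speeds, HBM, and socket scalability.

```python
# Core counts and list prices (USD) as quoted in the paragraph above.
# Labels are illustrative shorthand, not official product names.
skus = [
    ("Max 9462", 32, 7_995),
    ("Max 9480", 56, 12_980),
    ("60-core 8490H", 60, 17_000),
    ("48-core H-series part", 48, 13_923),
]


def price_per_core(cores, list_price_usd):
    """Return list price divided by core count."""
    return list_price_usd / cores


# Map each label to its price per core, rounded to cents.
per_core = {
    label: round(price_per_core(cores, price), 2)
    for label, cores, price in skus
}

# Print from cheapest to most expensive per core.
for label, value in sorted(per_core.items(), key=lambda item: item[1]):
    print(f"{label}: ${value:,.2f} per core")
```

By this measure the 56-core Max 9480 comes out lowest per core of the four, while the two socket-scalable parts come out highest.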

At a press event in Hillsboro, Oregon, last month, Intel Senior Fellow Ronak Singhal referenced the wide span of SKUs, saying: “Customers will say you guys have too many SKUs, can you guys reduce the number of SKUs, but can you add these three SKUs that are really, really important? So we have this push and pull with our customers.”

New capabilities in fourth-gen Intel Xeon Scalable processors include PCIe 5.0, DDR5 memory, and support for CXL 1.1.

Intel has introduced new Advanced Matrix Extensions (AMX) in Sapphire Rapids for acceleration of AI workloads, such as recommendation systems, NLP, and image recognition. In company testing, Sapphire Rapids parts achieved up to 10x higher PyTorch performance for real-time inference and training using AMX's BF16 processing compared with using FP32 on previous generation chips.
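For readers who want to check whether a given Linux machine exposes AMX, a minimal Python sketch follows. It assumes the kernel reports the feature through the `amx_tile`, `amx_bf16` and `amx_int8` flags in `/proc/cpuinfo`; the helper names are hypothetical, and the check says nothing about whether a particular framework build actually uses AMX.

```python
# CPU feature flags that Linux reports for AMX-capable processors
# (assumed here to be the relevant set for Sapphire Rapids).
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags_present(cpuinfo_text):
    """Return the AMX flags found in the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            return AMX_FLAGS & set(value.split())
    return set()


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read the cpuinfo file, returning an empty string if it is unavailable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return handle.read()
    except OSError:
        return ""


if __name__ == "__main__":
    found = amx_flags_present(read_cpuinfo())
    if found:
        print("AMX flags reported:", ", ".join(sorted(found)))
    else:
        print("No AMX flags reported on this system.")
```

On systems without `/proc/cpuinfo` (for example, non-Linux hosts), the sketch simply reports that no AMX flags were found.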

The 56-core 8480+ top-of-bin two-socket (non-HBM) part – with 40% more cores than its Ice Lake counterpart – achieved gen-over-gen performance uplifts across a number of benchmarks, delivering a 1.5x improvement on Stream Triad, a 1.4x improvement for HPL and a 1.6x improvement on HPCG. Intel testing across a dozen-plus real-world applications (including WRF, Black Scholes, Monte Carlo and OpenFoam) showed similar speedups, with the greatest gain for a physics workload, CosmoFlow (2.6x).

The Max series CPU is the first x86 processor with integrated High Bandwidth Memory. It offers a 3.7x gain in performance for memory-bound workloads, according to Intel, and requires 68 percent less energy than “deployed competitive systems.” On the AlphaFold2 application, the Xeon Max CPU showed a 3x speedup over the Ice Lake processor in Intel testing. Notable for HPC benchmark watchers, the Max series processor achieves a nearly 2.4x speedup on HPCG and a 3.5x speedup for Stream Triad, compared with the DDR-only Sapphire Rapids equivalent. The HBM in the Max series CPU offered no performance improvement for the High Performance Linpack benchmark.

The Max series “Ponte Vecchio” GPU, also launched today, contains over 100 billion transistors in a 47-tile package with up to 128 Xe HPC cores. Depending on the form factor, it supports up to 128GB of HBM2e memory and delivers up to 52 peak FP64 teraflops. Combining the Max series GPU with the Max series CPU platform (in a three-to-one GPU:CPU ratio) offers a 12.9x performance boost for LAMMPS molecular dynamics workloads, compared with an Ice Lake platform without GPUs, according to benchmarking conducted by Intel. The addition of Max GPUs (six GPUs added to a 2-CPU server) translated into a 9.9x boost versus a Max series CPU-only platform for the same workload. The high bandwidth memory on the host CPUs enabled a 1.55x performance improvement compared with using DDR5 only.

Built-in acceleration and new licensing options

Sapphire Rapids introduces four new dedicated accelerators (in addition to AVX-512, which debuted with the Xeon Phi “Knights Landing” product in 2016):

Intel Advanced Matrix Extensions (Intel AMX) accelerates deep learning (DL) inference and training workloads, such as natural language processing (NLP), recommendation systems, and image recognition.

Intel Data Streaming Accelerator (Intel DSA) drives high performance for storage, networking, and data-intensive workloads by improving streaming data movement and transformation operations.

Intel In-Memory Analytics Accelerator (Intel IAA) improves analytics performance while offloading tasks from CPU cores to accelerate database query throughput and other workloads.

Intel Dynamic Load Balancer (Intel DLB) provides efficient hardware-based load balancing by dynamically distributing network data across multiple CPU cores as the system load varies.

With a new service called Intel On Demand (formerly referred to as software-defined silicon, SDSi), customers will have the option to have some of these accelerators turned on or upgraded post-purchase. “On Demand will give end customers the flexibility to choose fully featured premium SKUs or the opportunity to add features at any time throughout the lifecycle of the Xeon processor,” Intel stated. Pricing will vary depending on the license model. On Demand currently applies to the following features: Intel Dynamic Load Balancer, Intel Data Streaming Accelerator, Intel In-Memory Analytics Accelerator, Intel QuickAssist Technology and Intel Software Guard Extensions. Note that the Max series CPUs and the socket-scalable (-H tagged) SKUs do not have On Demand capability, nor does the 8-core single-socket part (3408U).

Sapphire Rapids ecosystem partners include AWS, Cisco, Dell Technologies, Fujitsu, Google Cloud, HPE, IBM Cloud, Inspur, Lenovo, Microsoft Azure, Nvidia, Oracle, Supermicro, VMware and others. Intel reports more than 30 Max series CPU system designs are coming to market and 15 system designs based on the Max series GPU are also in development.

A version of this article first appeared on HPCwire.

With over a decade’s experience covering the HPC space, Tiffany Trader is one of the preeminent voices reporting on advanced scale computing today.