Nvidia Introduces New Ada Lovelace GPU Architecture, OVX Systems, Omniverse Cloud

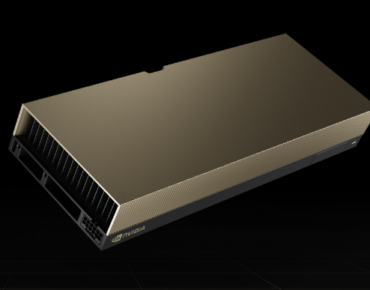

Ada Lovelace L40 GPU

In his GTC keynote today, Nvidia CEO Jensen Huang launched another new Nvidia GPU architecture: Ada Lovelace, named for the legendary mathematician regarded as the first computer programmer. The company also announced two GPUs based on the Ada Lovelace architecture – the workstation-focused RTX 6000 and the datacenter-focused L40 – along with the Omniverse-focused, L40-powered, second-generation OVX system. Finally, Nvidia announced the launch of Omniverse Cloud, a new software- and infrastructure-as-a-service offering.

Ada Lovelace

Ada Lovelace is not a subset of Nvidia’s Hopper GPU architecture (announced just six months prior), nor is it truly a successor – instead, Ada Lovelace is to graphics workloads as Hopper is to AI and HPC workloads. “If you’re looking for the best for deep learning, for AI, that’s Hopper,” said Bob Pette, Nvidia’s vice president for professional visualization. “The L40 is great at AI, but not as fast as Hopper, and it’s not meant to be. It’s meant to be the best universal GPU and – more importantly – the best graphics GPU that’s out there.”

“Ada Lovelace is indeed an entirely different architecture,” Pette stressed. “And this is a little unique for us. [We] very quickly went from Ampere, to Ampere and Hopper, to Ampere and Hopper and Ada Lovelace, and we continue to try to move things fast, but please don’t confuse that this is a variant of Hopper. It was designed from the ground up.”

The “Ada-generation” RTX 6000 and the L40 share the same 608mm² die and use Nvidia’s 4nm custom process via TSMC (Hopper, for reference, also uses Nvidia’s 4nm custom process). The new Ada Lovelace GPUs boast 76.3 billion transistors, 18,176 next-gen CUDA Cores, 568 fourth-gen Tensor Cores, 142 third-gen RT Cores and 48GB of GDDR6 memory. While both have a maximum power consumption of 300W, the RTX 6000 uses active fan cooling and the L40 uses passive cooling; the L40 also supports secure boot with root of trust. Both are dual-slot PCIe cards and do not leverage NVLink. Nvidia said that the RTX 6000 would be available in a couple of months from channel partners, with wider availability from OEMs late this year into early next year to align with developments elsewhere in the industry. L40 availability is anticipated to begin in December.

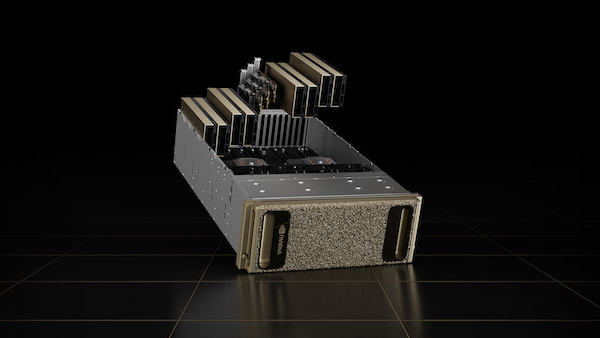

OVX

Huang also announced the second generation of Nvidia’s OVX computing system for Omniverse: “Today, we’re announcing the OVX computer with the new Ada Lovelace L40 datacenter GPU,” he said in his keynote. “With a massive 48GB frame buffer, OVX, with eight L40s, will be able to process giant Omniverse virtual world simulations.”

Nvidia launched the first iteration of the OVX system in March, touting it as purpose-built for running digital twin simulations within the Omniverse Enterprise 3D simulation and design platform. The second-generation OVX system features an updated GPU architecture and enhanced networking technology.

Each OVX server node is powered by eight Ada Lovelace L40 GPUs, dual Intel Ice Lake 8362 CPUs, three Nvidia ConnectX-7 400Gb/s SmartNICs, and 16TB of NVMe storage. Nvidia estimates the second-generation OVX systems will be available by early 2023 from Inspur, Lenovo, and Supermicro, with Gigabyte, H3C and QCT offering them in the future.
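To put the per-node figures in perspective, the short Python sketch below simply multiplies out the numbers quoted above. The class and field names are illustrative, not anything Nvidia publishes, and the totals are nominal sums: since the L40 does not use NVLink, the GPU memory should not be read as a single unified pool, and the network figure is a peak rather than a measured throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OvxNode:
    """Per-node figures quoted in the article (names are illustrative)."""
    gpus: int = 8                   # L40 GPUs per server node
    gpu_memory_gb: int = 48         # GDDR6 per L40
    nics: int = 3                   # ConnectX-7 SmartNICs per node
    nic_bandwidth_gbps: int = 400   # peak bandwidth per SmartNIC
    nvme_tb: int = 16               # NVMe storage per node

    @property
    def total_gpu_memory_gb(self) -> int:
        # Nominal sum across GPUs, not a shared memory pool.
        return self.gpus * self.gpu_memory_gb

    @property
    def total_nic_bandwidth_gbps(self) -> int:
        # Peak aggregate across all SmartNICs in the node.
        return self.nics * self.nic_bandwidth_gbps


node = OvxNode()
print(f"GPU memory per node: {node.total_gpu_memory_gb} GB")
print(f"Peak network bandwidth per node: {node.total_nic_bandwidth_gbps} Gb/s")
```

Under these figures, a node carries 384GB of GPU memory in total and up to 1,200Gb/s of peak network bandwidth.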

Nvidia says the L40 GPUs will deliver advanced capabilities to Omniverse workloads, including accelerated ray-traced and path-traced rendering of materials, physically accurate simulations and photorealistic 3D synthetic data generation.

In addition to new GPUs, the OVX system has received a networking upgrade. Its ConnectX-7 SmartNICs can provide up to 400Gb/s of total bandwidth and include support for 200G networking on each port with in-line data encryption. The company says this upgrade from the previous ConnectX-6 Dx 200Gb/s NICs will provide enhanced network and storage performance for the precise timing synchronization needed to power digital twin simulations.

OVX is also built to scale, from a single pod of eight OVX servers up to a SuperPOD of 32 servers connected by Nvidia’s Spectrum-3 Ethernet platform.
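As a rough illustration of what those two scale points mean in GPU terms, the sketch below counts GPUs and nominal GPU memory for a pod and a SuperPOD. It assumes every server is the eight-GPU, 48GB-per-GPU node described earlier; the function and constant names are hypothetical, and the memory totals are simple sums rather than a shared pool.

```python
GPUS_PER_SERVER = 8    # L40 GPUs per OVX server node (from the article)
GPU_MEMORY_GB = 48     # GDDR6 per L40 (from the article)


def cluster_totals(servers: int) -> dict:
    """Return nominal GPU count and summed GPU memory for a group of servers."""
    gpus = servers * GPUS_PER_SERVER
    return {
        "servers": servers,
        "gpus": gpus,
        "gpu_memory_gb": gpus * GPU_MEMORY_GB,
    }


pod = cluster_totals(8)         # single pod of eight servers
superpod = cluster_totals(32)   # SuperPOD of 32 servers

for name, totals in (("Pod", pod), ("SuperPOD", superpod)):
    print(f"{name}: {totals['gpus']} GPUs, {totals['gpu_memory_gb']} GB GPU memory")
```

On these assumptions, a pod works out to 64 L40s and a SuperPOD to 256.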

“In the case of OVX, we do optimize it for digital twins from a sizing standpoint, but I want to be clear that it can be virtualized. It can support multiple users, multiple desktops, or Omniverse create apps or view apps. It’s very fungible,” said Pette.

Among the first customers to receive OVX systems are automakers BMW Group and Jaguar Land Rover.

“Planning our factories of the future starts with building state-of-the-art digital twins using Nvidia Omniverse,” said Jürgen Wittmann, head of innovation and virtual production at BMW Group. “Using Nvidia OVX systems to run our digital twin workloads will provide the performance and scale needed to develop large-scale photorealistic models of our factories and conduct true-to-reality simulations that will transform our manufacturing, design and production processes.”

“Nvidia OVX and DRIVE Sim deliver a powerful platform that enables us to simulate a wide range of real-world driving scenarios to safely and efficiently test our next generation of connected and autonomous vehicles as well as to recreate the customer journey to demonstrate vehicle features and functions,” said Alex Heslop, director of electrical, electronic and software engineering at Jaguar Land Rover. “Using this technology to generate large volumes of high-fidelity, physically accurate scenarios in a scalable, cost-efficient manner will accelerate our progress towards our goal of a future with zero accidents and less congestion.”

In May, Nvidia unveiled an Arm-based OVX blueprint system employing a Grace Superchip alongside four GPUs and a BlueField-3 DPU. In an email to HPCwire, Nvidia confirmed that the Grace Superchip-powered OVX model is still on track.

Omniverse Cloud

Nvidia also announced its first software- and infrastructure-as-a-service offering, Nvidia Omniverse Cloud, which it calls a comprehensive suite of cloud services for artists, developers and enterprise teams to design, publish, operate, and experience metaverse applications anywhere. With Omniverse Cloud, users can collaborate on 3D workflows without the need for local compute power.

Omniverse Cloud’s services run on the Omniverse Cloud Computer, a computing system consisting of Nvidia OVX for graphics and physics simulation, Nvidia HGX for AI workloads, and the Nvidia Graphics Delivery Network, a distributed datacenter edge network.

Examples of new Omniverse Cloud services include Omniverse App Streaming, a service for users without RTX GPUs to stream Omniverse applications such as Omniverse Create or the reviews and approvals app Omniverse View. There is also Omniverse Replicator, a 3D synthetic data generator for researchers, developers, and enterprises that integrates with Nvidia’s AI cloud services. Other cloud services include Omniverse Nucleus Cloud, Omniverse Farm, Nvidia Isaac Sim, and Nvidia DRIVE Sim.

Early supporters of Omniverse Cloud include marketing services firm WPP, Siemens, and RIMAC Group, part of the newly formed Bugatti Rimac company.

Omniverse Farm, Replicator, and Isaac Sim containers are available today on Nvidia NGC for self-service deployment on AWS using Amazon EC2 G5 instances. Omniverse Cloud will also be available as Nvidia-managed services, with early access by application.