Nvidia Adds Liquid-cooled PCIe Cards to Server GPU Lineup


Nvidia is bringing liquid cooling, which it has typically paired with GPUs in high-performance computing systems, to its mainstream server GPU portfolio.

Later this year, the company will start shipping its A100 PCIe Liquid Cooled GPU for servers, which is based on the Ampere architecture. A liquid-cooled PCIe GPU based on the company's new Hopper architecture will ship early next year.

The new A100 PCIe GPU has the same hardware as the conventional air-cooled A100 PCIe version, but it adds direct-to-chip liquid cooling. The GPU fits into a single PCIe slot and is half the width of the air-cooled version, which requires two slots.

Like the air-cooled version, the new liquid-cooled form factor has 80GB of GPU memory and memory bandwidth of 2 terabytes per second, and it maintains a TDP of 350 watts. It offers double-precision floating-point performance of 9.7 teraflops and single-precision floating-point performance, which is more relevant to AI calculations, of 19.5 teraflops.

About "40 percent of the energy used by datacenters goes to cooling and one of the directions the industry is moving [toward] for energy efficient cooling is liquid," said Paresh Kharya, senior director of product management for accelerated computing at Nvidia, during a press briefing.

Kharya said datacenters consume 1 percent of the world's electricity, and that energy efficiency was a major consideration for the company when introducing products. He said GPUs are more power-efficient than CPUs for workloads, such as AI, that need acceleration.

“Even the mainstream enterprise datacenters are looking at options for liquid cooling in datacenter infrastructures,” Kharya said.

But GPUs in general draw a lot of power, and rather than focusing on power efficiency at the chip level, Nvidia is putting liquid cooling directly on top of the GPU.

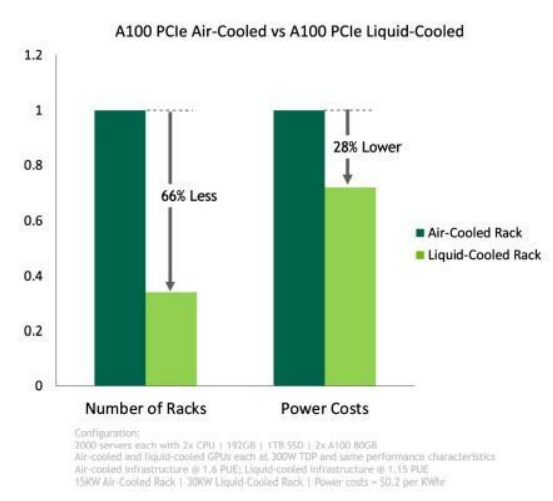

Switching from air-cooled A100 PCIe to liquid-cooled A100 PCIe configurations can reduce rack space by up to 66 percent and lower power consumption by 30 percent, an Nvidia spokeswoman said.

Rack space is saved by eliminating the need for a heatsink, which lets the liquid-cooled A100 GPUs use just one PCIe slot where air-cooled GPUs fill two, Kharya said.

The ability to pack more GPUs into a rack, combined with a better cooling mechanism, helps servers run more workloads and sustain higher output, said Kevin Krewell, principal analyst at Tirias Research.

“Water cooling is more efficient transferring heat away from the card. It also allows for a single slot card, while air cooled are double width. There is more plumbing required, but there are a number of rack solutions,” Krewell said.

About a dozen server makers, including Asus, Foxconn, Gigabyte, Inventec and Supermicro, plan to ship liquid-cooled PCIe GPU systems later this year.

Nvidia also said its liquid-cooled server platform based on the Hopper architecture, called HGX H100, will ship in the fourth quarter of this year.

“Our HGX A100 with liquid-cooled options using the A100 SXM form factor have been in production from Nvidia partners for some time. The newly announced A100 PCIe Liquid Cooled GPU is sampling now and partners will be offering qualified mainstream servers later this year,” an Nvidia spokeswoman said.

The chipmaker, best known for its GPUs, is now positioning itself as a one-stop shop for AI products spanning hardware, software and services. The company offers software suites and programming frameworks targeted at verticals such as automotive, manufacturing, health care, security and robotics. The software is designed to run fastest on its homegrown GPUs and servers.

Nvidia also offers what it calls "AI factories," in which its homegrown supercomputers solve AI problems and produce customized products for companies.

Nvidia announced the liquid-cooled GPUs at the Computex trade show in Taipei. The company also announced that hardware companies will start shipping systems based on its Arm-based Grace CPU early next year.


With over a decade’s experience covering the HPC space, Tiffany Trader is one of the preeminent voices reporting on advanced scale computing today.