Inspur Information Announces Seven Titles in MLPerf Training v1.1

SAN JOSE, Calif., Dec. 6, 2021 -- MLCommons, the open engineering consortium, released its latest MLPerf Training v1.1 results. Inspur Information submitted results for its NF5488A5 and NF5688M6 servers in all eight single-node closed division tasks and won seven of them.

MLPerf, established by MLCommons, is an AI performance benchmark that has become an important reference for customers purchasing AI solutions. Fourteen organizations took part in Training v1.1, submitting results for 180 closed division tasks and 6 open division tasks.

The closed division is highly competitive because every submission must use the same reference models, which allows an objective, apples-to-apples comparison. The benchmarks cover eight representative machine learning tasks, including Image Classification (ResNet50), Medical Image Segmentation (U-Net 3D), and Natural Language Processing (BERT).

Inspur’s NF5688M6 was the top performer in four tasks: Natural Language Processing (BERT), Object Detection Heavy-Weight (Mask R-CNN), Recommendation (DLRM), and Medical Image Segmentation (U-Net 3D). The NF5488A5 was the top performer in three tasks: Image Classification (ResNet50), Object Detection Light-Weight (SSD), and Speech Recognition (RNNT).

A full stack AI solution leads to AI training speed breakthroughs

Inspur AI servers led single-node performance in MLPerf Training v1.1 thanks to Inspur's software and hardware optimizations. Compared with Training v1.0, Inspur’s AI training speed increased by 18% in Medical Image Segmentation, 14% in Speech Recognition, 11% in Recommendation, and 8% in Natural Language Processing.

At these speeds, Inspur AI servers can process 12,600 images per second in SSD, 8,000 speech samples per second in RNNT, or 27,400 images per second in ResNet50.

For ResNet50, Inspur optimized image pre-processing, used the NVIDIA DALI framework, and ran image decoding on the GPU to avoid CPU bottlenecks. These ongoing optimizations have kept Inspur at the top of the ResNet50 rankings for the last three benchmark rounds.

Inspur attributes its leading performance in the MLPerf benchmarks to its system design and full-stack AI optimizations. At the hardware level, the Inspur PCIe Retimer Free design provides high-speed interconnection between the CPU and GPU, so that I/O transfers during AI training run without bottlenecks and efficiency improves. For high-load, multi-GPU collaborative task scheduling, data transfer between NUMA nodes and GPUs is optimized and calibrated so that data I/O in training tasks is never blocked. Inspur uses A100-SXM-80GB (500W) GPUs, the highest-powered GPUs in the industry. To support them, Inspur developed an advanced cold plate liquid cooling system that keeps these high-power GPUs running stably at full power, guaranteeing full system performance.

MLPerf 2021 officially concludes with Inspur Information winning 44 titles

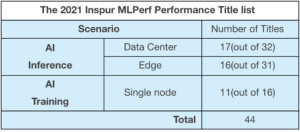

The MLPerf Training v1.1 results mark the conclusion of MLPerf 2021. By winning 44 titles in 2021, Inspur showcased its leading AI training and inference performance.

Inspur's NF5488A5 and NF5688M6 AI servers and its NE5260M5 edge server won 18, 15, and 11 titles, respectively.

The NF5488A5 is one of the first A100 servers to be launched. It supports eight A100 GPUs interconnected by third-generation NVLink and two AMD Milan CPUs in a 4U chassis, and it supports both liquid and air cooling.

The NF5688M6 is an AI server optimized for large-scale data centers with extreme scalability. It supports eight A100 GPUs, two Intel Ice Lake CPUs, and up to 13 PCIe Gen4 I/O expansion cards.

The NE5260M5 can be configured with a variety of high-performance CPUs and AI accelerator cards. Its 430 mm chassis depth is half that of a typical server. Combined with vibration and noise reduction optimizations and rigorous reliability testing, this compact design makes it ideal for edge computing.

About Inspur Information

Inspur Information is a leading provider of data center infrastructure, cloud computing, and AI solutions, and is one of the top two server manufacturers worldwide. Through engineering and innovation, Inspur Information delivers cutting-edge computing hardware design and extensive product offerings to address important technology segments such as open computing, cloud data centers, AI, and deep learning. Performance-optimized and purpose-built, our world-class solutions empower customers to tackle real-world challenges and custom workloads. To learn more, please go to https://www.inspursystems.com.

Source: Inspur Information