Cerebras Systems Expanding its Wafer-Scale Computing Tech with $250M in New Funding

Wafer-scale compute vendor Cerebras Systems has raised another $250 million in funding – this time in a Series F round that brings its total funding to about $720 million.

The latest cash infusion was led by Alpha Wave Ventures and Abu Dhabi Growth Fund (ADG), with participation from Altimeter Capital, Benchmark Capital, Coatue Management, Eclipse Ventures, Moore Strategic Ventures and VY Capital, according to the company.

The money will drive the company’s next global market moves as it seeks to bring its wafer-scale technologies to more enterprise and scientific users in their AI pursuits. The company says it will use the cash to expand its business, hire more designers and engineers, and continue to develop its AI product pipeline. Cerebras said it hopes to expand its workforce to 600 by the end of 2022, up from almost 400 today.

“The Cerebras team and our extraordinary customers have achieved incredible technological breakthroughs that are transforming AI, making possible what was previously unimaginable,” Andrew Feldman, the CEO and co-founder of Cerebras Systems, said in a statement. “This new funding allows us to extend our global leadership to new regions, democratizing AI, and ushering in the next era of high-performance AI compute to help solve today’s most urgent societal challenges – across drug discovery, climate change, and much more.”

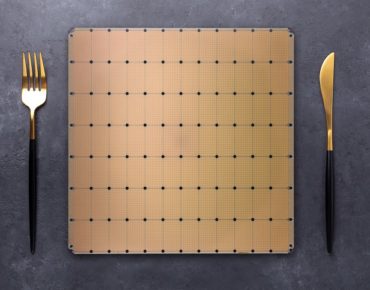

Cerebras debuted its first wafer-scale chip, which was the largest chip ever built, in August of 2019 as a 46,225 square millimeter silicon wafer packing 1.2 trillion transistors. Cerebras said the dinner plate-sized chip’s 400,000 AI-optimized cores could train models 100 to 1,000 times faster than the then-market-leading AI chip, Nvidia’s V100 GPU.

The company’s second wafer-scale chip, the Wafer Scale Engine-2 (WSE-2), debuted in April of 2021, packing what the company said was twice the specifications and performance into the same 8-inch by 8-inch silicon footprint. The WSE-2 includes 2.6 trillion transistors and 850,000 cores. The new chip, made by TSMC on its 7nm node, delivers 40 GB of on-chip SRAM, 20 petabytes per second of memory bandwidth and 220 petabits per second of aggregate fabric bandwidth. Gen-over-gen, the WSE-2 provides about 2.3X the performance of the original chip across all major performance metrics.
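As a rough sanity check on the generational jump, the short sketch below computes the WSE-2 to WSE-1 ratios for the two specifications this article gives for both chips (transistor count and core count). The dictionary names are illustrative only, and the sketch does not attempt to reproduce the "about 2.3X" performance figure, which refers to performance metrics rather than raw counts.

```python
# Figures as quoted in this article; variable names are illustrative.
wse1 = {"transistors": 1.2e12, "cores": 400_000}
wse2 = {"transistors": 2.6e12, "cores": 850_000}

# Ratio of second-generation to first-generation value for each spec.
ratios = {spec: wse2[spec] / wse1[spec] for spec in wse1}

for spec, ratio in ratios.items():
    print(f"{spec}: {ratio:.2f}x")
```

Both ratios come out a little above 2, which is in line with the company's "twice the specifications" description.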

To drive its new engine, Cerebras designed and built its next-generation system, the CS-2, which it bills as the industry’s fastest AI supercomputer. Like the original CS-1, the CS-2 uses internal water cooling in a 15-rack-unit (15U) enclosure with 12 lanes of 100 Gigabit Ethernet.

Both the first- and second-generation Cerebras chips are made by cutting the largest possible square from a 300 mm wafer, yielding chips of roughly 46,000 square millimeters, about the size of a dinner plate.
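The geometry behind those numbers can be checked with a few lines of arithmetic. The sketch below compares the largest sharp-cornered square that fits inside a 300 mm circle with the 46,225 square millimeter area reported for the chip. The helper name is illustrative, and the reading that the small difference comes from rounded corners is an inference from the numbers, not something the company states here.

```python
import math

WAFER_DIAMETER_MM = 300.0      # wafer size quoted in the article
REPORTED_AREA_MM2 = 46_225.0   # chip area quoted in the article
MM_PER_INCH = 25.4

def inscribed_square_side(diameter_mm: float) -> float:
    """Side of the largest sharp-cornered square inside a circle."""
    return diameter_mm / math.sqrt(2)

side_inscribed = inscribed_square_side(WAFER_DIAMETER_MM)
area_inscribed = side_inscribed ** 2

# Side length implied by the reported area, assuming a plain square.
side_reported = math.sqrt(REPORTED_AREA_MM2)
side_reported_in = side_reported / MM_PER_INCH

print(f"inscribed square: {side_inscribed:.1f} mm side, {area_inscribed:.0f} mm^2")
print(f"reported chip:    {side_reported:.1f} mm side ({side_reported_in:.2f} in)")
```

A strict inscribed square works out to about 212 mm per side (45,000 square millimeters), while the reported area implies about 215 mm per side. A 215 mm square with sharp corners would not quite fit inside a 300 mm circle, which suggests the actual die has slightly rounded or clipped corners.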

James Kobielus, senior research director for data communications and management at TDWI, a data analytics consultancy, told EnterpriseAI that the latest $250 million in funding, combined with the company’s previous investment money, “is the largest I can recall for an AI hardware chip company that has not yet gone IPO.” An IPO is likely only a matter of time, he added, probably in 2022.

“It is clear that the investment community is eager to fund AI chip startups, given the dire shortage of chips right now in the world economy and the criticality of embedded hardware-accelerated AI functionality to so many high-end products,” said Kobielus. “What is most compelling about Cerebras is their hardware focus on accelerating very large language models, which stand at the forefront of the embedded NLP that is critical to digital assistants, conversational UIs, and so many other mass-market usability innovations in today’s self-service economy.”

The company’s products are taking on GPUs, which are the dominant AI chip technology today, he said. “Cerebras’ CS-2 systems, powered by its WSE-2 chip, specializes in accelerating very large language models, and has in recent months boosted the size of such networks that are feasible by 100x or more, and now executes GPT-3 models of 120 trillion parameters,” said Kobielus. “We are also seeing application of very large language model tech, such as GPT-3 and BERT, to video processing and other application domains. With this fresh funding, Cerebras is in position to address these opportunities in NLP, speech recognition, smart cameras, and other domains head-on.”

Chirag Dekate, an AI infrastructure, HPC and emerging compute technologies analyst with Gartner, agreed.

“The next phase of AI evolution will comprise greater specialization of systems and hardware, coupled with deeper integration of AI applications in enterprise value chains,” said Dekate. “Several vendors, including Cerebras, are poised to address the emergent market opportunity around high performance and throughput AI. Greater specialization of hardware and systems to address performance and scaling of advanced AI workloads will be crucial to maximizing value capture.”

In September, Cerebras announced that it was launching a new Cerebras Cloud platform with partner Cirrascale Cloud Services to provide access to Cerebras’ CS-2 system on Cirrascale’s cloud. The physical CS-2 machine – sporting 850,000 AI-optimized compute cores and weighing in at approximately 500 pounds – is in a Cirrascale datacenter in Santa Clara, Calif., but the service will be available around the world, providing access to the CS-2 to anyone with an internet connection and $60,000 a week to spend training very large AI models.