Natural Language Processing Use Growing, But Still Needs Accuracy and Usability Gains: Study

Even as natural language processing (NLP) budgets continue to grow within many enterprises, the technology still needs critical refinements to bolster its accuracy and make it easier for non-data scientists to use across a wide range of business projects.

Those are some of the conclusions of a new global online study of the NLP marketplace, which shows that while the technology is gaining steam, there are still areas where more work is needed. NLP is a branch of AI that trains machines to recognize human language and turn it into data that can be used for a myriad of tasks.

The second annual report, the 2021 NLP Industry Survey, Insights and Trends: How Companies Are Using Natural Language Processing, was conducted by Gradient Flow, an independent data science analysis and insights company, for John Snow Labs, an AI and NLP vendor in the healthcare industry. John Snow Labs is the developer of the widely used Spark NLP library.

Out of 655 respondents, 60 percent said their NLP budgets grew by at least 10 percent in 2021, according to the 21-page report. That group included 33 percent who reported a 30 percent increase and 15 percent who said their NLP budgets more than doubled during the year.

The 2021 NLP Industry Survey collected responses online from June 10 to August 13 from IT professionals across a wide range of industries, company sizes, stages of NLP adoption and geographic locations. Respondents were invited to participate via social media, online advertising, the Gradient Flow Newsletter and outreach to partners.

When asked what matters most when evaluating NLP products, 40 percent of the respondents named accuracy as the most important requirement. Twenty-four percent said the most important requirement was production readiness, while 16 percent were looking for excellent scalability.

Some 39 percent of the respondents said they have difficulty tuning their NLP models, while 36 percent cited concerns about the costs of the technology, according to the survey.

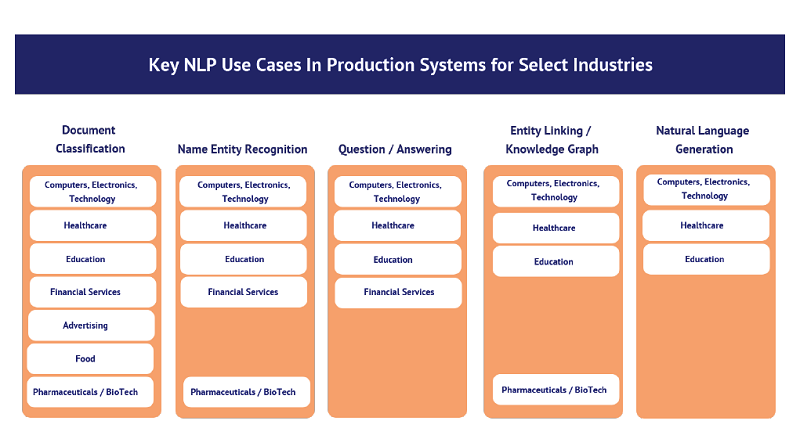

The top four industries represented among survey respondents were healthcare at 17 percent, technology at 16 percent, education at 15 percent and financial services at 7 percent.

David Talby, the CTO and founder of John Snow Labs, told EnterpriseAI that addressing these customer concerns and requirements remains important to his company and to the NLP marketplace.

The comments from survey respondents are evidence that NLP systems are still not turnkey enough for users and that they still require considerable in-house effort to tune models or assemble an efficient pipeline that makes it all work for enterprises, said Talby.

“The way I read the issues around accuracy is that those issues usually come when people may miss those steps,” he said, referring to tuning work that many users do not yet realize is needed. Instead, companies often try to use out-of-the-box models within an NLP system, only to find that they are not sufficient without custom tuning, he said.

That is when they find that the accuracy is lower than needed.

“What you really need is the ability, the knowledge and the software to make it very easy to train and tune your models to your specific needs,” said Talby. “This is where a lot of new tools are being built now. A lot of social data annotation tools, AutoNLP and active learning tools are coming into play.”

Another common need is for data annotation tools with active learning and transfer learning capabilities, which let an NLP system learn what matters to human users so that it can replicate their annotation skills, he said.

“The goal is how fast can you take a domain expert like this and create a model that basically learns from them,” said Talby. “The challenge is that most people are not data scientists. We need to give them user interfaces where they annotate the data to get documents. But then in the backend, you want automated software that says you can do transfer learning with some form of ... language model, and basically give you a tuned model for the specific problem that you want to solve.”

One problem the industry is dealing with is that some NLP users today say accuracy is not good, when what they mean is that it is not good straight out of the box, he said. Those expectations must be reset so that users understand they need to customize models to reach their goals, and such tuning must also be made easier to do.

“Our goal is to help companies actually put this to good use in production,” said Talby.

Ultimately, the NLP industry needs to educate users to help make the technology more transparent and valuable to them, he said.

“It is not about more powerful computers or more powerful GPUs or about larger models,” said Talby. “The problem is that people really do not know how to use the tools. It is like when the internal combustion engine was invented. We needed to go the distance from that to everyone having a car. First, they needed to make the cars easy to use. Then people had to learn to drive. We are not there yet for NLP. There is a lot of work we are doing. Every driver cannot be a mechanical engineer. I think this is really where the industry is at.”

None of these transitions are a surprise, said Talby.

“This is actually an area that is still emerging, and people are still figuring it out,” he said. “And they have many other [business and IT] priorities [as well]. NLP is basically working with text and being able to answer questions about the text is becoming a very big thing.”

Talby acknowledged that the industry has not yet achieved all of these goals, but said it wants to make the coming adjustments and improvements with humans in the loop so that it all works well.

“This is where the challenges are,” he said. “We have papers coming out. There are use cases. Three years ago, this was just not possible, and now look – it is possible. But there are still a lot of innovations that are required to make it easy to use, to work in the real-world setting and to deal with exceptions, the biases and deal with scale.”