Enterprises Needing Accelerated Data Analytics and AI Workloads Get Help from Nvidia and Cloudera

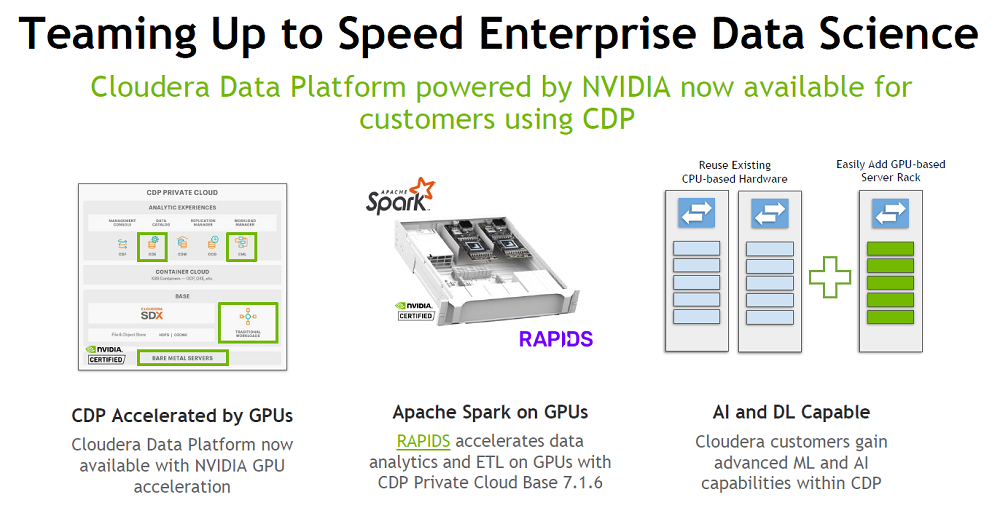

In April, Nvidia and Cloudera unveiled a new partnership effort to bring together Nvidia GPUs, Apache Spark and the Cloudera Data Platform to help customers vastly accelerate their data analytics and AI workloads in the cloud.

After a few months of fine-tuning and previews, the combination is now generally available to customers that are looking for help in speeding up and better managing their critical enterprise workloads.

“What we've announced and made available is the packaging of that solution in Cloudera’s CDP private cloud-based data platform,” Scott McClellan, senior director of Nvidia’s data science product group, said in an online briefing with journalists on Aug. 3. “All the users can get access … via the same mechanisms they use in general to install Spark 3.0 in their Cloudera Data Platform” architecture.

Enabling the combination of technologies is the work that Nvidia has been doing in the past few years to bolster GPU acceleration and transfer parent acceleration using GPUs of Apache Spark workloads in the upstream Apache Spark community, said McClellan.

Customers have been asking for this kind of integration to help them ease the process in their workflows, said McClellan.

“From an IT perspective, we are seeing quite a bit of demand to just simplify the whole experience,” he said. Many enterprises have moved such operations to the cloud because of the “no-touch, self-service” nature of the cloud, he added. “We want to bring a lot of that same experience to enterprises in a hybrid model which is a key focus of the Cloudera Data Platform. There is high demand from IT environments for simple integration and solutions like this.”

Sushil Thomas, the vice president of machine learning at enterprise data cloud vendor Cloudera, agreed.

“[Customers] are screaming for GPUs so they can do all of that work a lot faster,” said Thomas. “The partnership we have will help a lot. And then on the data engineering side, SQL and GPUs and accelerated SQL access is always big as well. There it is more of what is possible and what was not possible before … all the work Nvidia has done in the Spark ecosystem and before the integration that we have done for that within the current platform. Of course, faster SQL, using the latest hardware, is always requested.”

The April partnership announcement from Nvidia and Cloudera laid out plans for the integration of Nvidia’s accelerated Apache Spark 3.0 platform with the Cloudera Data Platform to allow users to scale their data science workflows. The two companies have been working together since 2020 to deploy GPU-accelerated AI applications using the open source RAPIDS accelerator suite of software libraries and APIs, which give users the ability to execute data science and analytics pipelines entirely on GPUs across hybrid and multi-cloud deployments.

The latest Spark 3.0.3 version of Spark is the first release offering GPU acceleration for analytics and AI workloads. Apache Spark is a unified analytics engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.

RAPIDS is licensed under Apache 2.0 and involves code maintainers from Nvidia who continually work on the code for the project. RAPIDS includes support for multi-node, multi-GPU deployments, enabling vastly accelerated processing and training on much larger dataset sizes.

The product integration is aimed at enterprise data engineers and data scientists who are looking to overcome bottlenecks created by torrents of increasingly unstructured data. GPU-accelerated Spark processing accessible via the cloud aims to help break logjams that slow the training and deployment of machine leaning models.

“Nvidia has already done a lot of work in Apache Spark to transparently accelerate Spark workloads on GPUs where possible,” said Thomas. “Cloudera integrates this functionality into Cloudera Data Platform so that all of our customers and all of their data have access to this acceleration, without making any changes to that application. That is important so customers do not have to go in and rewrite things for their existing applications to work.”

To use the services, a customer can add a rack of GPU servers into their existing cloud cluster and the Nvidia hardware and Cloudera take care of the work, said Thomas.

“Any Spark SQL workloads that can now take advantage of GPUs will use them without any required application changes,” he said. “And this is in addition to any machine learning workloads that will also be accelerated by the GPUs.”

This all allows customers to do more on the machine learning side, he said. “This means more compute power on model training and getting to better model accuracy on the data engineering side. It means accelerated processing with 5x or [higher] full stack acceleration for a data science workload. This means you can do five times more in the same data center footprint, which is a huge gain.”

By integrating Nvidia GPUs, Spark and the Cloudera Data Platform for users, it will allow enterprises to get the benefits of the combination without having large IT staffs that specialize in Spark, RAPIDS and other technologies that may not be as familiar, said McClellan.

“We see a big driver for mainstream enterprise that do not have … a team of Spark committers or a team of skilled solution integrators,” he said. “They should be able to get the same solutions that are more turnkey.”

Tony Baer, principal analyst with dbInsight LLC, told EnterpriseAI that for enterprises, such pre-packaged technology partnership offerings are becoming a trend.

“It is Cloudera’s and Nvidia’s attempt to show that in private cloud, you can get all of the optimizations that you could get running from the cloud providers that have their own machine learning services running on their custom hardware,” said Baer. “It will eliminate a lot of the blocking and tackling at the processing level” for customers.

This does not mean, however, that customers will wash their hands completely of the involved processes, said Baer. “That still leaves all the blocking and tackling that they still must otherwise do with the rest of the machine learning lifecycle, from building the right data pipelines to choosing the right data sets, problem design, algorithm selection, etc. This just eliminates the processor part, just like with the cloud machine learning services.”

In June, Cloudera was acquired for $5.3 billion in a move that will make it a private company. The deal, which is expected to close in the second half of 2021, will sell the company to affiliates of Clayton, Dubilier & Rice and KKR in an all cash transaction.