Xilinx Expanding Its Versal Chip Family With 7 New Versal AI Edge Chips Aimed at Edge Workloads

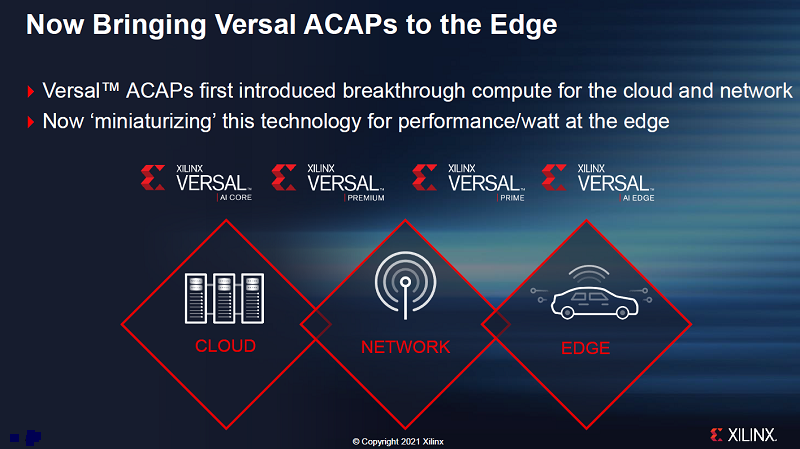

FPGA chip vendor Xilinx has been busy over the last several years cranking out its Versal AI Core, Versal Premium and Versal Prime chip families to fill customer compute needs in the cloud, data centers, networks and more.

Now Xilinx is expanding its reach to the booming edge computing marketplace, unveiling seven new chips in its latest Xilinx Versal AI Edge chip family. Expected to arrive in the first half of 2022, the Versal AI Edge chips are targeted to help customers with a wide range of real-time workloads, from autonomous vehicles to robotics, industrial applications, smart vision systems, medical uses, unmanned drones and even surgical robots. Versal AI Edge series design documentation and support are available to early access customers.

Built on the Versal family’s ongoing 7nm process technology, the Versal AI Edge chips use Xilinx’s existing Adaptive Compute Acceleration Platform (ACAP) architecture and its Vivado, Vitis and Vitis AI software applications to provide developers with the tools they need to create new edge designs for their companies, Rehan Tahir, Xilinx’s senior product line manager, told EnterpriseAI.

Vivado provides design tools for hardware developers, while Vitis is a unified software platform for software developers. Vitis AI is used by data scientists. Also available are domain-specific operating systems, frameworks and acceleration libraries to round out the platform’s toolsets.

The Versal ACAP architecture, which debuted in 2018 with the company’s earlier Versal chip families, is now being miniaturized to meet the performance and low-power requirements of a wide range of edge environments, said Tahir.

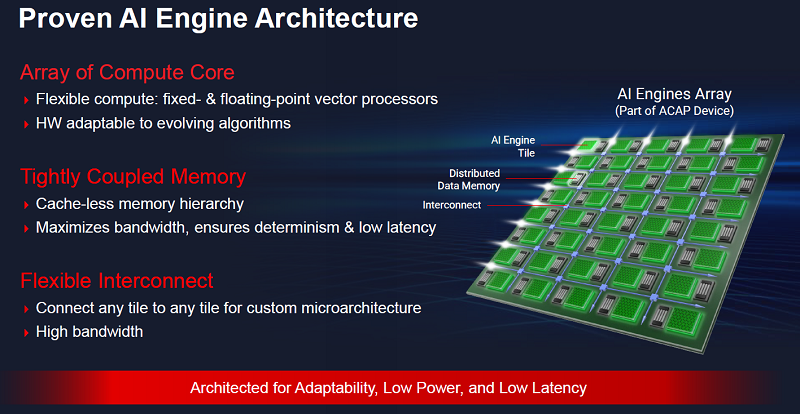

ACAPs are adaptive SoCs which combine scalar engines for embedded compute, adaptable engines for sensor fusion and hardware adaptability, and intelligent engines for AI inference. Also included are fast memory and interfaces that help deliver acceleration for applications.

“We have taken a lot of learnings from these [earlier] devices, and we are also bringing some optimizations to then make sure that these [new] devices are the perfect fit with the edge,” he said.

"Before 2018, there was no such thing as ACAPs,” said Tahir. “We introduced it with [the first] Versal chips. It is a category of device, adaptive SoCs. It is not just a pure FPGA. There are processors, there is programmable logic, there is other stuff going on. ACAP is the product category. If you had to say is this an ASIC or is this an ACAP, then clearly it is an ACAP because it has that programmability as part of the programmable logic that you don't have it in an ASIC or an ASSP.”

Tahir said that puts the Versal AI Edge series in with other AI chips under a broad umbrella. “An AI chip to me is a broad term. AI chips include GPUs, it includes ASSPs that have some type of AI acceleration mechanism, and it absolutely includes these ACAPs because we have these AI engines specifically geared for AI applications. That is the whole reason those AI engines are there.”

Traditional FPGAs often get a bad rap because they can be hard to program, said Tahir. “And we are trying to solve that problem [with the Versal AI Edge series]. And through our Vitis and Vitis AI [software], the goal is to have everything there and make it as easy as possible for the developers.”

Xilinx claims the new Versal AI Edge chip family provides 4x the performance-per-watt of GPUs and 10x the compute density of its own previous generation of chips, the Xilinx Zynq UltraScale+ MPSoCs (multiprocessor systems-on-chip), while also providing reduced latency and flexible architectures. The Zynq UltraScale+ chips were built on a 16nm process node, compared to the 7nm process node of the new Versal AI Edge series.

Versal AI Edge Specs

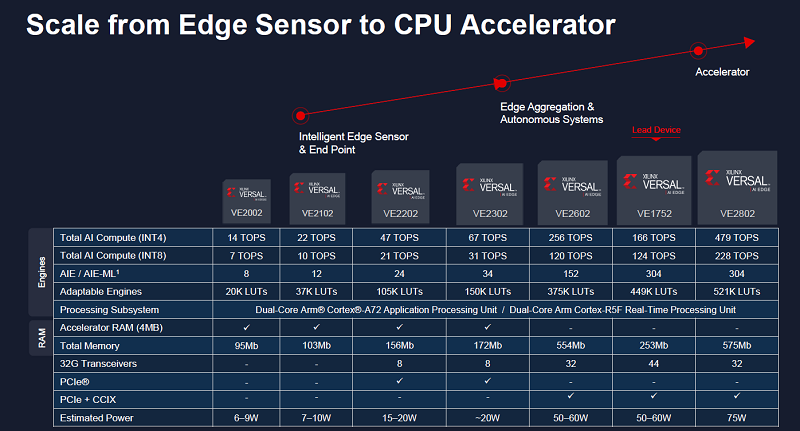

The Versal AI Edge series starts with the VE2002 chip, which consumes six to nine watts of power, provides 14 TOPS of INT4 or 7 TOPS of INT8 performance, and includes 95MB of total memory. The VE2102 chip consumes seven to 10 watts of power, provides 22 TOPS of INT4 or 10 TOPS of INT8 performance, and includes 103MB of memory, followed by the VE2202 chip, which consumes 15 to 20 watts of power and provides 47 TOPS of INT4 or 21 TOPS of INT8 performance, along with 156MB of memory.

Next up is the Versal AI Edge VE2302 chip, which consumes less than 20 watts of power, provides 67 TOPS of INT4 or 31 TOPS of INT8 performance, and includes 172MB of memory.

Rounding out the line-up are the VE2602, which consumes 50 to 60 watts of power while providing 256 TOPS of INT4 or 120 TOPS of INT8 performance along with 554MB of memory; the VE1752, which consumes 50 to 60 watts of power while providing 166 TOPS of INT4 or 124 TOPS of INT8 performance along with 253MB of memory; and the VE2802, which consumes 75 watts of power and provides 479 TOPS of INT4 or 228 TOPS of INT8 performance. The VE2802 includes 575MB of memory.
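To make the power and performance figures above easier to compare, the following is a minimal Python sketch that tabulates the seven chips and derives an approximate INT8 TOPS-per-watt figure for each. The numbers are copied from the specs quoted in this article; the choice to divide by the upper end of each quoted power range (and to treat the VE2302's "less than 20 watts" as 20) is an assumption made for illustration, not a Xilinx-published metric, and the function names are hypothetical.

```python
# Spec figures as quoted in the article.
# Assumption: power is taken as the UPPER bound of each quoted range;
# "less than 20 watts" for the VE2302 is treated as 20 W.
# Columns: (name, max_watts, int4_tops, int8_tops, memory_mb)
CHIPS = [
    ("VE2002", 9, 14, 7, 95),
    ("VE2102", 10, 22, 10, 103),
    ("VE2202", 20, 47, 21, 156),
    ("VE2302", 20, 67, 31, 172),
    ("VE2602", 60, 256, 120, 554),
    ("VE1752", 60, 166, 124, 253),
    ("VE2802", 75, 479, 228, 575),
]


def int8_tops_per_watt(max_watts, int8_tops):
    """Rough efficiency estimate: INT8 TOPS divided by worst-case power."""
    return int8_tops / max_watts


def efficiency_table(chips=CHIPS):
    """Map each chip name to its estimated INT8 TOPS per watt (2 decimals)."""
    return {
        name: round(int8_tops_per_watt(watts, int8), 2)
        for name, watts, _int4, int8, _mem in chips
    }


if __name__ == "__main__":
    for name, eff in efficiency_table().items():
        print(f"{name}: ~{eff} INT8 TOPS/W")
```

Because the divisor is the worst-case power figure, these ratios are conservative estimates; real efficiency depends on the workload and on how much of each device is active.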

All seven Versal AI Edge chip models feature dual-core Arm Cortex-A72 CPUs and dual-core Arm Cortex-R5F processors for meeting real-time application requirements.

The VE2002 includes eight AI engine/ML engine tiles, while the VE2102 includes 12 tiles. The VE2202 includes 24 tiles, the VE2302 includes 34, the VE2602 includes 152 tiles and the VE2802 includes 304 tiles. The VE1752 is equipped with 304 tiles, but they are only AI engine tiles.

The chips range in size from 19mm x 19mm for the VE2002 to 35mm x 35mm for the VE1752.

Full Programmability for Flexibility

Because the Versal AI Edge chips combine FPGA logic with Xilinx’s ACAP architecture, they are fully software and hardware programmable, and can even be reprogrammed on the fly, said Tahir.

“There's the programmable logic that is inside of our devices, what we call the adaptable engines,” he said. “What makes Xilinx unique from ASSPs (Application-Specific Standard Products) and GPUs is that our chips are programmable and those are not. You can go in and customize how you want to do the localization, how you want to do the mapping, the sensor fusion.”

In addition, there are Arm-based real-time and application processors on the chips, which allow developers working on autonomous vehicles to make decisions that will influence the vehicle and how it can be controlled and interface with the driver, said Tahir.

“The whole application is accelerated because we have the programmability and the adaptive engines, we have the AI/ML compute capability in the intelligent engines, and then we've got the processors to act in scalar engines,” he said.

Xilinx already has successful engagements with nearly all automotive companies today, said Tahir. “The Versal AI Edge series will only continue that success.”

Standing Out from the Crowd

There is plenty of competition in the overall AI chip marketplace today, particularly when it comes to the rapidly expanding edge market, said Tahir. Competitors include Nvidia, Texas Instruments, Mobileye, Qualcomm, NXP and Renesas, all of which are either focusing on the low end or the high end, he said.

To help Xilinx differentiate itself from the field, the company’s complete platform – from its Versal AI Edge chip line to the accompanying software and tools – works across all the new chips. Some competitors, like Nvidia, use different platforms to support different chips, meaning customers cannot move from one chip to another without also changing platforms and architectures, which can be complicated, said Tahir.

“Scalability matters because you have [different] performance buckets,” he said. “We have a unique combination of both the low-power, low-end devices, mid-range and high-end, all on a single platform, a single software stack, a single process node, a single set of models and libraries. You design once and then you can scale up and down as you see fit.”

Analysts Weigh in on Versal AI Edge

The new Versal AI Edge chips will be arriving at a good time, Dave Altavilla, principal analyst for HotTech Vision and Analysis, told EnterpriseAI.

“The Xilinx Versal AI Edge series of adaptable accelerators appear to be a well-crafted solution to the insatiable demand for intelligent processing, machine vision and AI inference in various edge devices and networks, from robotics to autonomous driving, UAVs and industrial automation,” said Altavilla. “With the ability to be both hardware and software adaptable in the field and on-the-fly in real-time, Xilinx’s Versal AI Edge can deliver critical enabling and adaptable processing solutions that otherwise couldn’t be enabled with traditional fixed-function technology. Couple Versal AI Edge with Xilinx’s full stack market-specific application programming model with native tools, libraries and frameworks that do not require developers to be hardcore FPGA experts, and the total platform solution that Xilinx brings to the table is very strong.”

Another analyst, Jim McGregor of Tirias Research, agreed.

“Everyone is focused on edge AI right now, everything is going towards AI, but you realize you cannot send everything to the cloud, especially when you have to process information in real-time,” said McGregor. “So, it is important to have solutions that are flexible for the edge so we can process stuff in real-time or near real-time, whether it is from sensor data or whether it is some user interface or something like that. Having solutions like that is critical.”

The advantage for Xilinx of having an FPGA as part of the new chip family is flexibility in programming, he said. “This is going to – unlike the traditional use for FPGAs, which is really just for design and development – this gives somebody the flexibility of designing a solution around the application with an FPGA, and then go straight into production.”

And as companies work with AI, it is not just about the hardware, said McGregor. “It is about the software, and that is where a lot of people get tripped up. What is important here is that you have a flexible, or as they like to call it, adaptable hardware platform, that has a full software solution behind it. They are trying to bring all that down in terms of hardware and software for embedded or IoT applications.”

What Xilinx is doing is like the approach of Qualcomm, which over time added different engines to its Snapdragon processor line, said McGregor. Xilinx is using AI engines, digital signal processing (DSP) engines and more, like Qualcomm’s strategy.

“All of those Qualcomm engines work together to process different types of AI workloads,” he said. “It is very much what Xilinx is trying to bring to all the other types of applications, being able to run not just a general purpose workload but be able to run AI and other things. You can use that DSP just for the sensor processing, and then feed that information into the AI engines to run the neural network to make it actual decisions. And then the FPGA can be programmed as that core CPU, if you will, to actually tell the entire system what it is supposed to be doing.”

McGregor said he sees Xilinx’s approach in speeding up the programming capabilities of FPGAs as a big benefit for potential customers.

“With a traditional FPGA, one of the downfalls was it took so long to reprogram it,” he said. “It can take at best a couple seconds. Xilinx has gotten much better at that to where they can program some of their FPGAs in microseconds. And that is very significant because that reduces one of the key barriers of FPGAs – being able to reprogram it from one workload to the next.”

Xilinx Technologies Attracted AMD's Attention

Things got even more interesting for Xilinx in the marketplace in October of 2020, when it announced that it was being acquired by chipmaker AMD for $35 billion in an all-stock transaction. The acquisition helps AMD keep pace during a time of consolidation in the semiconductor industry. GPU rival and market leader Nvidia acquired Mellanox (interconnect) and continues to make its way through a deal to buy Arm (processor IP).