Google’s Vertex AI Managed ML Platform, Built to Ease Machine Learning for Developers, Is Now Available

Google Cloud has been busy over the last few years building a wide range of AI and machine learning tools for developers to help them find real-world uses for AI and ML within their enterprises.

But as the tools proliferated, AI and ML workflows, modeling, experimentation and more grew increasingly complicated. Something was needed to make sense of the mess.

With just such a fix in mind, Google Cloud has unveiled its new Vertex AI managed ML platform, which is designed to streamline and accelerate ML modeling and maintenance so that overwhelmed enterprises can get a better handle on their ML and AI initiatives. The platform is now generally available.

Unveiled at the company’s virtual Google I/O conference on Tuesday, May 18, Vertex AI requires nearly 80 percent fewer lines of code to train a model than competing platforms, according to Google. The platform is also built to let data scientists and ML engineers implement and manage machine learning operations (MLOps) for their projects.

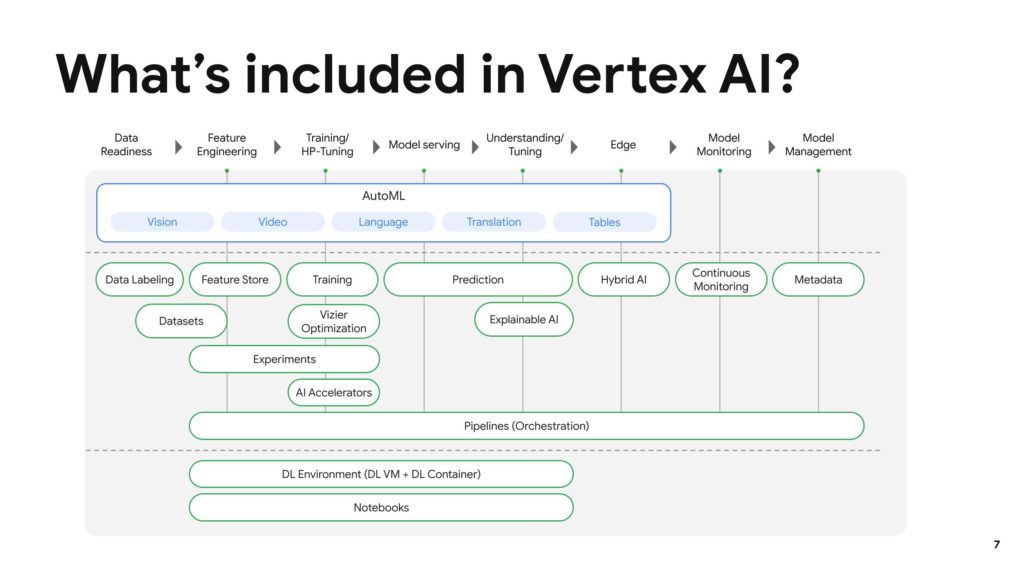

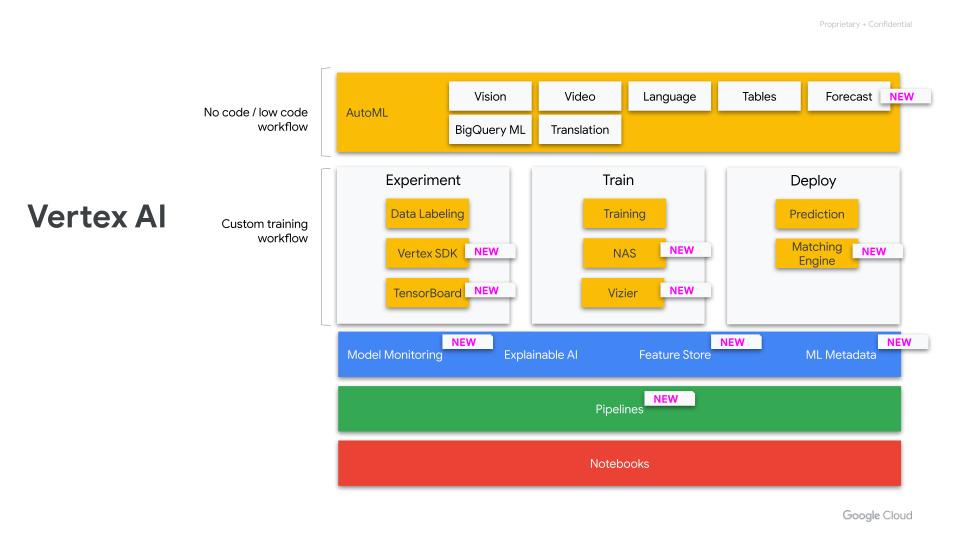

Vertex AI, together with Google Cloud services, uses one unified UI and API to simplify the process of building, training and deploying ML models at scale, according to Google. Within Vertex AI, users can move models from experimentation to production more quickly while also discovering patterns and anomalies, making needed predictions and decisions and gaining agility as they create their workflows.

According to Google, users can also manage models with more confidence, because MLOps tools such as Vertex Continuous Monitoring and Vertex Pipelines remove much of the complexity of self-service model maintenance and repeatability and streamline the end-to-end ML workflow.

Built for Enterprises

Vertex AI was designed to solve two serious problems faced by AI platform companies, including Google Cloud, Craig Wiley, director of the Vertex AI project, told EnterpriseAI.

The first was that Google Cloud had already deployed a large number of AI and ML tools and services to keep pace with the market and with what developers needed, but those tools were often poorly compatible with one another, said Wiley.

“We had customers saying, ‘hey, I want more control or I want more automation’ and we were kind of stuck,” he said. “So, the first piece was to entirely refactor the platform for improved compatibility. We often talk about this idea of moving left and right in the workflow. But what if you could also move up and down among abstractions with ease? So that was the goal to lay the foundations. Vertex AI is the chassis on which we could build that capability and improve that capability over time.”

The other major problem Vertex AI seeks to solve is shifting the focus away from building models, long the main concern in ML. Instead, it aims to help enterprise users find real, measurable and provable value, or ROI, from using ML in their operations, said Wiley.

When ML services began launching regularly in 2016, they promised that once training was complete, users could create a prediction stack and the work would be done, he said.

“And we all know that that's not the case,” he said. “There is a tremendous amount of work that has to go into what's your model behind that API. How do you get the data to it, and is the data from the same sources that you trained it on, or did you train it on the data warehouse and now you're using production data to feed the model?”

With these issues in mind, Google Cloud began asking how it could help customers solve these problems and use ML in ways that actually benefit their workflows and projects. That is where the idea for Vertex AI came from two years ago, said Wiley.

“So now all of a sudden MLOps moves from a lower level set of tools that the ML engineer has to deal with to a set of concepts that become front and center,” said Wiley. “Concepts like pipelines and feature stores become primary ideas, not secondary notions, in how we think machine learning should be managed at scale. On a high level, this makes all these things easier for developers to do because it's a platform that addresses those needs that they didn't have before.”

Vertex AI is designed as a series of fully managed services within Google Cloud that help customers accelerate their use of ML and attain a positive ROI. It is built only for Google Cloud users.

Mark Boost, CEO of Kubernetes platform vendor Civo, told EnterpriseAI that he expects the new Vertex AI platform to be a useful tool for the marketplace.

“Google’s Vertex AI platform is a great example of what’s possible when you listen to developers and deliver something they need,” said Boost. “Developers do not want to get bogged down in complexity, they want tools that give them a clear route to delivering real business solutions.”

That has long been one of the great promises of cloud computing: that it would give developers the luxury of spending less time worrying about infrastructure and more time building products, said Boost.

“But too often the reality isn’t there yet,” he said. “Google’s announcement points to a definitive shift in the industry towards developer-centric solutions. The big hyperscalers are beginning to wake up to the fact that developers do not want to get bogged down in complexity. They want tools that give them a clear roadmap from entry to delivering ROI to the business.”

These changes are even more important for AI, which has fantastic potential but continues to have a high barrier to entry when it comes to returning business value, said Boost. “The more firms can offer developers effective tools to cut down on drag points in the AI lifecycle like experimentation and model training, the faster developers can start to deliver on the benefits of AI right across the enterprise.”

Will Help Overwhelmed Data Scientists: Analyst

Analyst James Kobielus, senior research director for data management at research firm TDWI, said that developers are flocking to integrated MLOps tooling to speed the modeling, training and serving of more complex AI applications across cloud, mobile, edge and other platforms.

“As one of the principal providers of AI services to enterprises everywhere, Google has been building a powerfully integrated set of MLOps cloud services for every possible AI, deep learning, machine learning, and natural language processing use case,” said Kobielus. That work began two years ago with the Google Cloud AutoML service, which gave Google its first foothold in this market, he added.

“Now, with the GA of Vertex AI, Google has a comprehensive MLOps solution that can compete more effectively with Amazon SageMaker, Microsoft Azure Machine Learning and other cloud-based MLOps solutions,” said Kobielus. “What’s most impressive about Vertex AI is Google’s focus on offering, under a single user interface and API, a low-code tool that, they claim, can greatly reduce the amount of code needed to build, train, productionize, and maintain ML models.”

Because data scientists everywhere are stretched painfully thin by expanding workloads, they are seeking out MLOps offerings that act as productivity multipliers, said Kobielus. “Many of the components of the Vertex AI launch were already present in Google Cloud’s solution portfolio in some form, but they gain value from being unified into a common architecture. Where MLOps is concerned, the most important element of this announcement is Vertex Pipelines, the continuous monitoring, experimentation, and labeling service that is a rebranding of Google Cloud’s AI Platform Pipelines. It represents a key accelerator for the collaborative productivity of teams involved in linked DataOps, MLOps, and DevOps workflows.”