Nvidia Unveils 2 New AI Server GPUs and Upcoming Certified Enterprise AI Systems at GTC21

Nvidia GTC21 – With a goal of bringing easier AI to the enterprise, Nvidia is releasing two new AI-targeted GPUs and a line of Nvidia-certified servers from partner manufacturers that are built to run the Nvidia AI Enterprise software suite out of the box.

Details of the new products were unveiled April 12 (Monday) by Nvidia CEO Jensen Huang in a keynote broadcast as part of Nvidia’s spring GTC21 (GPU Technology Conference), which is being held virtually due to the COVID-19 pandemic.

The new GPUs, the Nvidia A30 and the Nvidia A10, are both Tensor Core GPUs built for mainstream enterprise servers. The A30 is aimed at AI inferencing and enterprise workloads, while the A10 is aimed at bringing mainstream graphics and video together with AI services.

Also unveiled was the company’s first-ever data center CPU, named Grace for the late computer programming pioneer and U.S. Navy Rear Admiral Grace Hopper, and a myriad of new AI software and services, all of which are aimed at helping customers to use AI for cybersecurity, autonomous vehicles, workflows and more.

All of these components are being added to bring Nvidia together as a full stack computing platform company that can help customers solve new problems and enter new markets as AI moves from early work in theory and development into real-world uses, Huang said in his keynote.

“The next wave is enterprise, and industrial edge where AI can revolutionize the world's largest industries, from manufacturing, logistics, agriculture, healthcare, financial services, and transportation,” said Huang. “There are many challenges to overcome,” especially in industrial edge and autonomous system applications, but they also offer the largest opportunities for AI to make an impact. “Trillion dollar industries can soon apply AI to improve productivity and invent new products, services and business models. We have to make AI easier to use, to turn AI from computer science to computer products.”

The New A30 and A10 GPUs

The Nvidia A30 GPU is built for AI inference at scale and for mainstream enterprise workloads, according to the company. It can rapidly re-train AI models with TF32 and accelerate high-performance computing applications using FP64 Tensor Cores. Multi-Instance GPU (MIG) and FP64 Tensor Cores combine with 933 gigabytes per second (GB/s) of memory bandwidth in a low 165W power envelope, all running on a PCIe card. The A30 includes 24GB of HBM2 memory and a PCIe Gen4 64GB/s interconnect.

The A30 GPU is a toned-down version of the Nvidia A100, Justin Boitano, general manager of enterprise and edge computing at Nvidia, said in a pre-briefing on April 8.

The Nvidia A10 GPU is built to bring together mainstream graphics and video with AI services. It includes 24GB of GDDR6 memory and a PCIe Gen4 64GB/s interconnect. The A10 is a toned-down version of the Nvidia A40, according to Boitano.

New Nvidia-Certified Enterprise Servers

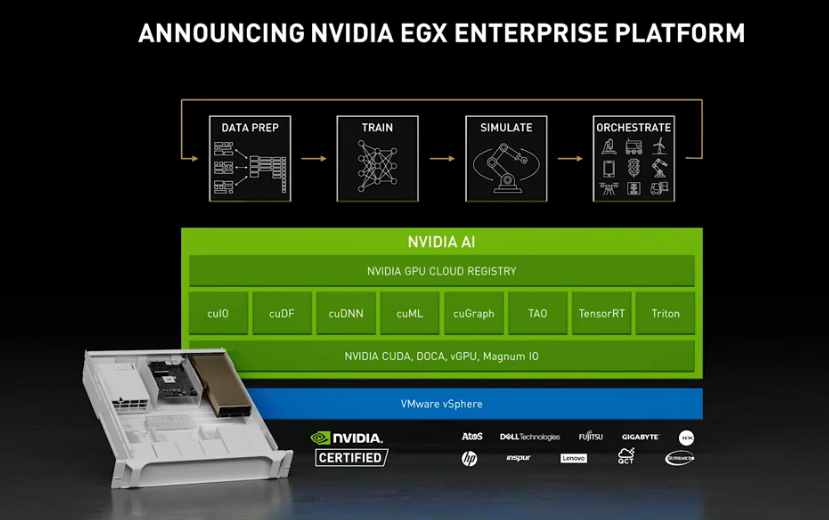

Both of the new GPUs are available in the group of some 50 new Nvidia-certified enterprise AI server models that were also announced by Huang during his keynote. The latest servers are certified to run the Nvidia AI Enterprise software suite, which was announced in March. The Nvidia AI Enterprise software suite is integrated with VMware’s latest vSphere 7 Update 2 virtualization platform to make it easier for enterprises to virtualize their expanding AI workloads.

The first new Nvidia-Certified systems using the A30 and A10 GPUs will be available later this year from Nvidia partners including Atos, Dell Technologies, GIGABYTE, H3C, Inspur, Lenovo, QCT and Supermicro.

As the lure and promise of AI for enterprises continue to spread, Nvidia, like other companies, has been looking for new ways to serve customers, Huang said.

“One segment of computing we've not served is enterprise computing,” and that’s where the integration with VMware vSphere 7 and the latest certified servers will help, said Huang. Some 70 percent of the world's enterprises are running VMware, he added.

“All of the Nvidia optimizations for compute and data transfer are now plumbed through the VMware stack,” he said. “So AI workloads can be distributed to multiple systems and achieve bare metal performance,” giving users major benefits.

The complete platform, from certified servers to the Nvidia AI Enterprise software, is called the Nvidia EGX platform, according to Huang. “The enterprise IT ecosystem is thrilled. Finally, the 300,000 VMware enterprise customers can easily build an AI computing infrastructure that seamlessly integrates into their existing environment. In total, over 50 servers from the world's top server makers will be certified for Nvidia EGX Enterprise.”

Among the first Nvidia customers incorporating these new A30- and A10-based systems into their data centers are Lockheed Martin and Mass General Brigham.

Grace, the First-Ever Nvidia Data Center CPU

In what might have been the most unexpected announcement of the keynote, Huang unveiled Nvidia’s first-ever Arm-based data center CPU, called Grace in honor of the famous American programmer Grace Hopper. The new Arm CPU follows Nvidia’s 2019 declaration of intent to fully embrace Arm and its September 2020 announcement of plans to acquire Arm for $40 billion.

Grace is expected to debut in 2023 in two HPC centers that are leading the way. The Swiss National Supercomputing Centre (CSCS) and the U.S. Department of Energy’s Los Alamos National Laboratory are the first to announce plans to build Grace-powered supercomputers, which will both be supplied by HPE.

Using future-generation Arm Neoverse cores and next-generation NVLink technology, Grace has been custom designed for tight coupling with Nvidia GPUs to power the very largest AI and HPC workloads, according to Nvidia.

The chip, combined with Nvidia’s GPUs and high-performance networking from its Mellanox division, gives Nvidia “the third foundational technology for computing, and the ability to re-architect every aspect of the data center for AI,” said Huang.

For Nvidia, A Timely Enterprise Push

In a pre-briefing on April 8, Manuvir Das, Nvidia’s head of enterprise computing, told EnterpriseAI.news that the company’s decision to target deeper enterprise use of AI with more tools is well-timed.

“From a technology point of view, we believe that the software we are providing has matured enough that we can provide enterprise grade versions and we can do that with all the right support, including SLAs and that kind of stuff,” said Das. Nvidia started with its roots in hardware, and then moved into software tools for AI, he said.

“Now we are ready for phase three, which is to be a serious enterprise grade software vendor, just like VMware provides vSphere or Microsoft provides Exchange Server and how SAP provides their stack,” said Das. “This is the next phase of evolution from us, saying, ‘it's industrial strength AI that’s ready for enterprise customers.’ And, the fact is, AI done in a DIY fashion is challenging for a lot of enterprise customers.”