Here’s An AI-Powered, Voice-Activated Backpack That Aims to Help Visually-Impaired People ‘See’

An open-source AI initiative has come up with a prototype system in the form of a voice-activated backpack that promises to help the visually impaired perceive and navigate their surroundings.

The project, which is sponsored by Intel Corp., draws not only on AI hardware and software but also on open-source development kits and critical input from potential users. Those insights gave developers at the University of Georgia a better sense of the physical as well as economic challenges faced by potential users of the AI-based, voice-activated backpack.

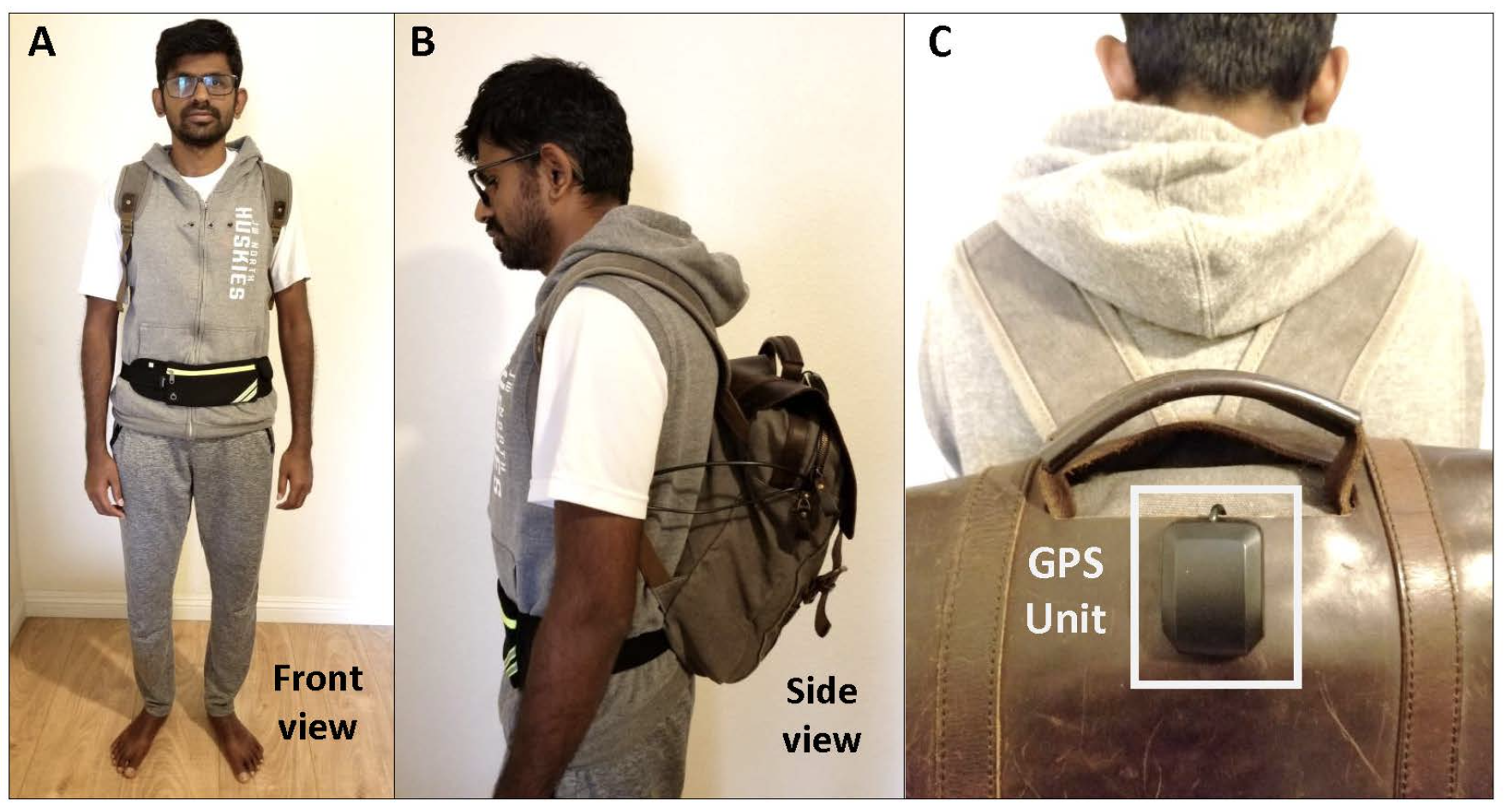

The vision system backpack carries a host computer, usually a laptop, that connects to cameras concealed within a vest jacket. A pocket-sized battery pack provides up to eight hours of power on a charge.

Jagadish Mahendran of the University of Georgia’s Institute for Artificial Intelligence said his team was inspired by earlier work on robotic vision. “Last year when I met up with a visually-impaired friend, I was struck by the irony that while I have been teaching robots to see, there are many people who cannot see and need help,” Mahendran said.

His team won last year’s Intel-sponsored OpenCV Spatial AI Competition, providing the impetus to continue development. The prototype is built around OpenCV’s AI Kit with Depth, or OAK-D, which includes a spatial AI camera that can be affixed to either a user’s vest or a fanny pack that also carries a battery.

Emphasizing the open-source approach, Mahendran said “the system is meant to be simple, wearable and also unobtrusive so that the user doesn’t gain any unnecessary attention by other pedestrians in a public place.”

The OAK-D camera kit runs on Intel’s Movidius VPU AI chip. The user communicates with the system through Bluetooth-enabled earphones, which relay critical updates and navigational information, and can correct the system through voice commands.

While the visual system makes use of pre-trained models developed for advanced driver-assistance systems, depth perception is critical to the success of a vision system for the sight impaired. Mahendran explained that his prototype incorporates a pair of cameras to provide stereo vision used to estimate depth. Combined with the spatial AI camera, the system helps users estimate depth while also detecting moving objects, traffic signals, hanging obstacles, crosswalks and changing elevations.
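The stereo depth estimate Mahendran describes rests on a standard relation for a rectified camera pair: depth equals focal length times the distance between the cameras (the baseline), divided by the disparity, which is the horizontal pixel shift of the same point between the two images. The sketch below illustrates that relation only; it is not the team's code, and the function name, focal length and baseline values are assumptions chosen for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Estimate depth in meters for one pixel of a rectified stereo pair.

    Returns None when the disparity is zero or negative, which means
    no valid match was found (or the point is effectively at infinity).
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


# Illustrative (assumed) camera parameters, not taken from the article.
FOCAL_LENGTH_PX = 800.0   # focal length expressed in pixels
BASELINE_M = 0.075        # spacing between the two cameras, in meters

if __name__ == "__main__":
    for disparity in (60.0, 30.0, 15.0):
        depth = depth_from_disparity(disparity, FOCAL_LENGTH_PX, BASELINE_M)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Halving the disparity doubles the estimated depth, which is why distant objects, whose disparity is only a few pixels, are harder to range precisely than nearby ones.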

“One of the advantages of this combination of Intel’s Movidius chip and this stereo vision is that you can couple the detection that is happening at the image level with the depth data,” said Mahendran.
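One plausible way to couple image-level detection with depth data, sketched here under stated assumptions, is to take the bounding box a detector returns and read the median depth of the pixels inside it. The article does not describe the team's actual method; the depth map layout (a list of rows, in meters, with 0 marking invalid pixels), the box format and both function names are hypothetical.

```python
from statistics import median


def object_distance(depth_map, box):
    """Return the median valid depth (meters) inside a bounding box.

    depth_map: list of rows, each a list of depths in meters (0 = invalid).
    box: (x_min, y_min, x_max, y_max) in pixels, max edges exclusive.
    Returns None if the box contains no valid depth samples.
    """
    x_min, y_min, x_max, y_max = box
    samples = [
        depth_map[y][x]
        for y in range(y_min, y_max)
        for x in range(x_min, x_max)
        if depth_map[y][x] > 0
    ]
    return median(samples) if samples else None


def describe(label, distance_m):
    """Build a short phrase that could be spoken to the user."""
    if distance_m is None:
        return f"{label}, distance unknown"
    return f"{label}, {distance_m:.1f} meters ahead"


if __name__ == "__main__":
    # Tiny made-up depth map; a real one would have one value per pixel.
    depth_map = [
        [5.0, 5.0, 5.0, 5.0],
        [5.0, 2.0, 2.2, 5.0],
        [5.0, 0.0, 2.4, 5.0],
        [5.0, 5.0, 5.0, 5.0],
    ]
    print(describe("crosswalk", object_distance(depth_map, (1, 1, 3, 3))))
```

The median is used rather than the mean so that a few background pixels or invalid readings inside the box do not skew the reported distance.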

The next step is raising the appropriate amount of funding to continue development, optimizing hardware and software while tapping into the open-source development community. “We have a clear set of goals to achieve once we raise funds,” Mahendran said. “We want to make the system as affordable and accessible as possible.”

The current plan is to develop a platform, then optimize it as a “plug-and-play” system in collaboration with open-source developers, added Hema Chamraj, director of Intel’s Technology Advocacy and AI4Good initiative.

“The technology exists,” she stressed. “We are only limited by the imagination of the developer community.”

A video demonstrating the AI vision system is here.


George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).