Nvidia Unveils Certified Server Program Offering Pre-Built Servers for AI Applications

Nvidia today launched a certified systems program in which participating vendors can offer Nvidia-certified servers with up to eight A100 GPUs. Separate support contracts directly from Nvidia for the certified systems are also available. Besides the obvious marketing motives, Nvidia says the pre-tested systems and contract support should boost confidence and ease deployment for those taking the AI plunge. Nvidia-certified systems would be able to run Nvidia’s NGC catalog of AI workflows and tools.

Adel El-Hallak, director of product management, NGC, announced the program in a blog post today, and Nvidia held a pre-launch media/analyst briefing yesterday. “Today, we have 13 or 14 systems from at least five OEMs that are Nvidia-certified. We expect to certify up to 70 systems from nearly a dozen OEMs that are already engaged in this program,” said El-Hallak.

The first systems, cited in El-Hallak’s blog, include:

- Dell EMC PowerEdge R7525 and R740 rack servers

- GIGABYTE R281-G30, R282-Z96, G242-Z11, G482-Z54, G492-Z51 systems

- HPE Apollo 6500 Gen10 System and HPE ProLiant DL380 Gen10 Server

- Inspur NF5488A5

- Supermicro A+ Server AS -4124GS-TNR and AS -2124GQ-NART

Large, technically sophisticated users, such as hyperscalers and large enterprises, are not expected to be big buyers of Nvidia-certified systems, but smaller companies and newcomers to AI may be attracted to them, analysts say.

“I don’t think it will speed up the adoption of AI per se but it will take some of the variables out of the equation. Especially small-scale deployments will benefit,” said Peter Rutten, research director, infrastructure systems, platforms and technologies group, IDC. “There is a certain appeal for end users to be assured that the hardware and software are optimized and have that package be officially ‘certified.’ It relieves them from having to optimize the system themselves or having to research the various offerings in the market for optimal performance based on hard-to-interpret benchmarks.”

Karl Freund, senior analyst, HPC and deep learning, Moor Insights & Strategy, said, “I think uncertified systems will be fine for large cloud service and e-commerce datacenters; those customers build their own from ODMs, [and] enterprise customers already buy from the OEMs who will certify. [That said] making it simple and easy to stand up hardware AND software from NGC should speed time to value for IT shops.”

Nvidia didn’t present a detailed test list for certification but El-Hallak offered the following description:

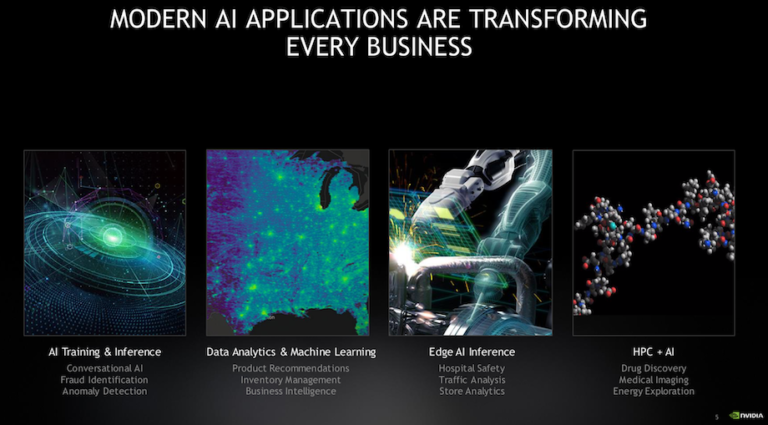

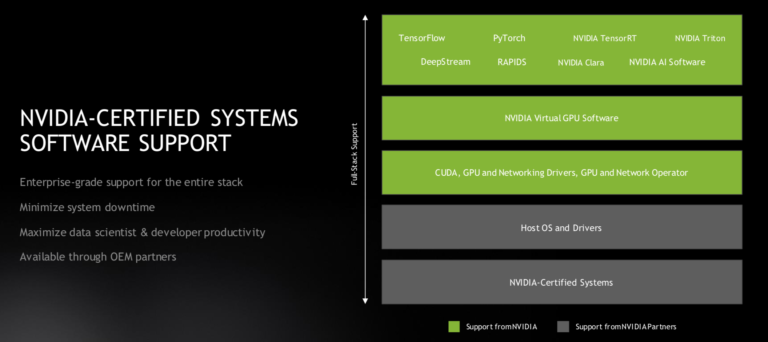

“It starts with different workloads. We test for AI training and inference, machine learning algorithms, AI inferencing at the edge – so streaming video, streaming voice, and HPC types of workloads. We essentially establish a baseline, a threshold, if you will, internally. We provide our OEM partners with training tips that then run the workloads. So we do things like test with different batch sizes, with different provisions, and test across a single and multiple GPUs.

“We [also] test many different use cases. We look at computer vision types of use cases. We look at machine translation models. We test the line rate as two nodes are connected together to ensure the networking and the bandwidth is optimal. [F]rom a scalability perspective, we test for a MIG instance (a multi-instance GPU), so a fraction of the GPU, a single GPU, across multiple GPUs, [and] across multi-nodes. We also test for GPUDirect RDMA to ensure there’s a direct path for data exchange between the GPU and third-party devices. Finally, for security, we test for data encryption with built-in security such as TLS and IPsec. We also look into TPM to ensure there’s a hardware security of the device,” he said.

Proven ability to run the NGC catalog is a key element. NGC is Nvidia’s hub for GPU-accelerated software, containerized applications, AI frameworks, domain-specific SDKs, pre-trained models and other resources.

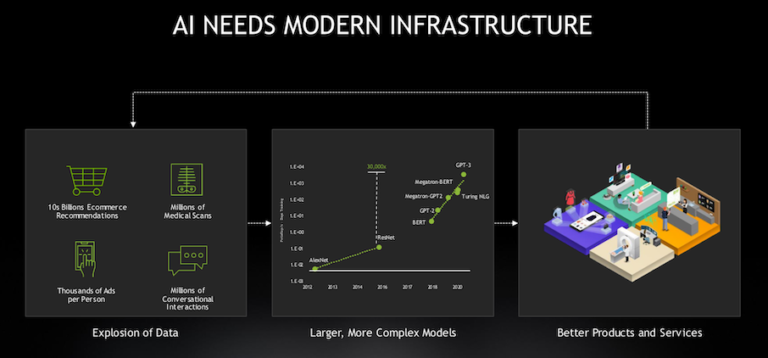

El-Hallak argued the growth of datasets, model sizes, and the dynamic nature of AI software and tools were challenging for all AI adopters, and that certified systems would mitigate some of the challenges. He cited use cases in finance, retail, and HPC where datasets and models have grown extremely large. “Walmart generates 2.5 petabytes every hour,” he said.

Nvidia said there is no cost to OEMs or other partners to participate in the Nvidia-certification program. Once certified, systems are eligible for contract software support directly from Nvidia. “This is where the OEM sells to the end user a support contract and that end user gets access directly to Nvidia. There’s a defined SLA (service level agreement) and escalation path. We support the entire software stack. So whether it’s the CUDA toolkits, the drivers, all the workloads that are certified to run on these systems, users have access directly into Nvidia [for] support,” said El-Hallak.

In the briefing, El-Hallak emphasized use of Mellanox interconnect products (Ethernet and InfiniBand), but in response to an email about whether Mellanox interconnect products were required, Nvidia said, “Partners [can] ship whatever networking their customer wants in Nvidia-certified systems and those systems will be eligible for Nvidia’s enterprise support services. During the certification process we need partners [to] use a standardized hardware and software environment to do a fair apples-to-apples comparison. That standardized environment includes specific releases of the OS, Docker, the Nvidia GPU Driver, and Nvidia network hardware and network drivers. If a partner doesn’t have the required Nvidia networking gear in their certification lab, Nvidia can loan it to them.”

Customers who buy the service contract have two paths to obtain support, according to Nvidia:

- Customer contacts OEM server vendor first. “If OEM server vendor determines that the problem is an Nvidia SW issue, we will request that the customer open a case with Nvidia and provide the OEM server vendor case number as well in case we need to collaborate and reference the case.”

- Customer contacts Nvidia first. “Customers can contact Nvidia through the Nvidia enterprise support portal, email or phone: https://www.nvidia.com/en-us/support/enterprise/. If Nvidia determines that the problem is an issue that the OEM server vendor is responsible for, we will request that the customer open a case with their OEM server vendor.”
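The two paths amount to a simple routing rule: whoever is contacted first either handles the case or hands the customer off to the other party. The sketch below is only an illustration of that rule as quoted above; the `Party` enum and `next_step` function are hypothetical names, not part of any Nvidia or OEM tool.

```python
from enum import Enum


class Party(Enum):
    """The two support organizations named in Nvidia's description."""
    OEM = "OEM server vendor"
    NVIDIA = "Nvidia"


def next_step(first_contact: Party, responsible: Party) -> str:
    """Return the customer's next action under the two quoted support paths.

    Hypothetical helper: `responsible` is whichever party the first
    contact determines is responsible for the problem.
    """
    if first_contact is responsible:
        return f"{first_contact.value} handles the case"
    if first_contact is Party.OEM:
        # Path 1: the OEM determines it is an Nvidia software issue.
        return "open a case with Nvidia and provide the OEM case number"
    # Path 2: Nvidia determines the OEM is responsible.
    return "open a case with the OEM server vendor"


if __name__ == "__main__":
    for first in Party:
        for owner in Party:
            print(f"first contact: {first.value}, responsible: {owner.value}"
                  f" -> {next_step(first, owner)}")
```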

Pricing for the Nvidia-certified systems software support is on a per-system basis and varies with the system configuration. As an example, Nvidia says the support cost for ‘volume’ servers featuring two A100 GPUs is about “$4,299 per system with a 3-year support term that customers can renew.”
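To put the quoted figure in perspective, a back-of-the-envelope breakdown follows. It is a rough sketch only: it assumes the $4,299 price applies unchanged to every system in a deployment, with no volume discounts or configuration differences, which Nvidia did not state. The function name and the ten-system example are illustrative.

```python
# Figures quoted by Nvidia in the article; everything else is an assumption.
SUPPORT_PRICE_PER_SYSTEM_USD = 4299  # 'volume' server with two A100 GPUs
SUPPORT_TERM_YEARS = 3               # quoted support term
GPUS_PER_SYSTEM = 2                  # configuration in Nvidia's example


def support_cost(num_systems: int) -> dict:
    """Break down support cost, assuming a flat per-system price (an assumption)."""
    per_system_per_year = SUPPORT_PRICE_PER_SYSTEM_USD / SUPPORT_TERM_YEARS
    return {
        "total_usd": SUPPORT_PRICE_PER_SYSTEM_USD * num_systems,
        "per_system_per_year_usd": per_system_per_year,
        "per_gpu_per_year_usd": per_system_per_year / GPUS_PER_SYSTEM,
    }


if __name__ == "__main__":
    # Hypothetical deployment of ten two-GPU servers.
    for name, value in support_cost(num_systems=10).items():
        print(f"{name}: {value:,.2f}")
```

Under those assumptions the quoted price works out to $1,433 per system per year, or about $716.50 per GPU per year.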

Both Freund and Rutten think it’s unlikely there will be a significant pricing differential between Nvidia-certified and uncertified systems.

Rutten said, “I think the server OEMs will increase their ASP somewhat for certified systems. But not a lot, because by now the market has learned fairly well how to deploy AI infrastructure and if ASPs go up too much, end users will decide they’d rather do it themselves than pay a premium, especially if they’re looking to deploy a large cluster where a premium is going to add up in absolute dollars.”

It will be interesting to watch how server vendors distinguish Nvidia-certified products from uncertified systems. To some extent, said Freund, there’s not much left to differentiate among GPU-based AI servers anyway beyond price. “I think all hardware vendors are already stuck in that mode, with exceptions such as Cray, and must differentiate on customer service, both before and after the sale,” he said.

Rutten suggested there is a little more wiggle room: “There are still various differentiators – host processors, storage, interconnects, the infrastructure stack which often has several proprietary layers, RAS features, and the purchasing model (think: HPE Greenlake). And the certified offers will have different performance results, based on these factors.”

Rutten did wonder whether two markets could arise: one of uncertified systems that can’t support Nvidia’s NGC stack and another of certified systems that can.

“I think that is a distinct possibility. It all depends on the price difference we’re going to see between certified and non-certified systems. If large, then we’ll see a whole secondary market evolve for non-certified systems, which cannot be what Nvidia intended to achieve. I haven’t spoken to OEMs yet, so don’t know what their pricing strategies will be, but that’s the crux of the matter.”

Maribel Lopez, founder of Lopez Research, said, “I think end buyers will feel comfortable with certified hardware but that doesn’t mean they won’t continue to purchase non-certified solutions. The big win for certified offerings comes in creating a set of systems where certain fundamental features and functions are a given. It helps organizations scale faster using the hardware. Certification is only one element of differentiation. Manageability and security are the other areas where HW vendors have to focus their differentiation efforts.”

It’s been a busy few years for Nvidia, whose early focus on GPUs has widened to encompass interconnect technology (Mellanox), AI software (NGC catalog), CPUs (pending Arm acquisition), and a foray into the large system business (DGX A100). The Nvidia-certified system program knits many of those elements together and potentially provides marketing ammunition for Nvidia partner OEMs.

This article first appeared on sister website HPCwire.