Nvidia Expands Its DPU Family, Unveils New Datacenter on Chip Architecture

Nvidia GTC 2020 – Nvidia kicked off its GTC 2020 conference with a wide range of intriguing AI announcements, from the introduction of its latest BlueField-2 Data Processing Unit (DPU) models to the unveiling of an all-new data-center-infrastructure-on-a-chip architecture (DOCA) designed to bring major enhancements for networking, storage and security.

Also announced were Nvidia’s DGX SuperPOD modular AI infrastructure, which is designed to let customers deploy powerful AI supercomputers in far less time than with traditional architectures, and the expansion of its Nvidia EGX edge computing platform to include its Ampere GPUs and its BlueField-2 DPUs on a single PCIe card.

A new Jetson AI and robotics starter kit was also unveiled, featuring a $59 price tag for a 2GB Jetson Nano developer kit that provides an introduction to Nvidia’s Jetson AI at the Edge platform.

The GTC 2020 event, which launched Monday (Oct. 5) and is being held virtually due to the COVID-19 pandemic, continues through Oct. 9.

The moves come as Nvidia continues to grow its expertise and power in the enterprise AI and computing markets, from its recently announced intentions to acquire chip IP vendor Arm for $40 billion, to its $7 billion acquisition of networking interconnect vendor Mellanox. The Mellanox deal, which closed in April, got Nvidia firmly entrenched in DPUs and networking innovations that are now being woven into its core markets. The BlueField DPU family came as part of the acquisition.

DPUs Will Help Lead the Way

Jensen Huang, Nvidia’s founder and CEO, said in his opening GTC 2020 keynote that much of his company’s future rests on the innovations being brought to AI and computing by DPUs.

“The data center has become the new unit of computing,” he said. “DPUs are an essential element of modern and secure accelerated data centers in which CPUs, GPUs and DPUs are able to combine into a single computing unit that’s fully programmable, AI-enabled and can deliver levels of security and compute power not previously possible.”

DPUs have different roles than traditional CPUs and GPUs, which focus on general-purpose compute and accelerated computing, respectively. DPUs make CPUs more efficient by taking over critical networking, storage and security tasks, which lets the CPUs focus on their primary work. The system impact is considerable – a single BlueField-2 DPU delivers the same data center services that would otherwise use up to 125 CPU cores, according to Nvidia. Those CPU resources can then be freed up to run critical enterprise applications.
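To put Nvidia's "up to 125 CPU cores" figure in perspective, the following is a minimal back-of-the-envelope sketch. It treats the quoted number as a best-case ceiling per DPU; the function name and the fleet size of eight DPUs are hypothetical and chosen only for illustration.

```python
# Upper bound quoted by Nvidia in the article: one BlueField-2 DPU can deliver
# data center services that would otherwise use up to 125 CPU cores.
MAX_CORES_PER_DPU = 125


def max_cores_offloaded(num_dpus: int) -> int:
    """Best-case number of CPU cores' worth of infrastructure work moved to DPUs."""
    if num_dpus < 0:
        raise ValueError("num_dpus must be non-negative")
    return num_dpus * MAX_CORES_PER_DPU


# Hypothetical deployment of eight DPUs, for illustration only.
print(max_cores_offloaded(8))  # prints 1000
```

Because the vendor figure is an "up to" number, real deployments would likely see less than this ceiling depending on the workload.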

DPUs are programmable processors in the form of a system on a chip (SoC). They include industry-standard, high-performance, software-programmable, multi-core CPUs, typically based on the Arm architecture, according to a May blog post by Nvidia. A DPU also includes a high-performance network interface that can parse, process and transfer data to GPUs and CPUs at the speed of the rest of the network.

The Newest BlueField DPU Models, and the DOCA That Supports Them

Announced at GTC 2020 are the upcoming Nvidia BlueField-2 and Nvidia BlueField-2X DPUs. The BlueField-2 combines eight 64-bit Arm Cortex-A72 cores, two VLIW acceleration engines and the Nvidia Mellanox ConnectX-6 Dx SmartNIC. The BlueField-2 offers accelerated security, with isolation, root of trust, crypto, key management and more, as well as accelerated networking and storage.

The Nvidia BlueField-2X DPU includes the features of the BlueField-2, as well as upgrades such as an Nvidia Ampere GPU and a PCIe Gen 4 interconnect between the DPU and the CPU. The DPU’s AI capabilities can be applied to data center security, networking and storage tasks. For example, the DPU can use AI for real-time security analytics, including identifying abnormal traffic that could indicate theft of confidential data, analyzing encrypted traffic at line rate, performing host introspection to identify malicious activity, and providing dynamic security orchestration and automated response.

BlueField-2 DPUs are currently being sampled and are expected to be featured in new systems from manufacturers in 2021. BlueField-2X DPUs are under development and are also expected to become available in 2021.

Manuvir Das, head of enterprise computing for Nvidia, said DPUs will not replace current CPUs based on x86 architectures in data centers. “We expect to see the DPU used in conjunction with the host CPU; the application workloads would continue to run on the host CPU and what the DPU is doing is offloading a lot of functionality that is separate from the application in a transparent manner so that the application can have all of the resources on the host CPU.”

As enterprises use specific applications closer to the edge, perhaps for inference on the edge, “then one can certainly envision scenarios where the combination of the CPU and the capabilities of the DPU are sufficient to run the particular inference workloads that have to be run on most devices on the edge,” said Das.

When asked which Ampere GPU is being used in the BlueField-2X, Das declined to comment.

“We are not specifying that, but I can tell you it is not the A100, which, as you know, is our flagship Ampere GPU,” he said.

The new DOCA architecture, which is being built to take advantage of the latest Nvidia DPUs, includes a new software development kit (SDK) that enables developers to build applications on DPU-accelerated data center infrastructure services. DOCA is a comprehensive, open platform for building software-defined, hardware-accelerated networking, storage, security and management applications running on the BlueField family of DPUs. It is fully integrated into the Nvidia NGC software catalog. DOCA is now available to early-access Nvidia partners.

The BlueField-2 DPU was previously unveiled by Nvidia on Sept. 29 at VMworld in partnership with VMware.

Several leading global server manufacturers, including ASUS, Atos, Dell Technologies, Fujitsu, GIGABYTE, H3C, Inspur, Lenovo, Quanta/QCT and Supermicro, said they plan to integrate the new Nvidia DPUs into their enterprise server offerings in the future.

Nvidia DGX SuperPODs – AI Supercomputing for Enterprises

Also announced at GTC 2020 are Nvidia’s new ready-made DGX SuperPODs, which the company is describing as a turnkey AI infrastructure that allows enterprises to acquire powerful AI supercomputers in just a few weeks. The DGX SuperPODs are available in cluster sizes ranging from 20 to 140 individual DGX A100 systems and are now shipping. The first systems are expected to be installed in Korea, the U.K., Sweden and India before the end of the year, according to Nvidia.

DGX SuperPODs come in 20-unit modules that are interconnected with Nvidia Mellanox HDR InfiniBand networking. The systems start at 100 petaflops of AI performance and can scale up to 700 petaflops to run complex AI workloads. Each DGX SuperPOD is racked, stacked and configured by Nvidia-certified partners to help customers get up and running as quickly as possible.
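The SuperPOD figures quoted above are mutually consistent if AI performance scales linearly with the number of DGX A100 systems, at 5 petaflops per system (100 petaflops divided by 20 systems). The short sketch below works through that arithmetic. The linear-scaling assumption and the function name are illustrative, not something Nvidia states in these terms.

```python
MODULE_SIZE = 20                    # DGX A100 systems per module (from the article)
MIN_SYSTEMS, MAX_SYSTEMS = 20, 140  # cluster size range (from the article)
PFLOPS_PER_SYSTEM = 100 / 20        # implied by 100 petaflops for a 20-system cluster


def superpod_ai_petaflops(num_systems: int) -> float:
    """Estimated AI petaflops for a SuperPOD, assuming linear scaling per system."""
    if not MIN_SYSTEMS <= num_systems <= MAX_SYSTEMS:
        raise ValueError("cluster size must be between 20 and 140 systems")
    if num_systems % MODULE_SIZE != 0:
        raise ValueError("SuperPODs are built from 20-system modules")
    return num_systems * PFLOPS_PER_SYSTEM


# Walk through every valid cluster size, one module at a time.
for n in range(MIN_SYSTEMS, MAX_SYSTEMS + 1, MODULE_SIZE):
    print(f"{n:3d} systems -> {superpod_ai_petaflops(n):.0f} petaflops")
```

Under this assumption, the largest configuration of seven modules (140 systems) lands at the 700 petaflops quoted by Nvidia.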

Nvidia’s EGX AI Platform Gains Combined Ampere and BlueField DPU Power

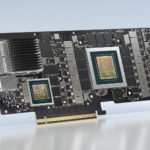

Nvidia’s EGX AI platform also got attention at GTC 2020, with the announcement that the server line is being expanded to include components that combine the company’s Ampere GPU with a BlueField-2 DPU on the same PCIe card for the first time. By pairing the technologies, enterprises will gain a common platform to build secure, accelerated data centers, according to Nvidia.

“Companies around the world will come to use AI to improve virtually every aspect of their business,” Huang said during his keynote. “To support this massive shift, Nvidia has created an accelerated computing platform that helps companies modernize their data centers and deploy AI anywhere.”

The EGX platform is an optimized AI software stack available from the Nvidia NGC software catalog.

New Jetson AI and Robotics Starter Kit Available

In an announcement aimed at students, educators and robotics hobbyists, Nvidia also unveiled its latest Jetson AI computer in a kit, the Jetson Nano 2GB Developer Kit. Priced at $59, the entry-level kit includes a Jetson board along with free online training and AI-certification programs to get started and get up to speed.

Designed to open AI and robotics to a new generation of students, educators and hobbyists, the kits are the latest in the company’s line of Jetson products.

“While today’s students and engineers are programming computers, in the near future they’ll be interacting with, and imparting AI to, robots,” said Deepu Talla, the vice president and general manager of edge computing at Nvidia. “The new Jetson Nano is the ultimate starter AI computer that allows hands-on learning and experimentation at an incredibly affordable price.”

The latest Jetson kit is supported by the Nvidia JetPack SDK, which comes with the Nvidia container runtime and a full Linux software development environment. The kit allows developers to package their applications for Jetson, with all their dependencies, into a single container that is designed to work in any deployment. It is powered by the Nvidia CUDA-X accelerated computing stack that’s also used to create AI products in such fields as self-driving cars, industrial IoT, healthcare, smart cities and more.

In other news at GTC 2020, Nvidia announced plans to build a new AI supercomputer, to be called Cambridge-1, which will be dedicated to biomedical research and healthcare. The UK-based system will deliver more than 400 petaflops of AI performance, according to Nvidia. The new system is named for the University of Cambridge, where Francis Crick and James Watson and their colleagues famously worked on solving the structure of DNA. It will feature 80 DGX A100s, 20 terabytes/sec InfiniBand and 2 petabytes of NVMe memory, and it will require 500 kW of power.
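As a rough sanity check on the Cambridge-1 numbers, the sketch below applies the per-system figure implied by the SuperPOD announcement earlier in the article (100 petaflops for 20 DGX A100 systems, or 5 petaflops each) to the 80 systems planned for Cambridge-1. Reusing that per-system figure here is an assumption, and the variable names are illustrative.

```python
# Assumption: the same per-system figure implied by the SuperPOD numbers
# in this article (100 petaflops for a 20-system cluster).
PFLOPS_PER_DGX_A100 = 100 / 20

CAMBRIDGE_1_SYSTEMS = 80  # number of DGX A100s (from the article)

# Estimated AI performance under the linear-scaling assumption.
cambridge_1_petaflops = CAMBRIDGE_1_SYSTEMS * PFLOPS_PER_DGX_A100

print(f"Estimated AI performance: {cambridge_1_petaflops:.0f} petaflops")
```

The result, 400 petaflops, lines up with the "more than 400 petaflops" that Nvidia quotes for the system.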