New AI Training Method Works on Individual Desktops, Laptops

Training AI models can be incredibly computationally intensive. Researchers often use supercomputers to ready the models, and the impact can be severe: training a single model can emit as much carbon as five cars do over their entire lifespans. Apart from the upfront costs and impacts, this computational intensity can also raise the barrier to entry for AI models – a somewhat paradoxical situation for models that are designed to provide more efficient, lower-cost solutions to research problems. Now, researchers from the University of Southern California (USC) and Intel Labs have developed a new method for training AI models on individual desktop workstations.

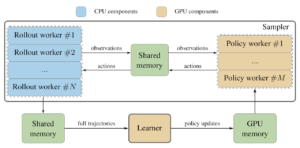

The new method, presented as a paper at the 2020 International Conference on Machine Learning (ICML), trains deep reinforcement learning (RL) algorithms. Aleksei Petrenko, a graduate student at USC, began the project when his Intel internship ended and he lost access to the powerful computing clusters that had hitherto been powering his ongoing RL work. Resolving to remove the necessity for such computing power, Petrenko and his colleagues began work on “Sample Factory,” which splits up typically bundled RL jobs so that they can be individually optimized and run on separate components of a computer. The researchers also placed all of the training data in shared memory so that the separate processes can access it more quickly.
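To give a rough sense of the shared-memory idea, here is a minimal Python sketch. It is not Sample Factory's code: the names `rollout_worker`, `run_demo`, `FRAMES_PER_SLOT`, and `NUM_WORKERS` are hypothetical, the "observations" are made-up numbers, and the example assumes a Unix-like system where the `fork` start method is available. Worker processes write into one shared buffer, and only a small slot index travels through the queue to the reader.

```python
import multiprocessing as mp

FRAMES_PER_SLOT = 4  # hypothetical: values each worker writes per rollout
NUM_WORKERS = 3      # hypothetical number of worker processes


def rollout_worker(worker_id, buffer, ready_queue):
    """Fill this worker's slot of the shared buffer, then announce the slot."""
    start = worker_id * FRAMES_PER_SLOT
    for i in range(FRAMES_PER_SLOT):
        # Stand-in for environment data; a real worker would step a simulator.
        buffer[start + i] = float(worker_id * 100 + i)
    # Only the slot index crosses the queue; the data stays in shared memory.
    ready_queue.put(worker_id)


def run_demo():
    # Assumption: "fork" is available (Linux, macOS); it is not on Windows.
    ctx = mp.get_context("fork")
    # One block of doubles, allocated in shared memory, visible to all workers.
    buffer = ctx.RawArray("d", NUM_WORKERS * FRAMES_PER_SLOT)
    ready_queue = ctx.Queue()

    workers = [
        ctx.Process(target=rollout_worker, args=(w, buffer, ready_queue))
        for w in range(NUM_WORKERS)
    ]
    for proc in workers:
        proc.start()

    # The "learner" side: read each slot in place as soon as it is ready.
    totals = {}
    for _ in range(NUM_WORKERS):
        slot = ready_queue.get()
        start = slot * FRAMES_PER_SLOT
        totals[slot] = sum(buffer[start:start + FRAMES_PER_SLOT])

    for proc in workers:
        proc.join()
    return totals


if __name__ == "__main__":
    print(run_demo())
```

In this toy version the queue carries three integers while the twelve data values never leave the shared buffer, which is the general reason such a layout can cut down on copying between processes.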

Sample Factory was tested by teaching the AI to play the classic first-person shooter game Doom, along with 30 other 3D challenges provided by DeepMind. On a single workstation with one (high-core-count) CPU and one GPU, the team achieved 140,000 frames per second of analysis – double the next-best method. When scaled up to more powerful machines, Sample Factory performed even better: a four-GPU machine tackled DeepMind’s 30 challenges so quickly that it outperformed the AI on which DeepMind tested its own challenges, which was trained on a much more powerful computer.

“From my experience, a lot of researchers don’t have access to cutting-edge, fancy hardware,” Petrenko said in an interview with IEEE Spectrum's Edd Gent. “We realized that just by rethinking in terms of maximizing the hardware utilization you can actually approach the performance you will usually squeeze out of a big cluster even on a single workstation.”

And though the workstation used for the experiments was a relatively powerful desktop, the researchers are also seeing gains on consumer-grade machines: Petrenko, for example, ran deep RL experiments on his mid-range laptop – a leap that he said was “unheard of.”