Google Cloud Debuts 16-GPU Ampere A100 Instances

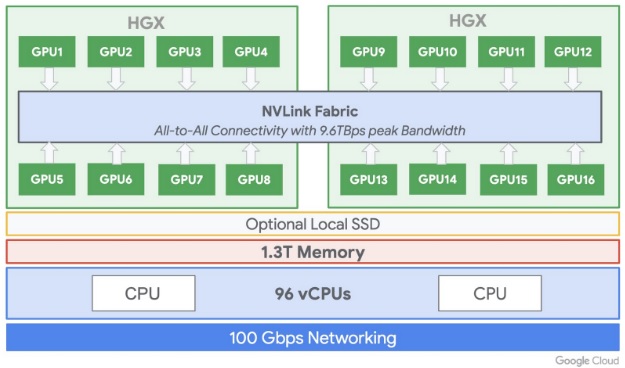

On the heels of Nvidia’s Ampere A100 GPU launch in May, Google Cloud is announcing alpha availability of the A100 “Accelerator Optimized” VM A2 instance family on Google Compute Engine. The instances are powered by the HGX A100 16-GPU platform, which combines two HGX A100 8-GPU baseboards using an NVSwitch interconnect.

The new instance family targets machine learning training and inferencing, data analytics, and high-performance computing workloads. With new tensor and sparsity capabilities, each A100 GPU offers up to a 20x performance boost compared with the previous-generation GPU, according to Nvidia.

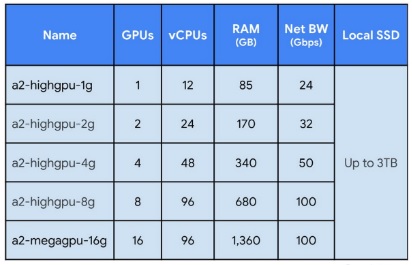

The A2 family of VMs is offered in five configurations, from one to 16 GPUs, with two different CPU- and networking-to-GPU ratios. Each GPU can be partitioned into as many as seven distinct GPU instances, owing to Ampere’s multi-instance GPU (MIG) capability.

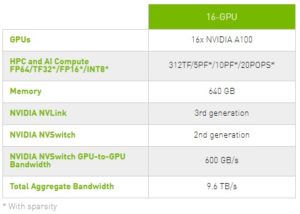

Customers with the most demanding AI workloads will be able to access the full 16-GPU instance, which offers a total of 640GB of GPU memory and 1.3TB of system memory, connected through NVSwitch with up to 9.6TB/s of aggregate bandwidth. Pricing for the A2 family has not been disclosed, but purchasing this hardware outright would cost nearly $400,000. That figure illustrates the democratizing power of the cloud-based approach, where the latest HPC hardware is accessible on demand at a fraction of that cost.
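For readers who want to sanity-check those totals, the short Python sketch below backs out the implied per-GPU figures from the aggregate numbers quoted above. It assumes the totals are split evenly across all 16 GPUs and that the seven-way partitioning applies to every GPU in the instance. The function names are illustrative only and are not part of any Google Cloud or Nvidia API.

```python
# Back-of-the-envelope check of the 16-GPU A2 figures quoted in the article.
# Assumption: totals divide evenly across GPUs; helper names are hypothetical.

GPUS = 16                       # full A2 instance
TOTAL_GPU_MEMORY_GB = 640       # total GPU memory quoted in the article
AGGREGATE_BANDWIDTH_TBPS = 9.6  # aggregate NVSwitch bandwidth quoted in the article
MIG_PARTITIONS_PER_GPU = 7      # maximum partitions per GPU quoted in the article


def memory_per_gpu_gb(total_gb: float = TOTAL_GPU_MEMORY_GB, gpus: int = GPUS) -> float:
    """Implied memory per GPU, assuming an even split."""
    return total_gb / gpus


def bandwidth_per_gpu_gbps(total_tbps: float = AGGREGATE_BANDWIDTH_TBPS, gpus: int = GPUS) -> float:
    """Implied bandwidth per GPU in GB/s, using 1 TB = 1000 GB."""
    return total_tbps * 1000 / gpus


def max_mig_partitions(gpus: int = GPUS, per_gpu: int = MIG_PARTITIONS_PER_GPU) -> int:
    """Upper bound on GPU partitions if every GPU is split to the maximum."""
    return gpus * per_gpu


if __name__ == "__main__":
    print(f"Memory per GPU:     {memory_per_gpu_gb():.0f} GB")
    print(f"Bandwidth per GPU:  {bandwidth_per_gpu_gbps():.0f} GB/s")
    print(f"Max MIG partitions: {max_mig_partitions()}")
```

Under those assumptions, the totals work out to roughly 40GB of memory and 600GB/s of interconnect bandwidth per GPU, and as many as 112 partitions on a fully subdivided 16-GPU instance.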

Recall that while Nvidia’s DGX A100 systems are equipped with 64-core AMD second-gen Epyc Rome processors, the HGX platform can be configured with either AMD or Intel processors. Google Cloud opted for the latter option, and the A2 machines offer from 12 to 96 Intel Cascade Lake vCPUs, plus optional local SSD (up to 3TB).

Google Cloud is introducing the new A2 family less than two months after Ampere’s arrival. This is a record time from GPU chip launch to cloud adoption, reflecting the increased demand for HPC in the cloud, driven by AI workloads. Cloud providers have been steadily shortening the time it takes to deploy the latest accelerator gear. It took two years for Nvidia’s K80 GPUs to make it into the cloud (AWS), contracting to about a one-year cadence for Pascal, five months for Volta, and now mere weeks for Ampere. Google notes it was also the first cloud provider to debut Nvidia’s T4 graphics processor. (Google was also first with Pascal P100 instances; AWS skipped Pascal but was first with Volta.)

Google Cloud also announced forthcoming Nvidia A100 support for Google Kubernetes Engine, Cloud AI Platform, and other services.

Based on statements made as part of the Ampere launch, we may expect adoption of the A100 by other prominent cloud vendors, including Amazon Web Services, Microsoft Azure, Baidu Cloud, Tencent Cloud and Alibaba Cloud.

A2 instances are currently available via a private alpha program, and Google reports that public availability and pricing will be announced later this year.

Compute Engine’s new A2-MegaGPU VM: 16 A100 GPUs with up to 9.6 TB/s NVLINK Bandwidth (Source: Google Cloud)