AMD Epyc Rome Tabbed for Nvidia’s New DGX, but HGX Has Intel Option

AMD continues to make inroads into the data center with its second-generation Epyc “Rome” processor, which last week scored a win with Nvidia’s announcement that its new Ampere-based DGX system would rely on AMD rather than Intel CPUs. However, Nvidia partners — OEMs and hyperscalers — can still opt for Intel CPUs when building Ampere-based HGX servers, using the HGX baseboards that are at the heart of the new DGX.

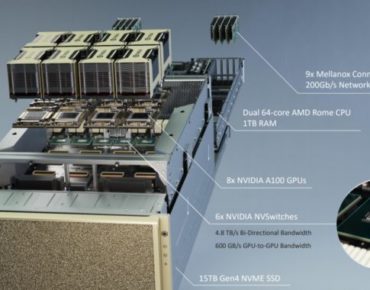

In addition to the eight A100 Ampere-based GPUs that power the DGX A100 for AI and HPC workloads, Nvidia has dropped in two AMD Epyc 7742 processors, which provide a total of 128 cores, with 8 memory channels and 128 PCIe 4.0 lanes per CPU.

In a media briefing, Nvidia’s Charlie Boyle, vice president and general manager of DGX Systems, described the decision to switch CPU suppliers as a technical one. “As we looked in the market as we always do for our designs because we’re agnostic on the CPU,” he said. “We just want to use the best platform and the AMD CPU was the right choice.”

Rome’s PCIe Gen4 support and high core count were cited as the main motivators. Currently, Intel Xeon 8200-R CPUs top out at 28 cores and use PCIe Gen3.

The DGX A100 – Nvidia’s third-generation DGX system – is the first to incorporate PCIe Gen4, which doubles the I/O bandwidth on the PCIe bus. Taking advantage of that required every component in the system to support Gen4 as well. “The Mellanox CX6 200-gig networking cards, the PCIe switching, and the NVMe storage used inside the system are Gen4, and we needed a CPU complex that could power the eight A100 GPUs,” said Boyle. “That’s why we went with the AMD CPU; it has the PCIe Gen4 support that we needed, and also the very high core count that we need. As we’re addressing these larger and larger AI problems, you need those cores to get the data into the GPUs.”
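For readers who want to see where the doubling comes from, here is a rough back-of-the-envelope sketch. It uses the published PCIe signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4, both with 128b/130b encoding). The x16 link width and the helper name `link_bandwidth_gb_s` are assumptions made for illustration, not details from Nvidia’s briefing, and the figures ignore protocol overhead.

```python
# Approximate per-direction PCIe throughput from published signaling rates.
# Both generations use 128b/130b line encoding.
TRANSFER_RATE_GT_S = {"gen3": 8.0, "gen4": 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Usable bandwidth in GB/s, one direction, before protocol overhead.

    The default of 16 lanes is an assumption for illustration only.
    """
    bits_per_second = TRANSFER_RATE_GT_S[generation] * 1e9 * ENCODING_EFFICIENCY
    return bits_per_second / 8 / 1e9 * lanes


gen3 = link_bandwidth_gb_s("gen3")
gen4 = link_bandwidth_gb_s("gen4")
print(f"Gen3 x16: {gen3:.2f} GB/s, Gen4 x16: {gen4:.2f} GB/s, ratio: {gen4 / gen3:.1f}x")
```

Because the encoding is the same in both generations, the ratio depends only on the signaling rate, which is why Gen4 comes out at twice Gen3 regardless of link width.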

Boyle also noted that the x86 underpinnings and AMD engineering mean that it’s seamless for customers to transfer to the new system. “[They] can take code they were running on their V100 DGX systems, put it on an A100 DGX, and it just runs faster, they don’t need to change any code,” he said.

Intel’s Ice Lake server processors, based on the 10nm+ process node, are due to become available in the third quarter of 2020. Core count has not been officially disclosed, but like AMD’s second-gen Epycs, the upcoming Intel processors will support PCIe Gen4 and eight channels of memory (up from six).

HGX systems with AMD’s forthcoming 7nm Epyc CPU, Milan, are already on the roadmap of at least one system supplier. Atos revealed that its HGX-based X2415 BullSequana blades will support Epyc Rome and (future) Milan CPUs.

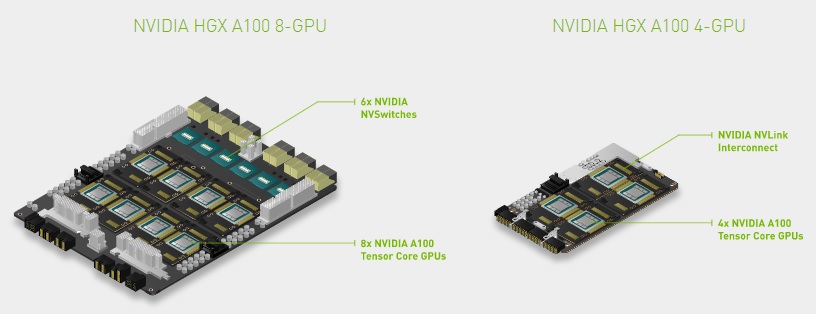

While Nvidia has swapped out Xeons in favor of Epycs in its latest DGX systems, the HGX A100 baseboards used inside partner servers can still utilize Intel Xeon CPUs. Gigabyte, for example, has four new HGX A100 servers in development and plans to offer 8-GPU and 4-GPU HGX A100 configurations with either second-generation AMD Epycs or third-generation Intel Xeon Scalable CPUs.

With over a decade’s experience covering the HPC space, Tiffany Trader is one of the preeminent voices reporting on advanced scale computing today.