Talk to Me: Nvidia Claims NLP Inference, Training Records

Nvidia says it has achieved significant advances in conversational natural language processing (NLP) training and inference, enabling more complex, immediate-response exchanges between customers and chatbots. The company also says it has a new language model in the works that dwarfs existing ones.

Nvidia said its DGX-2 AI platform trained the BERT-Large AI language model in less than an hour and performed AI inference in just over 2 milliseconds, making “it possible for developers to use state-of-the-art language understanding for large-scale applications....”

In its announcement, Nvidia claimed three NLP records:

Training: Running the largest version of the Bidirectional Encoder Representations from Transformers language model (BERT-Large), an Nvidia DGX SuperPOD with 92 Nvidia DGX-2H systems and a total of 1,472 V100 GPUs cut training time from several days to 53 minutes. A single DGX-2 system trained BERT-Large in 2.8 days.

This is important, Nvidia’s Bryan Catanzaro, VP, applied deep learning research, said, because “researchers are constantly improving their models in order to make conversational AI better, so the time it takes to train a model translates into the research progress. The quicker we can train a model, the more models we can train, the more we learn about the problem, and the better the results get.”
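The training figures above can be sanity-checked with a little arithmetic. The sketch below uses only the numbers quoted in the announcement; the variable names are illustrative. It assumes the single-system DGX-2 run and the SuperPOD's DGX-2H systems are roughly comparable and used the same training recipe, which the announcement does not state, so the efficiency figure is a rough estimate rather than a measured result.

```python
# Figures as quoted in Nvidia's announcement.
SYSTEMS = 92                # DGX-2H systems in the SuperPOD
TOTAL_GPUS = 1_472          # V100 GPUs across the SuperPOD
SINGLE_SYSTEM_DAYS = 2.8    # BERT-Large training time on one DGX-2
SUPERPOD_MINUTES = 53       # BERT-Large training time on the SuperPOD

# GPUs per system implied by the quoted totals.
gpus_per_system = TOTAL_GPUS / SYSTEMS

# Convert the single-system time to minutes so both runs share a unit.
single_system_minutes = SINGLE_SYSTEM_DAYS * 24 * 60

# Speedup of the SuperPOD over one system, and the fraction of ideal
# (linear) scaling that speedup represents. Assumption: the two runs
# are comparable, which the announcement does not confirm.
speedup = single_system_minutes / SUPERPOD_MINUTES
scaling_efficiency = speedup / SYSTEMS

print(f"GPUs per system:    {gpus_per_system:.0f}")
print(f"Speedup:            {speedup:.1f}x")
print(f"Scaling efficiency: {scaling_efficiency:.0%}")
```

Under those assumptions, 92 systems deliver a speedup of roughly 76x over one system, or a little over 80 percent of perfect linear scaling.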

Inference: Using Nvidia T4 GPUs with its TensorRT deep learning inference platform, Nvidia performed inference with BERT-Base on the SQuAD dataset in 2.2 milliseconds, “well under the 10-millisecond processing threshold for many real-time applications, and a sharp improvement from over 40 milliseconds measured with highly optimized CPU code,” the company said.

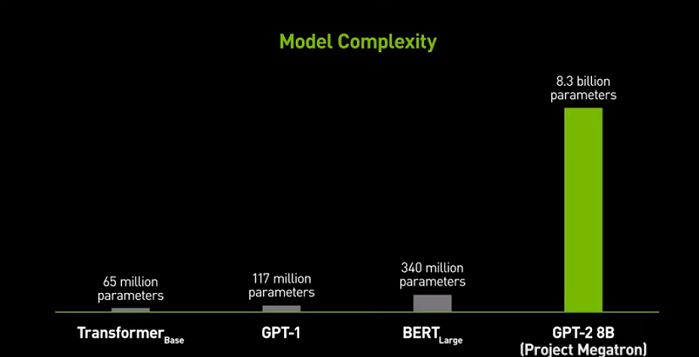

Model: Nvidia said its new custom model, dubbed Megatron, has 8.3 billion parameters, making it 24x bigger than the 343 million-parameter BERT-Large and the world's largest language model based on Transformers, the building block used for BERT and other natural language AI models.

“We’ve seen that as language models get bigger and we train them on more data, they get a lot more useful,” Catanzaro said, adding that the goal of Megatron “is to train the biggest, baddest Transformers out there.”

The number of digital voice assistants in operation is predicted to grow from 2.5 billion today to 8 billion within the next four years, according to Juniper Research. Gartner predicts that within two years, 15 percent of customer service interactions will be handled completely by AI, an increase of 400 percent compared with two years ago.

As part of its announcement, Nvidia said Bing search engine developers use the Microsoft Azure AI platform and Nvidia technology to run BERT for voice-activated internet search. Rangan Majumder, Bing group program manager, said the two companies optimized BERT and “achieved two times the latency reduction and five times throughput improvement during inference using Azure Nvidia GPUs compared with a CPU-based platform….”

Startups in Nvidia’s Inception program, including Clinc, Passage AI and Recordsure, are working with Nvidia to build conversational AI services. Clinc's customers, which include car manufacturers, healthcare organizations and financial institutions (Barclays, USAA and Isbank), have used its NLP with 30 million people globally. “Clinc's leading AI platform understands complex questions and transforms them into powerful, actionable insights for the world's leading brands,” said Jason Mars, Clinc CEO.