AI’s Carbon Footprint May Be Bigger Than You Think

Data centers and supercomputers running AI workloads consume a lot of power, and power use causes carbon emissions. That’s generally known, but it’s not widely appreciated just how energy- and carbon-intensive AI can be. Recently, a team of researchers at the University of Massachusetts Amherst used neural network training as a case study to examine this problem – and found that a single training run can have a carbon footprint equal to that of several automobiles over their entire lifespans.

“[Natural language processing] (NLP) models are costly to train and develop,” the researchers wrote. “[Both] financially, due to the cost of hardware and electricity or cloud compute time, and environmentally, due to the carbon footprint required to fuel modern tensor processing hardware.”

The Approach

To quantify these costs, the researchers examined the impacts of training a variety of popular neural network models for NLP. Model training, they explain, requires powering expensive computational resources for weeks or months at a time – and even though some of that energy comes from renewable sources or is offset using carbon credits, much of it is not.

The researchers trained four models – Transformer, ELMo, BERT and GPT-2 – on a small number of GPUs over the course of a single day (maximum) per model, sampling power consumption throughout the training. They also trained the Transformer “big” model for 3.5 days.

Then, using estimated training times from preexisting research, they extrapolated total energy consumption over the full training process for each model. Finally, they multiplied that energy total by an EPA-provided value for carbon dioxide emissions per unit of energy (in the U.S.) to estimate total emissions.
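The extrapolation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the researchers' actual code: the function name, its parameters, and every number in the usage example are hypothetical, and the emissions factor is passed in as an argument because the article does not quote the EPA value.

```python
from statistics import mean


def estimate_emissions_lbs(power_samples_watts, training_hours, lbs_co2_per_kwh):
    """Estimate CO2 emissions (in pounds) for a full training run.

    power_samples_watts: power draw readings sampled during a short run (watts)
    training_hours:      reported total training time for the model (hours)
    lbs_co2_per_kwh:     emissions factor, pounds of CO2 per kilowatt-hour
    """
    if not power_samples_watts:
        raise ValueError("at least one power sample is required")

    # Average the sampled draw and convert watts to kilowatts.
    average_kw = mean(power_samples_watts) / 1000.0

    # Extrapolate energy use over the whole training run (kWh).
    energy_kwh = average_kw * training_hours

    # Convert energy to emissions with the per-kWh factor.
    return energy_kwh * lbs_co2_per_kwh


# Illustrative numbers only: they are assumptions, not figures from the paper.
samples = [240.0, 260.0, 250.0]
emissions = estimate_emissions_lbs(samples, training_hours=100.0, lbs_co2_per_kwh=1.0)
print(f"Estimated emissions: {emissions:.1f} lbs CO2")
```

The sketch assumes that the average draw measured over a short run holds for the entire training period, which is the same simplification the extrapolation step relies on.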

The (Worrying) Results

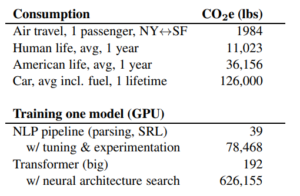

Estimated carbon dioxide equivalent emissions from training common NLP models, compared to familiar consumption. Chart courtesy of the authors.

To the untrained eye, the result is just a list of numbers, so the researchers work to put it in context. “Training BERT,” they write, “is roughly equivalent to a trans-American flight.” Training the Transformer “big” model for work on neural architecture search (NAS) was estimated at a whopping 626,155 pounds of carbon dioxide equivalent – as much as five average cars (including fuel) over their entire expected vehicle lifespans. This energy use translates to real financial costs as well: hundreds or thousands of dollars to train a single model.
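For readers who want to check the scale of the largest figure, the short Python sketch below redoes the arithmetic. The 626,155-pound total and the five-car comparison come from the article. The per-car value is only what that comparison implies, not a number quoted here, and the variable names are illustrative.

```python
# Figures taken from the article.
NAS_EMISSIONS_LBS = 626_155   # Transformer "big" with neural architecture search
CARS_EQUIVALENT = 5           # "as much as five average cars"

# Standard unit conversion: one pound is exactly 0.45359237 kilograms.
KG_PER_LB = 0.45359237

# Lifetime emissions per car implied by the comparison (an inference, not a quoted value).
implied_lbs_per_car = NAS_EMISSIONS_LBS / CARS_EQUIVALENT

# The same total expressed in metric tons (1 metric ton = 1,000 kg).
nas_tonnes = NAS_EMISSIONS_LBS * KG_PER_LB / 1000

print(f"Implied lifetime emissions per car: {implied_lbs_per_car:,.0f} lbs")
print(f"NAS training run: {nas_tonnes:,.1f} metric tons CO2e")
```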

The authors, eager to improve the situation, make a series of recommendations. They suggest that researchers should report training times when proposing new models and should also prioritize computationally efficient hardware and algorithms. Beyond efficiency, they worry that the costs may restrict this kind of work to wealthier parties, and recommend that access to computing resources be more equitably distributed.

About the Research

The paper discussed in this article, “Energy and Policy Considerations for Deep Learning in NLP,” was written by Emma Strubell, Ananya Ganesh and Andrew McCallum of the College of Information and Computer Sciences at the University of Massachusetts Amherst.