Nvidia EGX Pushes Real-Time AI to the Edge

Nvidia is combining its GPUs and AI software stack in a scalable edge computing platform designed to enable low-latency edge AI “to perceive, understand and act in real time on continuous streaming data between 5G base stations,” according to the company.

Announced at Computex in Taipei, Nvidia’s new EGX was created to perform high-throughput AI using data created by the 150 billion machine sensors and IoT devices expected to be in operation by 2025, according to industry watcher IDC. “Edge servers like those in the NVIDIA EGX platform will be distributed throughout the world to process data in real time from these sensors,” Nvidia said.

EGX scales from Nvidia’s compact Jetson Nano, which delivers one-half trillion operations per second (0.5 TOPS), up to a full rack of NVIDIA T4 servers, which delivers more than 10,000 TOPS for such workloads as real-time speech recognition. “This means an AI microservice running Azure IoT can process about four cameras in the small form factors, and in a full rack it can process up to 3000 cameras,” said Justin Boitano, Nvidia senior director of enterprise and edge computing, during a pre-announcement press briefing last week. “So there’s really a scalable architecture here for doing vision or speech types of problems using artificial intelligence.”
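As a rough back-of-the-envelope check, the quoted figures can be turned into compute available per camera stream. The sketch below uses only the numbers cited above, which are themselves approximate ("about four" cameras, "up to 3000" cameras, "more than 10,000 TOPS"). The per-camera ratios are derived here for illustration and are not figures Nvidia stated.

```python
# Figures quoted in the article. The per-camera ratios computed below are
# derived for illustration only and were not stated by Nvidia.
PLATFORMS = {
    "Jetson Nano": {"tops": 0.5, "cameras": 4},
    "Full rack of T4 servers": {"tops": 10_000, "cameras": 3_000},
}


def tops_per_camera(tops, cameras):
    """Return the compute budget (in TOPS) available per camera stream."""
    return tops / cameras


if __name__ == "__main__":
    for name, spec in PLATFORMS.items():
        ratio = tops_per_camera(spec["tops"], spec["cameras"])
        print(f"{name}: {ratio:.3f} TOPS per camera")
```

The two ratios differ considerably, which suggests the small and large configurations are not running identical per-camera workloads. The briefing did not say what differs.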

EGX incorporates a partnership between Nvidia and Red Hat to integrate Nvidia Edge Stack software with OpenShift, Red Hat’s Kubernetes container orchestration platform. On the networking front, Nvidia said EGX uses Smart NICs (network interface cards) and switches from Mellanox, which Nvidia announced in March that it plans to acquire, along with Cisco security, networking and storage technologies.

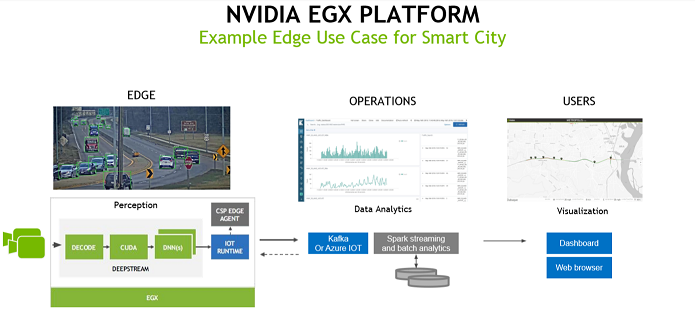

NVIDIA Edge Stack is software built around CUDA, the company’s parallel computing platform and API model, that includes NVIDIA drivers, a CUDA Kubernetes plugin, a CUDA container runtime, CUDA-X libraries and containerized AI frameworks and applications, including TensorRT, TensorRT Inference Server and DeepStream. NVIDIA Edge Stack is optimized for certified servers and is downloadable from the Nvidia NGC registry.

“For machines to hear and respond to human input requires this compute to be at the edge,” Boitano said. “To address AI for speech or for vision Nvidia has been focused on optimizing the full software stack of technologies. It starts with the GPUs, above that we tune algorithms and put them into CUDA-X acceleration libraries, and then we package optimized frameworks and applications and put them into a registry, so it’s easy to download these tools basically for free.”

Boitano outlined an edge AI image recognition scenario: a smart city application that processes roadside streaming video, in which a containerized application uses CUDA to pre-process the video and run multiple classification models.

“So if I want to look for cars broken down on the side of the road or emergency vehicles, once you classify something of interest, like an accident, that somebody running the operational offices of a smart city would want to act on, (the system needs) to have a way to collect alerts and send them back to the command and control center…,” Boitano said. “What’s being passed back from the edge to the data center are really small messages, just on the activities of interest, rather than streaming the full video. By sending messages you’re sending back less than 1/100th of the data you’d otherwise have to send back if you were just streaming video.”
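The pattern Boitano describes, classifying at the edge and sending back only small messages about events of interest, can be sketched roughly as follows. This is a minimal illustration and not Nvidia's implementation: the `Detection` type, the label names, the confidence threshold and the JSON message layout are all hypothetical, and the classifier output is hard-coded rather than produced by a real model.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical labels a smart-city operations center might want alerts for.
EVENTS_OF_INTEREST = {"accident", "stalled_vehicle", "emergency_vehicle"}


@dataclass
class Detection:
    """One classification result for a video frame (hypothetical schema)."""
    camera_id: str
    frame_index: int
    label: str
    confidence: float


def build_alerts(detections, min_confidence=0.8):
    """Keep confident detections of interest and encode each as compact JSON bytes."""
    alerts = []
    for det in detections:
        if det.label in EVENTS_OF_INTEREST and det.confidence >= min_confidence:
            payload = json.dumps(asdict(det), separators=(",", ":"))
            alerts.append(payload.encode("utf-8"))
    return alerts


if __name__ == "__main__":
    # Stand-in for classifier output; a real deployment would get this from a model.
    detections = [
        Detection("cam-01", 100, "car", 0.97),
        Detection("cam-01", 101, "accident", 0.91),
        Detection("cam-02", 57, "emergency_vehicle", 0.55),
    ]
    for message in build_alerts(detections):
        # Only these short messages would leave the edge, not the video frames.
        print(len(message), "bytes:", message.decode("utf-8"))
```

Each alert is a short JSON message, far smaller than the video frames it summarizes, which is the source of the bandwidth saving described in the quote.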

And by using containers, “you don’t have to reboot the devices when you install updates, the container can be updated on the fly,” enabling the model to be frequently retrained to recognize situations of interest to the command center. “Our EGX business is about building a platform to constantly update and deploy retrained models to the edge,” said Boitano.

The company said that Nvidia AI computing offered by major cloud providers is architecturally compatible with EGX, supporting hybrid and multi-cloud IoT. Edge Stack connects to major cloud IoT services, and remote management is done from AWS IoT Greengrass and Microsoft Azure IoT Edge.

EGX servers are available from ATOS, Cisco, Dell EMC, Fujitsu, HPE, Inspur and Lenovo, Nvidia said, along with server and IoT system makers Abaco, Acer, ADLINK, Advantech, ASRock Rack, ASUS, AverMedia, Cloudian, Connect Tech, Curtiss-Wright, GIGABYTE, Leetop, MiiVii, Musashi Seimitsu, QCT, Sugon, Supermicro, Tyan, WiBase and Wiwynn.

There are more than 40 early adopters of the platform, including BMW Group Logistics, Foxconn D Group, GE Healthcare, Harvard Medical School and data storage vendor Seagate Technology, according to Nvidia.

“Foxconn PC production lines are limited by the speed of inspection because it currently requires four seconds to manually inspect each part,” said Mark Chien, GM, Foxconn D Group. “Our goal is to increase the throughput of the PC production line by over 40 percent using the NVIDIA EGX platform for real-time intelligent decision-making at the edge. Our model detects and classifies 16 defect types and locations simultaneously using fast neural networks running on NVIDIA GPUs, achieving 98 percent accuracy at a superhuman throughput rate.”