‘A Digital Twin of Reality’: Toward Emotional AI and Hyperconnected Health Interventions

Riddle: It’s arguably the most powerful thing in the world and becoming more so, it’s everywhere yet we can’t see it.

Answer: Data. That is: AI data.

Here at Dell Technologies World in Las Vegas, CEO Michael Dell stated it this way: “We’re codifying the world, we’re creating a digital twin of reality, an alternate universe that is rich in data and analytics.”

By soaking up data from all things and wringing meaning from data in all its forms, existence is digitally transformed so that AI, to orthogonally quote the Bard, “finds tongues in trees, books in the running brooks, sermons in stones, and good in everything.”* Well, if not “good” then patterns and relationships in data that algorithmically lead to good outcomes.

Right, we know this, but as we hurtle into the data age it’s worth re-stating because the combination of the data deluge, AI, high performance compute/storage and 5G is gaining tremendous strength and momentum, with profound impact on critical aspects of life.

Death, and how to avoid it, is one of those aspects. The leading cause of mortality, of course, is our health (forgive the F.G.O. - Firm Grasp of the Obvious). Another source: driving. Thirty thousand deaths occur annually on American roads (many more people are seriously injured) and 1 million people are killed in cars globally. AVs (autonomous vehicles) that replace human drivers could radically reduce fatalities.

But before we get to robotic cars, AI and data collection strategies are under development to address human-caused accidents, such as driving while falling victim to a health emergency; or drowsy, drunk and distracted driving.

The latter problems have invoked work in the field of intuitive AI, a.k.a. artificial emotional intelligence. While it’s theoretically possible this will lead to forms of AI now seen only in movies, the intuitive AI we’re talking about, while impressive, doesn’t smack of science fiction. In fact, we’re talking about ingesting data generated by the face.

At Dell Technologies World, computer scientist, entrepreneur and Affectiva CEO Rana El-Kaliouby discussed the emerging discipline of “expression recognition.” The company, which grew out of MIT’s Media Lab, developed what it says is the first online face tracking system for assessing consumers’ responses to ads (Coca-Cola and a quarter of the F500 are customers).

“We’re on a mission to humanize technology,” she said, “to build machines that can understand all things human.” El-Kaliouby, a native of Egypt who earned advanced degrees in machine learning at Cambridge University, said she was “struck by how our machines know a lot of things about us, but they’re completely oblivious to our emotional and cognitive states…. We’re designing our technologies to have a ton of IQ – cognitive intelligence – but not enough EQ – emotional intelligence.”

She cited the well-known finding that 93 percent of human communication is non-verbal – tone of voice, how fast we speak, and facial and hand gestures have more impact than the actual words we choose. Affectiva enables software applications to use a webcam to track a person's smirks, smiles, frowns and furrows, and correlates them to emotional and cognitive states. The technology also can measure a person's heart rate from a webcam by tracking color changes in the face, which pulses each time the heart beats (more on health crises below).

Companies in the car industry use Affectiva to assess what drivers and passengers are thinking and doing. The company has built a video database of hundreds of thousands of hours of drivers (who opted in to the research project and consented to having their videos shared) from around the world. This work has implications not only for consumer satisfaction but also safety.

“We wanted to understand how people look when they’re driving,” El-Kaliouby said. “Now we assumed, since this was very clearly opt-in and there’s a big camera sitting on the dashboard…, we assumed people would be on their best driving behavior and we wouldn’t see any interesting data at all. We were wrong.”

She shared two alarming videos, one of a driver asleep (or nearly so) with an infant in the back seat, and another of a young woman occasionally glancing at the road while texting – not with one phone but with two.

“Her hands are not on the steering wheel and her eyes are not on the road,” El-Kaliouby said, “and with the eye gaze direction we can detect if she’s not looking at the road. And we have optic detectors that can determine she has not one but two cell phones in her hands. We can do that in real time and integrate that (with the car) to create some kind of interaction.”

This can happen in several ways – starting with alerts that escalate if driver behavior doesn’t improve. As cars become more autonomous, El-Kaliouby foresees a time when the car may pull itself off the road, or take control of the car away from the driver.
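The escalation idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the alert levels, the two-second threshold and the `DriverMonitor` name are hypothetical and do not describe Affectiva's product or any carmaker's system. It only shows how a stream of "eyes on road" observations might map to progressively stronger responses.

```python
from dataclasses import dataclass

# Hypothetical escalation ladder. The article mentions escalating alerts and,
# eventually, the car pulling itself over; these specific levels are assumed.
LEVELS = ["none", "chime", "loud_alarm", "pull_over"]


@dataclass
class DriverMonitor:
    seconds_per_level: float = 2.0  # assumed: one step up per 2 s of inattention
    inattentive_for: float = 0.0    # running total of continuous inattention

    def update(self, eyes_on_road: bool, dt: float) -> str:
        """Feed one observation covering dt seconds; return the current action."""
        if eyes_on_road:
            # Attention regained: reset the ladder.
            self.inattentive_for = 0.0
        else:
            self.inattentive_for += dt
        level = int(self.inattentive_for // self.seconds_per_level)
        return LEVELS[min(level, len(LEVELS) - 1)]


if __name__ == "__main__":
    monitor = DriverMonitor()
    for eyes_on_road in [False, False, False, False, True]:
        print(monitor.update(eyes_on_road, dt=2.0))
```

In this sketch the response resets as soon as the driver looks back at the road; a real system would presumably weigh many more signals, such as the hand position and phone detection El-Kaliouby described.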

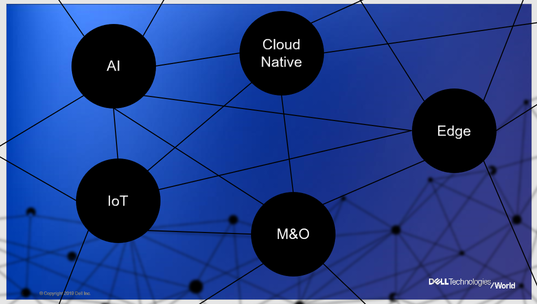

But what if drivers are incapacitated not by their own volition but due to a health emergency, such as a heart attack? That’s the scenario Brad Maltz, Dell EMC chief technologist, emerging technology strategy and development, discussed in a session focused on the interconnectedness of IoT, AI, edge, cloud native and M&O (management and orchestration). Although intuitively we can imagine the relationships between them, Maltz said he considers this to be “a very hard topic within the industry – when you try to take five technology domains, some of the biggest ones that people are discussing right now, and discuss if there are dependencies, are they connected, can you talk about one without talking about the others?”

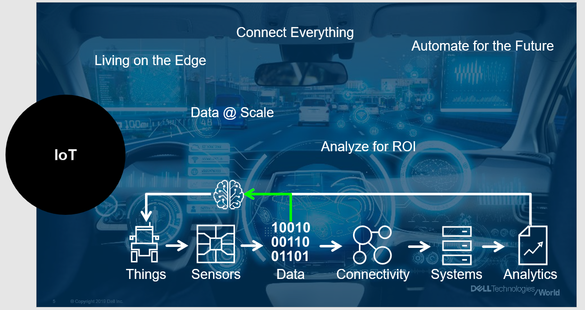

The driver suffering a heart attack, Maltz said, is a useful hypothetical because it can invoke the five domains. Here’s the story:

Joe is an older man with a heart condition that his doctor, Dr. Jenn, has been treating for years, and for which he has an intelligent pacemaker. Joe drives an AV (or, in Dell’s preferred parlance, an ICV – an interconnected vehicle). Joe signed up for real-time health monitoring, so his doctor and healthcare provider are connected to him, enabling ongoing, remote monitoring of his vital signs. The ICV also receives and understands Joe’s health data.

While driving 50 miles from his hospital, Joe has a mild heart attack. “What should happen here: we have an intelligent car, an intelligent pacemaker, we have everything connected on the back end,” Maltz said.

According to Maltz, we’re moving toward a time when all these devices and entities will be interconnected, AI will kick in and an entire rescue and triage effort will happen automatically.

“The smart cars of the future will have enough intelligence in them to connect to devices inside of you,” Maltz said, “and they’ll monitor your health statistics: from sensors in the seats, in the steering wheel, from cameras built into the cars, and by wirelessly connecting to devices if you have them on your person. The ICV should be connected to a hospital system..., that should automatically be routed up to the default hospital system that’s built into your profile.”

When Joe’s heart attack strikes, the ICV makes a 911 call for emergency help. “Joe didn’t have to do anything,” Maltz said, “that’s a great thing right there.”

Next: the ICV takes control of the car and stops it safely.

While doing so, the car alerts the hospital system on the back end, and the hospital alerts Dr. Jenn. As this happens, EMTs arrive in an ambulance and use a device to verify the health monitor alert: “they don’t have to guess, they don’t have to do any early-on triage work, the device is telling them why the car pulled over and why Joe is having problems.” They transport Joe back to the hospital and keep Dr. Jenn updated.

Making all this happen involves a considerable amount of IoT, edge, cloud, AI and M&O, all five entities working hard. And this, by the way, builds toward Maltz’s message: that Dell Technologies is putting together the integrated technology apparatus enabling disparate workloads and technologies to work together in a scenario like this.

The ICV, for example, has to be connected to a cellular network – soon enough, that will be 5G – enabling high-bandwidth connectivity with the hospital system, to Joe’s doctor and to core data centers, as well as to the rescuers.

While there are multiple edges in this scenario, Maltz breaks them into the “personal edge,” referring to all the individuals involved, and the “mobile edge,” referring to a car, an ambulance and anything else “that has movement and locality, that isn’t fixed at a given point in time.” There’s also the network edge – cell towers – and the enterprise edge, meaning the doctor’s offices and main data centers in the hospital.

“In each of these types of deployments, you’ll need different form factors, different types of devices, different types of connectivity…, there are so many things you have to take into account,” said Maltz.

AI is playing a central role throughout: AI analyzes data from the personal health monitor and then autonomously stops the car. The car also communicates with other cars that a health emergency is happening so that nearby cars steer clear of the ICV. “So I connected a health outcome from the monitoring device to the automobile world, tying it into a telco world.”

This, Maltz said, is where the overlap of domains comes in: “you need automotive, telco and healthcare to talk to each other to get through this scenario.”

This places a premium on high-grade M&O.

“You have to make sure you can push all AI models to the edge,” he said. “How do you continually deal with model management in this type of scenario? How do you deal with updating all the edge software to make sure they all speak the same language? How do we manage the data pipelines? We keep talking about data, but the reality is data is so important you have to build pipelines and data management platforms around this to deal with this data at scale. M&O will help you deal with that.”

Maltz concluded by extolling containers:

“In this scenario, all my workloads are containers, everything should be delivered through continuous delivery, development has to be lean-agile, make (applications) small, make them quick and iterative as possible. And you’re probably going to rely on Cloud Foundry, Kubernetes – all the public clouds have versions of this as well. You’re going to have to live in that world if you’re going to build a system like this.”

Finally, there is the key role of oversight:

“You have to handle all the application deployments to the correct locations. What if we push the wrong service out to the wrong device? What if I push out a traffic monitoring system to your personal health device? And you have to monitor and analyze everything. This is a life and death situation. If your systems have failures and they go down and have the wrong code or aren’t connected properly, if you’re not monitoring that and you’re not getting intelligence against that, you’re going to have a big problem.”
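Maltz’s wrong-service, wrong-device worry can be made concrete with a small sketch. It is not a description of any Dell, Cloud Foundry or Kubernetes feature: the `Service` and `Device` types, the device-type labels and the `plan_deployment` helper are all hypothetical names introduced here. The idea is simply that each service declares which kinds of device it may run on, and the deployment step refuses everything else.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Service:
    name: str
    allowed_device_types: FrozenSet[str]  # assumed: declared per service


@dataclass(frozen=True)
class Device:
    device_id: str
    device_type: str  # assumed labels, e.g. "vehicle", "personal_health"


def plan_deployment(
    service: Service, devices: List[Device]
) -> Tuple[List[Device], List[Device]]:
    """Split devices into (accepted, rejected) targets for this service."""
    accepted = [d for d in devices if d.device_type in service.allowed_device_types]
    rejected = [d for d in devices if d.device_type not in service.allowed_device_types]
    return accepted, rejected


if __name__ == "__main__":
    traffic_monitor = Service("traffic-monitor", frozenset({"vehicle", "cell_tower"}))
    fleet = [Device("icv-1", "vehicle"), Device("pacemaker-7", "personal_health")]
    accepted, rejected = plan_deployment(traffic_monitor, fleet)
    print("deploy to:", [d.device_id for d in accepted])
    print("refused:", [d.device_id for d in rejected])
```

Here the traffic monitoring service would be deployed to the vehicle and refused for the personal health device. The rejected list is returned rather than silently dropped so that it can feed the monitoring Maltz describes.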

* As You Like It