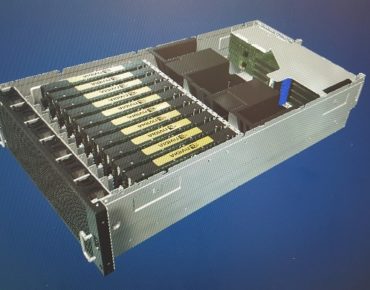

Dell EMC’s Powerhouse Machine Learning Server: up to 10 GPUs

Dell EMC DSS 8440 with 10 Nvidia GPUs

Dell EMC has launched a thoroughbred machine learning server for the data center and the edge. It is the most powerful machine in the company's line of AI servers, with four, eight or 10 Nvidia Tesla V100 GPUs (14 single-precision teraflops or 112 mixed-precision "AI" teraflops per GPU) and up to eight NVMe local storage drives.
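Multiplying the per-GPU figures by the GPU count gives the box's theoretical peak. The short sketch below does that arithmetic for the three configurations. It assumes perfect linear scaling and counts only the quoted V100 peaks, so it is an upper bound rather than a measured result.

```python
# Per-GPU peak figures for the Tesla V100, as quoted in the article.
FP32_TFLOPS_PER_GPU = 14
MIXED_TFLOPS_PER_GPU = 112


def aggregate_peak(num_gpus: int) -> tuple[int, int]:
    """Return (FP32, mixed-precision) theoretical peak TFLOPS for num_gpus V100s.

    Assumes perfect linear scaling; real training workloads fall short of this.
    """
    return num_gpus * FP32_TFLOPS_PER_GPU, num_gpus * MIXED_TFLOPS_PER_GPU


# The three GPU counts the DSS 8440 ships with.
for n in (4, 8, 10):
    fp32, mixed = aggregate_peak(n)
    print(f"{n:>2} GPUs: {fp32} TFLOPS FP32, {mixed} TFLOPS mixed precision")
```

A fully populated 10-GPU system therefore tops out, on paper, at 140 single-precision or 1,120 mixed-precision teraflops.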

In short: a lot of data, a lot of power.

Announced this morning on day two of Dell Technologies World, the Dell EMC DSS 8440 is a two-socket, 4U server designed for ML applications and other demanding workloads. The server isn't for machine learning rookies – it's built for organizations whose AI journeys have progressed to more sophisticated, complex models trained on larger amounts of data, where faster training and quicker training iterations are critical.

“Most people starting in AI don’t need eight GPUs,” said Dell EMC Senior Quality Engineer David Hillenmeyer at a pre-announcement event yesterday in Las Vegas. “It’s an extremely powerful box, but it’s not a starting point. Depending on where customers are on their journey, if they’re trying to understand where machine learning will help them, there are a variety of products, servers and accelerators that a customer can deploy.”

These include Dell EMC's 740 and 7425 servers, which support up to three GPUs, and the 4140, which supports up to four accelerators.


Hillenmeyer said the DSS 8440 is designed to meet demand for machine learning processing driven by the development of cloud-native applications that process massive amounts of data. Beyond raw compute, the server has high-speed I/O, with four Microsemi 96-lane PCIe switches and eight x4 NVMe PCIe slots.

Performance does not scale quite linearly as the number of GPUs in the server increases from four to 10, Hillenmeyer said, though it comes close. He shared data showing that for image recognition workloads, training takes roughly 1,000 minutes on a single Tesla V100 and about 180 minutes on eight V100s. For language translation, training takes 275 minutes on one V100 and 50 minutes on eight, and so forth.
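The quoted training times can be turned into a speedup (single-GPU time divided by multi-GPU time) and a parallel efficiency (speedup divided by GPU count). The sketch below applies those standard definitions to the two workloads Hillenmeyer cited. Note that his figures compare one GPU with eight, whereas his "comes close" remark referred to scaling from four to 10 GPUs within the server.

```python
def scaling(t1: float, tn: float, n: int) -> tuple[float, float]:
    """Return (speedup, parallel efficiency) when going from 1 GPU to n GPUs.

    speedup    = single-GPU time / n-GPU time
    efficiency = speedup / n  (1.0 would be perfectly linear scaling)
    """
    speedup = t1 / tn
    return speedup, speedup / n


# Approximate training times in minutes (1 GPU, 8 GPUs), as quoted in the article.
workloads = {
    "image recognition": (1000, 180),
    "language translation": (275, 50),
}

for name, (t1, t8) in workloads.items():
    s, e = scaling(t1, t8, 8)
    print(f"{name}: {s:.1f}x speedup on 8 GPUs, {e:.0%} efficiency")
```

Both workloads come out at roughly a 5.5x speedup on eight GPUs, or about 69% of ideal linear scaling relative to a single GPU.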

The server also supports Intel Xeon Scalable CPUs with up to 24 cores per processor.

For storage, the DSS 8440 supports up to ten 2.5-inch drives, or up to 32 terabytes of NVMe storage, "so you can have a large sample of training data, and fast access to it, to reduce your training times," Hillenmeyer said.

The company said the DSS 8440 has an open architecture based on an industry-standard PCIe fabric, allowing customers to customize accelerators, storage options and network cards. "This platform is designed to be open to support new technologies coming in this area," said Hillenmeyer. He said those include FPGAs and the Graphcore IPU machine learning processor, developed jointly with Dell EMC and due for release in the second half of this year.

Hillenmeyer said the server can be used in the core data center or out at the edge for workloads requiring low latency. “When we’re talking about generation of a large amount of data we can take this box out to where the data is being generated, as opposed to having to transport all of the data back to the core.”

It is set to ship this quarter.