AWS Upgrades its GPU-Backed AI Inference Platform

Source: Nvidia

The AI inference market is booming, prompting well-known hyperscaler and Nvidia partner Amazon Web Services to offer a new cloud instance that addresses the growing cost of scaling inference.

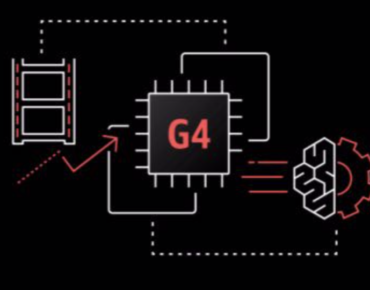

The new "G4" instances, announced this week, will employ Nvidia’s Tesla T4 GPUs, which are optimized for machine learning inference. Based on Nvidia’s Turing architecture, the T4 features 2,560 CUDA cores and 320 Tensor cores, providing a peak output of 8.1 teraflops of single-precision performance, 65 teraflops of mixed-precision, 130 teraops of INT8 and 260 teraops of INT4 performance.
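As a rough sanity check on those figures, the single-precision number can be reproduced from the core count. The sketch below is a back-of-the-envelope estimate, not Nvidia's published methodology. It assumes a boost clock of about 1,590 MHz, which is not stated in this article, and counts one fused multiply-add per CUDA core per cycle as two floating-point operations. It also shows that the quoted Tensor-core rates double each time the precision is halved.

```python
# Back-of-the-envelope check of the T4 peak figures quoted in the article.

CUDA_CORES = 2560              # from the article
BOOST_CLOCK_HZ = 1.59e9        # ASSUMPTION: ~1,590 MHz boost clock, not given in the article
FLOPS_PER_CORE_PER_CYCLE = 2   # ASSUMPTION: one fused multiply-add = 2 floating-point ops


def peak_fp32_teraflops(cores, clock_hz, flops_per_cycle=FLOPS_PER_CORE_PER_CYCLE):
    """Estimate peak single-precision throughput in teraflops."""
    return cores * clock_hz * flops_per_cycle / 1e12


fp32 = peak_fp32_teraflops(CUDA_CORES, BOOST_CLOCK_HZ)

# Tensor-core rates quoted in the article (teraflops for mixed precision, teraops for integers).
quoted = {"mixed precision": 65, "INT8": 130, "INT4": 260}

# Express each quoted rate relative to the mixed-precision rate.
ratios = {name: value / quoted["mixed precision"] for name, value in quoted.items()}

print(f"Estimated FP32 peak: {fp32:.1f} teraflops")
for name, ratio in ratios.items():
    print(f"{name}: {quoted[name]} tera-ops/s ({ratio:.0f}x mixed precision)")
```

Under those assumptions the estimate lands on the quoted 8.1 teraflops. The doubling from mixed precision to INT8 to INT4 is part of why lower-precision inference is attractive when throughput per dollar is the goal.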

Inference “is the big market,” Nvidia CEO Jensen Huang stressed during the GPU maker’s technology conference this week, with an estimated 80 to 90 percent of the cost of machine learning at scale devoted to AI inference.

The G4 instances running on the AWS Elastic Compute Cloud (EC2) are expected to be available in the coming weeks, according to Matt Garman, vice president of compute services at AWS. They will feature AWS-custom Intel CPUs (4 to 96 vCPUs), up to eight T4 GPUs, up to 384 GiB of memory, up to 1.8 TB of local NVMe storage, and an option for 100 Gbps networking.

Along with machine learning inference, the latest GPU service in the cloud targets video transcoding, media processing and other graphics-intensive applications, the partners said Monday (March 18).

“Machine learning is a great fit for the cloud,” Garman said. The cloud giant and the GPU specialist have been collaborating since 2010, when AWS released an EC2 instance based on a Tesla GPU built on Nvidia’s “Fermi” architecture, making it perhaps the first “GPU as a service.”

Despite the steady performance increases of GPU instances in the cloud, “a lot of our customers are still trying to figure out how exactly do you incorporate machine learning into your applications,” Garman said. Among the initial uses of GPU clusters in the cloud was running machine learning training applications.

As more ML training and testing shifted to the cloud, AWS rolled out its SageMaker machine learning and deep learning stack in 2017. It was designed to streamline a process that involves data preparation, selecting training algorithms, scaling to production and ultimately retraining the model.

The partners are touting their collaboration as a way to accelerate iteration of applications ranging from materials properties to drug discovery. Garman noted that AWS EC2 P3 instances running Nvidia’s Tesla V100 GPUs for HPC applications have reduced one startup’s drug design studies from two months to six hours.

The value proposition of running AI in the cloud extends to freeing up the precious time of data scientists and machine learning specialists, Garman stressed.

Google was the first cloud provider to offer the T4 and is currently providing beta access to the Turing GPUs on its cloud platform. The GPU is also available from system makers HPE, Supermicro, Dell EMC, Fujitsu, Cisco, and Lenovo. IBM said it intends to enable the Tesla T4 GPU accelerator on select Power9 servers.

Tiffany Trader contributed to this report.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).