IBM and Nvidia in AI Data Pipeline, Processing, Storage Union

IBM and Nvidia today announced a new turnkey AI solution that combines IBM Spectrum Scale scale-out file storage with Nvidia’s GPU-based DGX-1 AI server to provide what the companies call “the highest performance in any tested converged system” while supporting data science practices and AI data pipelines (data prep, training, inference, archive) in which data volumes continually grow.

Called IBM SpectrumAI with Nvidia DGX, the all-flash offering is designed to be an AI data infrastructure and is configurable from a single IBM Elastic Storage Server to a rack of nine Nvidia DGX-1 servers with 72 Nvidia V100 Tensor Core GPUs, up to multi-rack configurations. IBM said Spectrum storage scales “practically linearly” to meet the random-read data throughput requirements of feeding multiple GPUs. The system has demonstrated 120GB/s of data throughput in a rack, according to IBM.
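
To put the rack-level figures in perspective, the short Python sketch below works through the arithmetic: nine DGX-1 servers at eight GPUs each gives the 72 GPUs cited, and the 120GB/s rack figure can be divided across servers and GPUs. The even split is an illustrative assumption, not something IBM has stated, and the function and constant names are hypothetical.

```python
# Back-of-the-envelope arithmetic for the single-rack configuration.
# Figures come from the article; the even split of throughput across
# servers and GPUs is an assumption made purely for illustration.

SERVERS_PER_RACK = 9          # DGX-1 servers in one rack
GPUS_PER_SERVER = 8           # V100 GPUs per DGX-1
RACK_THROUGHPUT_GB_S = 120.0  # demonstrated rack throughput, per IBM


def rack_summary(servers=SERVERS_PER_RACK,
                 gpus_per_server=GPUS_PER_SERVER,
                 rack_gb_per_s=RACK_THROUGHPUT_GB_S):
    """Return total GPU count and average throughput per server and per GPU."""
    total_gpus = servers * gpus_per_server
    return {
        "total_gpus": total_gpus,
        "gb_per_s_per_server": rack_gb_per_s / servers,
        "gb_per_s_per_gpu": rack_gb_per_s / total_gpus,
    }


if __name__ == "__main__":
    for name, value in rack_summary().items():
        print(f"{name}: {value:.2f}")
```

Under that assumption, each server would see a little over 13GB/s on average and each GPU a little under 2GB/s; real workloads are unlikely to divide so evenly.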

As with other AI multi-vendor partnerships involving Nvidia, the offering is built to handle tasks that would otherwise require data scientists.

“The key thing is we’re removing the impediments to getting AI to be an automated process,” Eric Herzog, VP, Product Marketing and Management, IBM Storage Systems, told EnterpriseTech.

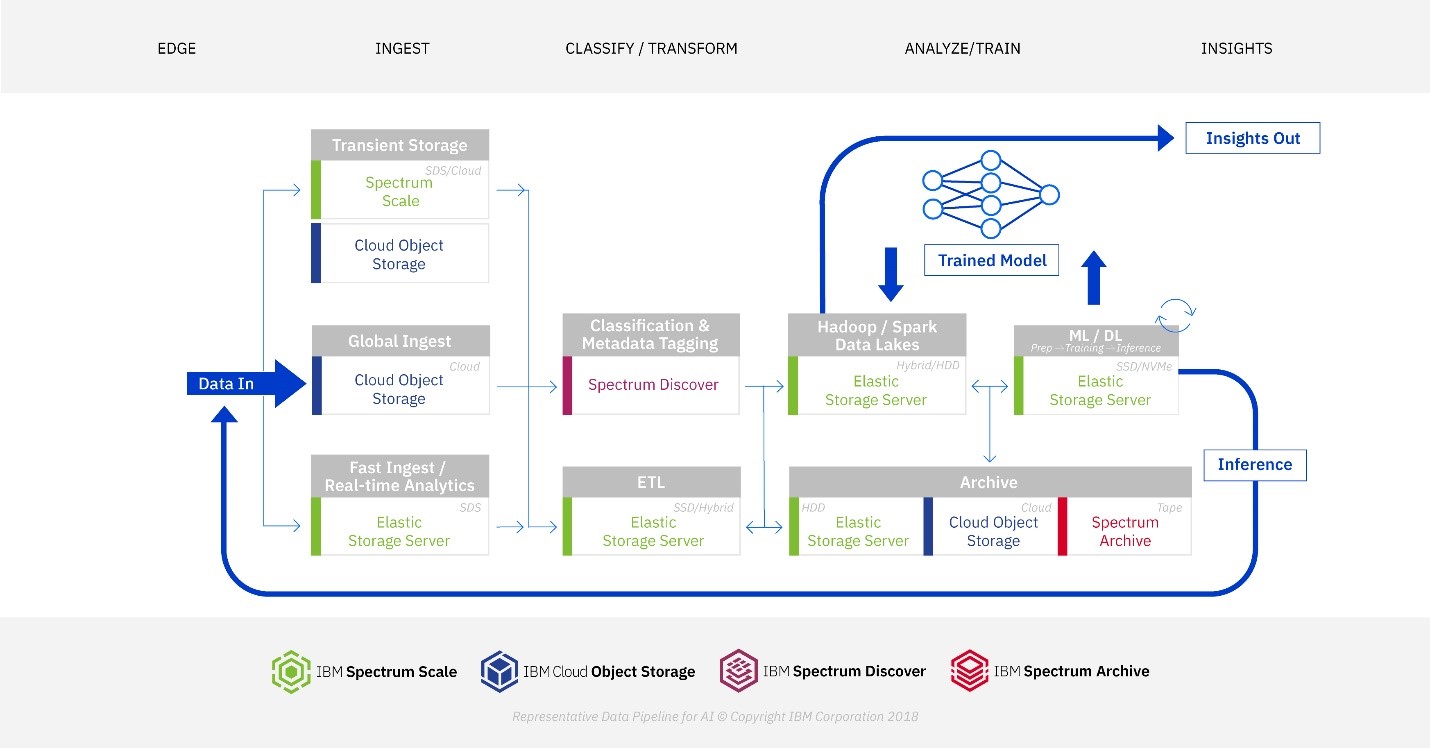

He said the reference architecture for SpectrumAI with Nvidia DGX encompasses IBM Spectrum Scale, for high performance data ingest; Spectrum Discover, to make data accessible via data cataloguing and indexing within an unstructured environment; and an API and software that re-uses the workflows created by Spectrum Discover. “You can use the workflows over and over again,” Herzog said, “minimizing the time the data scientists spend on data prep – it’s automated.”

SpectrumAI provides services across different storage choices, including AWS. The Spectrum Scale parallel file system can share data with IBM Cloud Object Storage and tape with shared metadata services provided by Spectrum Discover.

Herzog said Spectrum Scale has the capacity to support expanding, data-hungry AI implementations at scale.

“It’s important that it be able to grow,” he said. “IBM Spectrum Scale and IBM Cloud Object Storage have, in production today, installations of more than an exabyte. And both of them can scale to multi-exabyte configurations. In fact, this reference architecture is spec’ed up to a capacity of 8 exabytes – not counting the archive side, just counting the primary storage side. So it lets you create giant sets needed for AI. AI is mimicking what we do from a human perspective and allow this vast amount of data to be absorbed.”

Built specifically for AI implementations, DGX-1 servers contain eight Tesla V100 GPUs and deliver a petaFLOPS of throughput, with 256 GB of system memory, according to Nvidia. The DGX software stack is designed for GPU-accelerated training, including the RAPIDS framework. Nvidia said adoption of new AI frameworks is simplified by the container model supported by the Nvidia NGC container repository of applications.

Today’s announcement adds to the number of major server, analytics and storage vendors that have aligned their AI go-to-market strategies with Nvidia DGX technology. In October, high performance storage company DDN announced a set of solutions that combine the A3I (Accelerated, Any-Scale AI) platform with Nvidia DGX-1 AI servers in a pre-configured solution. Similar to the IBM-Nvidia offering, the DDN-Nvidia solution comprises three reference architectures, starting at a single DGX-1 with a single storage box and scaling up to a "DGX-1 Pod" with nine DGX-1s. In August, Dell EMC launched Ready Solutions for AI, a technology stack including AI frameworks and libraries, compute, networking, storage and deployment services built around Dell EMC PowerEdge servers. Also in August, NetApp announced a DGX-based AI platform called NetApp Ontap AI that combines NetApp’s hybrid cloud data services, AFF A800 cloud-connected all-flash storage and NetApp Data Fabric, a data management technology that supports an edge-core-cloud data pipeline.

“IBM's strategy is to make AI/ML/DL more accessible and more performant,” said Ashish Nadkarni, group VP, infrastructure systems, platforms and technologies, at industry watcher IDC. “IBM SpectrumAI with Nvidia DGX is designed to provide a tested and supported platform. For those who are choosing Nvidia DGX servers for the open source frameworks and high-throughput GPU platforms, IBM Spectrum Scale can add intelligent, scalable, secured, metadata-rich, cloud-integrated, multiprotocol, high performing, and efficient storage in an easy to deploy solution from their top tier business partners.”

Herzog said the offering will be sold strictly through an IBM and Nvidia reseller channel of five companies, which he said will expand next year.