Scaling Commercial CFD Code at 3 Supercomputing Centers

The year isn’t over yet, and already we've seen new records posted for proprietary physics-based simulations on two Cray machines – at the National Center for Supercomputing Applications (NCSA) and at King Abdullah University of Science and Technology (KAUST) – successfully pushing the limits of all the available nodes in those large machines.

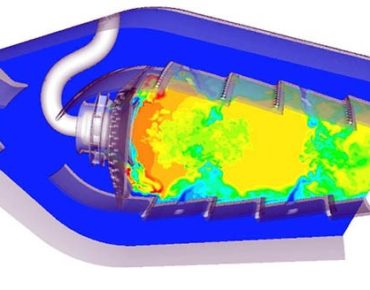

In addition, order-of-magnitude improvements have been recorded this year in scaling for the commercial 3D fluid dynamics codes ANSYS Fluent (8x) and CD-adapco’s Star-CCM+ (50x) using the Blue Waters supercomputer at NCSA. Fluent then eclipsed its Blue Waters record of 129,000 cores by reaching 172,032 cores at 82 percent efficiency on Hazel Hen at HLRS in Stuttgart in 2016.

It wasn’t always like this.

These improvements result from a concerted effort on the part of three supercomputing centers known for deep engagements with specific industrial consumers of HPC. Years of effort and numerous iterations of software run-break-fix-run-break-fix cycles helped improve both the underlying physics and the scaling of industrial codes of interest.

Key components of this success are:

- HPC centers with intimate knowledge of codes used in industry

- Centers with deep understanding of the science represented in the simulations

- Companies that value the use of HPC

- Centers with favorable practices of granting industrial access to HPC

Early adopters of commercial simulation codes, such as those from CD-adapco and ANSYS, occasionally have access to HPC. Academics focusing on algorithms often develop these tools on desktop computers. Few, if any, commercial independent software vendors (ISVs) have adequate access to HPC to make significant improvements. Few HPC centers have hands-on experience in using ISV codes. This combination – lack of access for ISVs and lack of ISV expertise at HPC centers – may be responsible for ISVs’ unearned reputation that their codes aren’t scalable.

The reason that at-scale access matters for code development is similar to the race-track effect for automobile manufacturers. Things break at track speeds that don’t break in everyday use. And code development methodologies are not sufficiently predictive of at-scale demands to eliminate the run-break-fix routine.

So what changes led to these at-scale accomplishments? It was a combination of shared focus within the industrial community, along with Blue Waters, a team of experts familiar with these specific ISV codes, and leaders who cared about the commercial community.

The dominant model for industrial users to gain access to at-scale HPC at supercomputing centers is through the front door, with proposals, reviews and grants. But when dealing with across-the-board uncertainty (codes, model fidelity, access, expertise), the typical grant review model creates barriers. In the case of these recent breakthroughs, a back-door approach was used to bypass the front-door permission path.

A core team of innovators collaborated for more than six months to scale Alya, a multiphysics code from the Barcelona Supercomputing Center. The Alya code developer spent time in Champaign working closely with NCSA’s Private Sector Program (PSP) team. This work resulted in highly efficient scaling to 100,000 cores[i], which gained the respect of the community and major headlines about how engineering code might ultimately be exascale-worthy. The accomplishment won an HPCwire 2014 Readers’ Choice Top Supercomputing Achievement award.

This success fostered enough confidence among the team to attempt the same with commercial 3D physics simulation codes. Two ISVs and their Fortune 100 manufacturing customers were eager to engage. On the NCSA team were domain experts, programmers, HPC experts and business impact leaders.

It wasn’t always like this.

NCSA’s PSP team gained inspiration from HLRS in Germany, the only other known HPC center to have collaborated formally with a commercial ISV. The NCSA team then leveraged the success with the Barcelona Alya code by repeating the effort on Blue Waters with closed commercial codes. The result was breakthrough performance that has since improved code capability at all scales, including at the desktop level.

Innovation efforts such as this have been stunted by lack of access to HPC due to policies and practices at supercomputing centers. Access for the sole purpose of code scaling is rare – particularly if it’s not associated with a clear scientific directive. Numerous industry proposals have failed to be approved on U.S. supercomputers because of two prevailing practices:

1) a systemic bias toward reserving HPC for agency mission efforts or new science

2) an expectation that companies have enough money to buy their own HPC

Never mind that these breakthroughs benefit the entire commercial manufacturing sector, as well as the supply chain responsible for making products for the defense sector, and the biomedical professions that need deeper understanding of human respiratory and circulatory systems (fluids and structures). Broad sector benefits coming from well-developed commercial codes lift all boats.

The leadership necessary to support potential impact such as this is rare.

Sector-wide innovation policy is rare.

The biggest barrier to sector-wide innovation is not just policy, but practice.

We can only hope it’s not always like this.

Merle Giles is CEO of Moonshot Research LLC. He was named an HPCwire ‘Person to Watch’ in 2014.

[i] Osseyran/Giles, Industrial Applications of High-Performance Computing: Best Global Practices, CRC Press (www.crcpress.com Catalogue #K20795), ISBN 978-1-4665-9680-1